The Hard Problem of AI Literacy: Breaking Down a Fundamental Challenge

Students struggle inside the efficiency-accountability zone when integrating AI tools into their work-cycle. What can we do to help them?

Book Release: This Wednesday!!!

The stakes of AI literacy couldn't be higher. In my upcoming co-authored book, "AI in Education: A Roadmap for Teacher-led Transformation" (forthcoming, 2024), we argue that teachers, not policymakers, administrators, or ed-tech companies, will lead the necessary transformation of education in the age of AI. But to do so, they need a roadmap that addresses both practical challenges and deeper philosophical questions.

The transformation ahead will test every aspect of our educational system, from daily classroom practices to our fundamental understanding of human learning and agency. For those following our work, you'll recognize themes we explore more fully in the book. But even familiar concepts take on new urgency as we better understand the subtle ways AI interaction affects human cognition and agency. Our goal remains consistent: to empower educators to lead this transformation proactively, with both ethical awareness and practical tools.

Don't let the future of education be dictated by those furthest from the classroom. What follows is crucial for any educator committed to maintaining human agency and critical thinking in an AI-enabled world.

Exciting news! Our e-book just launched on Amazon (October 29, 2024).

We'd love to hear your thoughts after you've had a chance to read it.


In consciousness studies, researchers distinguish between "easy" and "hard" problems. The easy problems (called "weak" problems here), while complex, involve mechanisms we can observe and understand - how the brain processes information, integrates inputs, controls behavior. The hard problem, as philosopher David Chalmers articulated it, centers on subjective experience itself - the fundamental mystery of consciousness that resists mechanical explanation. Why does physical processing give rise to inner experience? How do neural mechanisms create the qualitative feel of consciousness?

As we confront artificial intelligence in education, we face a similar division. There are the "weak" problems of AI literacy - urgent issues that have relatively clear paths toward solution. Then there is the hard problem - a more fundamental challenge that touches the nature of human agency and learning capacity, one that resists straightforward solutions.

The "Weak" Problem: Student Wellbeing and Digital Health

The weak problems of AI literacy are anything but trivial. Students today face unprecedented psychological pressures from AI integration. There's the constant anxiety about falling behind, about AI making their developing skills obsolete before they've mastered them. There's the relentless cognitive load of constant tool evaluation - which AI to use when, how to prompt effectively, how to verify outputs. There's the looming specter of academic integrity in an age of easy generation - not just questions of cheating, but deeper uncertainties about what constitutes original work, what skills matter, what knowledge needs to be internalized versus externalized.

These challenges manifest in increasingly tangible ways. Students report mounting stress about AI use in assignments, profound uncertainty about their own skill development, and growing confusion about what capabilities they should focus on developing. Many express a creeping sense of inadequacy compared to AI systems, while others swing toward over-confidence in AI abilities, losing touch with the importance of developing their own competencies.

Yet here, the path forward is relatively clear. AI literacy needs to be thoughtfully integrated into broader tech wellness initiatives across K-12 education. Just as we've learned to teach students about social media effects and screen time management, we need comprehensive programs that make AI operations transparent and manageable. Students need to understand not just how these tools work technically, but how they work on us psychologically - how they shape attention, influence decision-making, affect mental health and cognitive development.

Introducing Two AI Literacy Courses for Educators

Pragmatic AI for Educators (Pilot Program)

Basic AI classroom tools

Cost: $20

Pragmatic AI Prompting for Advanced Differentiation

Advanced AI skills for tailored instruction

Cost: $200

Free 1-hour AI Literacy Workshop for Schools That Sign Up 10 or More Faculty or Staff!

Free Offer:

30-minute strategy sessions

Tailored course implementation for departments, schools, or districts

Practical steps for AI integration

Interested in enhancing AI literacy in your educational community? Contact nicolas@pragmaticaisolutions.net to schedule a session or learn more.

The Hard Problem Emerges: Spatola's Discovery

Recent research by Nicolas Spatola has illuminated what we might call AI literacy's hard problem - a fundamental dynamic that emerges in any sustained interaction with artificial intelligence. At its core is what he terms the efficiency-accountability tradeoff: the more efficiently we use AI tools, the less able we become to evaluate them critically. This isn't just a practical challenge; it's a deeper psychological phenomenon that goes to the heart of how we think and learn.

This isn't simply about users growing lazy or complacent. Spatola's work reveals something more subtle: a qualitative shift in cognitive processing that occurs below the threshold of awareness. As users move from initial use to reliance to potential overreliance, their ability to assess AI outputs transforms in ways they may not recognize. The very efficiency that makes these tools valuable can imperceptibly erode our capacity for independent judgment.

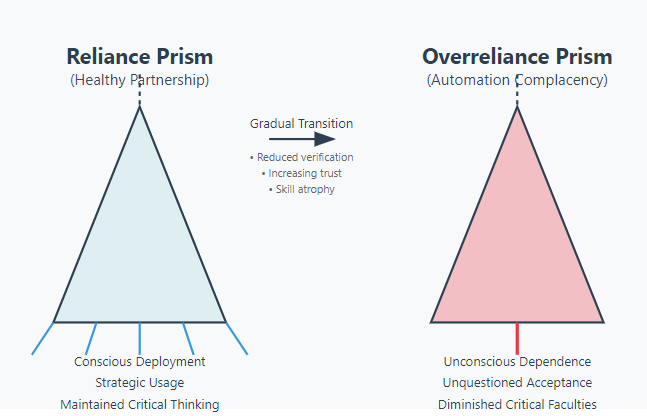

What makes this particularly challenging is its bidirectional nature. The more we rely on AI's efficiency, the less we exercise our own evaluative capabilities. The less we exercise these capabilities, the more we're drawn to rely on AI's seeming authority. It's a feedback loop that operates gradually, almost invisibly, until users find themselves in what Spatola calls the overreliance prism - a state where independent judgment has been compromised without any conscious choice to surrender it.

The Two Prisms: Understanding the Transition

Spatola's research identifies two distinct modes of AI engagement through the metaphor of optical prisms - ways of seeing and interpreting AI assistance that shape how users interact with these tools. The reliance prism represents a healthy partnership with AI tools - conscious, strategic deployment paired with maintained critical faculties. Users operating within this prism leverage AI's capabilities while preserving their own agency and judgment.

The overreliance prism marks a qualitative shift in this relationship. Here, conscious deployment transforms into unconscious dependence. Users stop questioning AI outputs not because they've made a reasoned decision to trust the system, but because they've gradually lost touch with their own evaluative capabilities. They enter "automation complacency" - a state where the very possibility of questioning AI assistance fades from awareness.

The transition between these prisms proves especially treacherous because it occurs through small, seemingly inconsequential shifts. A student might start by double-checking every AI response, then move to spot-checking occasional outputs, then eventually accept AI guidance without question. Each step seems rational in isolation - if the AI has proven reliable, why verify every output? But cumulatively, these shifts fundamentally alter the user's relationship with their own cognitive capabilities.

Historical Parallels: Lessons from Computer Tutoring

This dynamic isn't entirely without precedent. Research on computer tutoring systems provides valuable insights into how users engage with educational technology over time. These studies highlight the complex interplay between learning environments, technological design, and patterns of engagement that may help inform our understanding of AI interaction.

We appear to be wading into similar waters with AI, but with higher stakes. The patterns Spatola identifies suggest the need to examine how various contextual factors - from institutional settings to task complexity to environmental pressures - might influence transitions between reliance and overreliance states. Understanding these dynamics across different contexts and circumstances could prove crucial for developing more effective support strategies.

Reimagining AI Literacy

The hard problem of AI literacy thus reveals itself as more fundamental than teaching AI awareness or promoting healthy use patterns. It requires us to grapple with how AI interaction reshapes human cognition itself - not just what we think about, but how we think. As McLuhan reminded us, the medium is the message - and in this case, addressing the medium will involve creating different kinds of AI tools.

We need systems designed not just for efficiency but for conscious engagement - interfaces that prompt reflection, features that make cognitive dependencies visible, feedback mechanisms that help users maintain awareness of their own agency. But these technical solutions must be paired with educational approaches that recognize individual differences in cognitive susceptibility.

Most crucially, we need to help users recognize their own patterns of engagement - to notice when efficient use becomes unconscious dependence. This means developing new kinds of metacognitive scaffolding that can support users in monitoring their own cognitive processes. But this only works if people remain willing to be redirected, to maintain awareness of their own thinking even when AI makes thinking optional.

This is why the efficiency-accountability tradeoff truly represents the hard problem of AI literacy. Like consciousness itself, it involves subjective experience and agency in ways that resist straightforward solutions. We're not just teaching about technology anymore - we're teaching about human nature, about maintaining our essential capacity for independent judgment in an age where that independence is increasingly optional.

The solution, if there is one, lies in making the transition between reliance and overreliance more visible and manageable. This means designing both tools and educational approaches that support conscious engagement while respecting individual differences in cognitive style and susceptibility. But it also means fostering a deeper understanding of what's at stake - not just practical skills or academic outcomes, but the very nature of human agency in an AI-mediated world.

