"Could ChatGPT Do This Overnight?" If Yes, Redesign It.

Six filters for creating meaningful learning experiences in the age of AI


A growing number of teachers are asking the right question: "If AI can complete this task in seconds, is it still worth assigning?"

It's not just a question about cheating. It's a signal that we're ready to rebuild instruction from the ground up, not to resist AI, but to create learning experiences where AI becomes a catalyst for deeper engagement rather than a shortcut around it.

We're not trying to outsmart machines. We're trying to design learning where AI can enhance the work without stealing away the thinking.

This article offers a practical toolset to do just that.

From Assignment to Experience

The moment students can type your assignment into a chatbot and get a finished response instantly, the learning value of that task is at risk. But this doesn't mean we need to throw out everything. It means we need a framework for upgrading our current work.

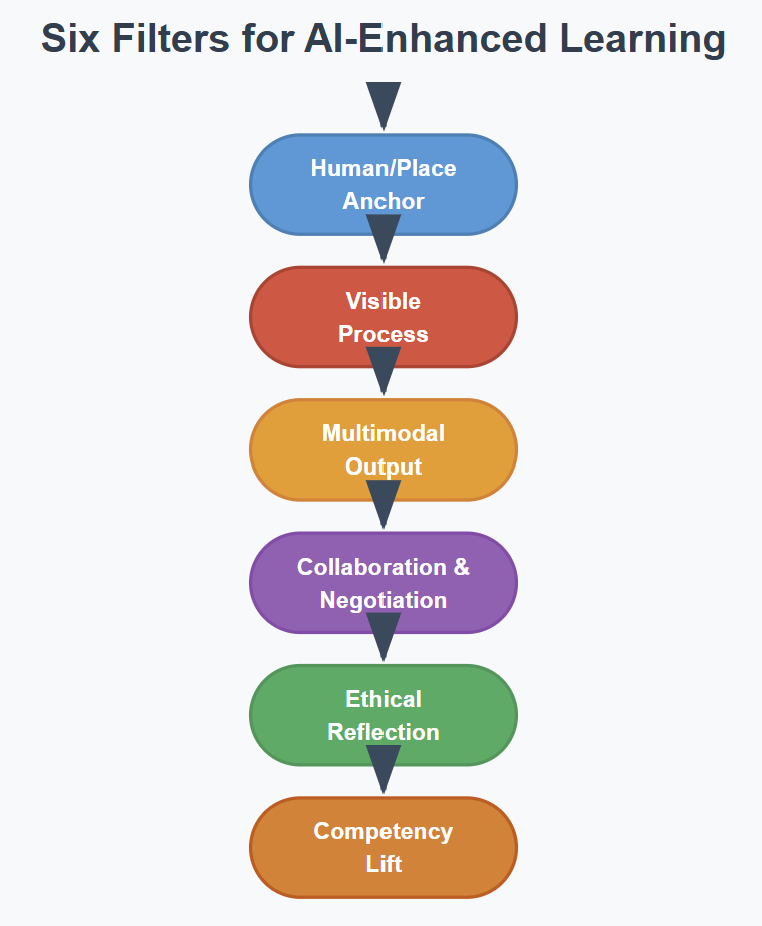

What follows is a six-filter redesign model to help teachers transform AI-vulnerable assignments into learning experiences where AI becomes a thinking partner rather than a replacement for student agency.

These six filters aren't limitations. They're creative prompts, each one pushing instruction toward the kind of sustained engagement where AI tools can amplify learning rather than bypass it.

The Six Filters of AI-Enhanced Learning

Use these filters to analyze a current task or build a new one from the ground up.

1. Human/Place Anchor Prompt: Does the task require connection to a real person, physical place, or lived experience?

When learning is grounded in direct experience, AI becomes a research tool and thinking partner rather than a content generator.

Example: Students investigating local water quality collect and test samples from three nearby sources (school fountain, local creek, home tap). AI helps them research testing methods and interpret results, but the data comes from their own collection. They present findings to the school facilities manager with specific recommendations, including cost estimates AI helped them research.

Why it works: AI can enhance their investigation, but the core learning emerges from their direct engagement with place and people.

2. Visible Process Prompt: Can we see how students arrived at their conclusions, not just what they turned in?

When we design for transparency, AI becomes part of the documented thinking process rather than a hidden shortcut.

Example: For a persuasive essay about school policy, students submit three drafts with revision notes explaining each change. They document which AI suggestions they used, rejected, or modified, and why. The final portfolio includes their thinking process, not just the polished essay.

Why it works: The "thinking trail" shows how students used AI as a collaborator in their intellectual development.

3. Multimodal Output Prompt: Are students communicating their ideas through more than one mode: visual, oral, physical, or digital?

When students work across formats, AI becomes one tool among many rather than the primary creator.

Example: Instead of a traditional book report, students create a 3-minute book trailer that includes their own filmed scenes, AI-generated background music, and a live pitch to younger students during lunch. The format requires them to distill key themes while creating something genuinely engaging.

Why it works: The format variety creates natural opportunities for AI collaboration without AI domination.

4. Collaboration & Negotiation Prompt: Does the work require students to co-create meaning, build consensus, or respond to each other in real time?

Human collaboration creates the kind of dynamic thinking that benefits from AI input without being replaced by it.

Example: Groups plan the school's Earth Day assembly with a $200 budget. AI helps with research and logistics, but groups must negotiate with each other for time slots, coordinate shared resources, and adapt when the gym is double-booked. Real constraints force real problem-solving.

Why it works: AI can inform the conversation, but the learning emerges through human interaction and real-time thinking.

5. Ethical Reflection Prompt: Does the task include space to reflect on the ethical, social, or emotional implications of the topic or of using AI itself?

Ethical reasoning creates natural space for students to engage with AI as a subject of inquiry rather than just a productivity tool.

Example: Students design an anti-bullying campaign for middle schoolers. After using AI to generate poster concepts, they interview actual middle school students about what would feel authentic versus preachy, then revise their approach based on this feedback and reflect on assumptions they initially made.

Why it works: AI can provide information and perspectives, but students must grapple with values, make judgments, and take stands.

6. Competency Lift Prompt: Does the task strengthen a core human capacity students will need in an AI-enhanced world?

When tasks focus on developing essential thinking capacities, AI naturally becomes a tool for building rather than replacing these skills.

Consider these five essential human capacities:

Deep reading & source interrogation

Deep numeracy & quantitative thinking

Cultural & historical contextualization

Creative & systems thinking

Multimodal communication

Example: Students analyze why their cafeteria runs out of pizza every Tuesday. AI helps with data analysis and research into supply chain logistics, but students survey peers, interview cafeteria staff, track actual lunch line patterns for a week, and present actionable solutions to the nutrition director.

Why it works: AI supports the thinking work rather than doing it. Students develop the capacities they need to think critically with AI rather than defaulting to AI thinking.

Nick Potkalitsky, Ph.D.

Check out some of our favorite Substacks:

Mike Kentz’s AI EduPathways: Insights from one of the most insightful, creative, and eloquent AI educators in the business!!!

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!!!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection among computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s The Computerist Journal: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Jason Gulya’s The AI Edventure: An important exploration of cutting-edge innovations in AI-responsive curriculum and pedagogy.

I'm partnering with an administrator to use AI and emergent structures during his instructional coaching process. The idea involves a three-legged stool: the instructional coach, teacher, and students (the three legs) form a partnership. The purpose is to co-design a future (walk-through) as a partnership in which each member's learning objectives are revealed through role-play. Trust, innovation, communal possibility, commitment, and chosen accountability arise from emergent practices. Class members transition from competitors to collaborators.

The administrator goes From: Observer as knower, judge, and provider of feedback and points

To: Facilitator in partnership with teacher and students, as learners, producers, and designers.

Nick, great work here.

I strongly request that you add a key step, please: implore all public school educators to reflect very closely on Step 3, output.

Add a bit of step 5 right there with step 3, output - have your students answer these questions:

Prior to Gen AI tools, which professional (or professionals) would have made this output?

Do I want people who don't use these tools to be able to make a living wage (or more) producing this output?

How do I support that?

Background: In my AI and Ethics class, we have curricular time and focus to examine when we keep using an AI tool for creative output and which professional (or professionals) might have done this output in 2021 (before public generative AI tools like OpenAI's ChatGPT rolled out in Nov 2022).

Next, the AI and Ethics course here specifically creates room to discuss, research, and write about the question itself within Postman's question of a Technopoly. Does this gen AI version of the output equal, detract from, or add to the value of the output of people in that field prior to public gen AI tools? For instance, being able to quickly generate AI-made Studio Ghibli-style art for a presentation has an impact in multiple ways.

No matter the educator's subject, if they are teaching 10th, 11th, or 12th grade, educators must force this issue daily, with nuance and evidence, within their discipline. Asking these questions in 2025 helps sustain a larger question about art, people, and value, one that could quickly shift if each individual student doesn't have the chance to consider the economic impact of step 3 repeated transactionally outside of school. That is happening already, but it would likely happen more if teachers are not sensitive to reducing artistry to "output".

For instance, I winced a bit at your example of outsourcing music. I want my students to think about the artistry in the world around them, consider what they like, and mindfully consume and support good artistry. For example, one of my students, who is now attending film school, spent a night last March distressed about the amount of AI-generated music in the Apple Music app. We took class time the next week to listen to the music, look at the artists' web presence, and then discuss the positives and drawbacks of projects like "The Tommy Lasorda Experience" being positioned alongside people living day-to-day lives trying to promote their music. We then contacted that band via e-mail to ask how much of their content was AI generated, whether that was a statement or parody, and what thoughts they had about people scaling out with gen AI to fill up outlets like Apple Music, Pandora, etc. Rather quickly, that band changed their web presence and started to identify the project as somewhat of a social experiment and a parody of multiple things, all AI generated, every bit. They never answered our e-mail, though.

I'm cutting back on almost all gen AI output in creative areas within my classroom assignments. I hope a vast majority of public school teachers outside of art classes do as well. My goal is for each of my students to thoroughly understand the economic shift imposed by larger tech companies on these areas of creativity, outside of their own tech tools.

This is a small business issue, an entrepreneurial issue, then a political issue, but also an issue of social appreciation outside of economic value.

I would like to hear your viewpoint. If you agree, please at least add this perspective as an asterisk for step 3, or maybe even consider an adjustment.