Educating for AI fluency: Managing cognitive bleed and AI dependency

A Guest Post by Nigel P. Daly

We at Educating AI empower over 4,000 education professionals - from classroom teachers to policy developers - to navigate the rapidly evolving landscape of AI in education. Through rigorous, independent journalism, we deliver in-depth investigations and analysis of AI learning tools that help educators, researchers, and parents make informed decisions. Our detailed coverage digs beneath the surface to examine how these technologies truly impact teaching and learning.

Support our mission by upgrading to a paid subscription. Your investment enables us to continue delivering thorough, critical analysis of the AI education ecosystem, helping shape better outcomes for students and educators alike. —Nick Potkalitsky, Ph.D.

Author Bio:

Nigel P. Daly has a PhD in TESOL (Teaching English to Speakers of Other Languages) and has been teaching Academic and Business English and communication skills in Taiwan for over 28 years. He is a business communications instructor at a government training center for university post-graduates and corporate clients. His research and publication interests cover a wide range of topics within the fields of EFL pedagogy, education technology, applied linguistics, and communication strategies. Since 2023, his focus has shifted to AI use in the classroom and AI workflows and literacy. His publications span SSCI academic journals, textbooks, dozens of newspaper articles and op-eds, and since mid-2024, two Substacks: one focuses on bilingual stories for learning English and Chinese, and the other is called Cognitive Bleed—a collection of essays, book reviews, and parables exploring the bleeding intersections of language and cognition in human and AI interaction.

Abstract

Generative AI tools like ChatGPT are revolutionizing how we work and learn, but they also come with risks: overreliance on AI can weaken critical thinking, creativity, and confidence. This article introduces the concept of cognitive bleed, the seamless integration of human and AI cognition, which can either enhance thinking or lead to cognitive atrophy. By framing AI fluency as an expanded form of communicative competence, the essay explores practical ways to train individuals to engage with AI thoughtfully, using structured workflows that balance human and AI input. Drawing on real-world examples from business communication students in Taiwan, the article shows how AI can be a powerful ally in language learning and communication if it is managed wisely. Rather than resisting AI outright, educators may sometimes find that a controlled dependence is appropriate. Whether it is appropriate depends on the learners' needs, proficiency, and time frames, and on keeping the mindset of using AI to enhance human ability rather than replace it.

Introduction

“AI tools helped me a lot… even changed my life,” said Chloe, one of my Taiwanese business communications students, reflecting on her use of genAI tools like ChatGPT. She credits them with boosting her English skills, immediately lifting her writing from an IELTS (International English Language Testing System) level of 6.5/9 to 9/9.

For Chloe, learning to use AI opened career doors, and she now uses it more than 20 hours a week in her marketing job. But there’s a catch: “I think I overdo it. I’ve started to feel less confident in my own English ability.”

Like many genAI users, Chloe became reliant on AI. Perhaps even over-reliant on it.

This article …

discusses AI dependency and the problem of cognitive atrophy

outlines the cognitive and linguistic framework for a solution, AI fluency

briefly describes how I implement AI fluency training with workflows and a structured hybrid human-AI writing assignment

shows a sample from a student, and

explains how my teaching and expectations have changed since I began using ChatGPT.

AI dependence and cognitive atrophy

AI tools like ChatGPT are excellent for enhancing efficiency and offering personalized experiences that improve our learning, working, and day-to-day lives. But their ease of use for any cognitive task has a dark side: AI dependence. Pervasive AI use means habitual cognitive offloading, which can lead to the kind of lost confidence Chloe describes and even to cognitive atrophy, such as reduced critical thinking, analytical skills, and creativity (Dergaa et al., 2024).

Cognitive atrophy can be explained by the brain's "use it or lose it" principle, whereby underused cognitive pathways weaken over time (Shors et al., 2012). Such underuse is increasingly becoming the norm for workers and students who hand complex tasks like problem-solving and decision-making over to AI.

The personalized and dynamic nature of genAI interactions marks an evolutionary leap in technology—it is the first general purpose technology (GPT) that literally speaks our language. Because of this, genAI can directly and immediately scaffold, support and infiltrate all forms of thinking, from text to math, from image to coding.

The convenience, immediacy, and personalization of AI can easily foster a dependency that can reduce mental engagement, shorten attention spans, weaken memory recall, and diminish capacity for independent thought.

Research links overreliance on AI tools to reduced critical thinking and increased cognitive offloading, in which individuals delegate more and more mental tasks to AI systems (Gerlich, 2025). This dependency can create a feedback loop that diminishes the user's ability to engage deeply with information and solve problems independently.

Gerlich (2025) found that two groups are most at risk. The first is younger individuals, who show greater dependence on and trust in AI tools. The second is people with lower educational attainment, who may lack the training to critically evaluate AI-generated information and stay cognitively engaged.

We need to educate and train our students (and ourselves!) in AI fluency to prevent declines in cognitive ability and confidence. This means that we, as educators and users of AI tools, need to recognize that genAI tools are unlike any we have used before: human-AI interaction interfaces intimately with our cognitive and linguistic systems and processes. Generative AI and large language models (LLMs) are a double-edged sword that cuts, and bleeds, both ways. This is cognitive bleed.

Cognitive bleed—Human-AI interfacing

Cognitive bleed is the fluid integration of human cognition and generative AI systems, mediated primarily through language (see Daly, 2024, for a more detailed framework). It can be either good or bad: a blood transfusion that enhances thinking ability or a hemorrhage that diminishes thinking agency.

The cognitive bleed interface can be described as System 0 thinking: a process of collaborative human-AI cognition experienced on a continuum from the conscious to the non-conscious. Chiriatti et al. (2024) recently proposed System 0 as an AI interface with what Daniel Kahneman (2012) calls the brain's two thinking systems: System 1 (fast, intuitive thinking) and System 2 (slow, reflective reasoning). This interface works like a bridge that enables humans to fluidly incorporate AI-generated insights into both intuitive and reflective processes. GenAI tools therefore differ from other physical or technological tools in the intimate, real-time impact they have on cognitive processes. They become an active component of cognition.

Figure 1. AI as a System 0 cognitive foundation for System 1 and 2 thinking (created with Canva).

At their core, both human and generative AI cognition are based on predictive mechanisms. Karl Friston’s (2010) predictive brain model is therefore useful for understanding collaborative human-AI cognition. At System 0, humans and AI minimize prediction error through iterative interaction. Humans use AI to help form or test predictions, and AI needs human input to generate predictions based on the language probabilities learned from its training data.

When cognitive bleed is positive, AI precision and computational capacity blend with human contextual grounding and competent evaluation. Here, competent evaluation is less about being tech-savvy than about having knowledge, wisdom, and critical thinking. This is why young people are typically not the most competent users of AI (again, see Gerlich, 2025).

In this framework, language is the "blood" of cognitive bleed. Ideally, it flows in both directions between humans and AI, allowing the strengths of each side to compensate for the weaknesses of the other. In applied linguistics terms, this interaction can be seen as a new kind of communicative competence in which humans interact with AI and strategically integrate it as a controlled component of their communicative repertoire.

A model of AI fluency as an expanded communicative competence

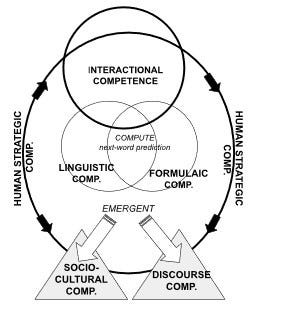

To better situate these interactional and strategic competences within a broader account of communicative ability, Celce-Murcia’s (2007) communicative competence model provides a useful, dynamic framework. Her model sets out the components of effective language use, and it can be applied to both humans and LLMs. It highlights how six interrelated components (linguistic, sociocultural, discourse, formulaic, interactional, and strategic competence) contribute to effective language use and communication:

Linguistic Competence is the mastery of grammar, vocabulary, phonology, and syntax.

Sociocultural Competence is the understanding of social norms, cultural references, and context that ensures appropriate language use.

Discourse Competence is the ability to connect ideas cohesively and coherently in extended speech or writing, like knowing how to structure a wedding toast or a five-paragraph essay.

Formulaic Competence is familiarity with the fixed expressions, idioms, and routines used in communication.

Interactional Competence is the ability to manage conversations, including turn-taking, signaling understanding, and negotiating meaning.

Strategic Competence is the use of communication strategies to overcome gaps in any of the above competences, like using body language or environmental cues and affordances to clarify intent or repair misunderstandings.

Humans develop these skills through embodied social experiences that enable real-time adaptation to diverse social and cultural contexts. Children tend to master the Linguistic and Formulaic competences of their mother tongue, but knowing the correct words, grammar, and phrases is not enough to communicate effectively. For example, Sociocultural and Interactional competences take longer to develop. They allow humans to use language appropriately in ambiguous or nuanced situations among strangers, friends, coworkers, and superiors. Discourse competence is placed at the center of this model as a communication-structuring competence, such as knowing how to structure an invitation to a party or a business email of enquiry. Finally, humans exhibit Strategic competence by using communication strategies like rephrasing or repetition to compensate for gaps in their other competences.

Figure 2. HUMAN model of communicative competence (Celce-Murcia, 2007; created with Google Drawings).

By contrast, large language models (LLMs) excel in Linguistic and Formulaic competences. They are trained on datasets containing trillions of words (or tokens), which allow them to assign probabilistic weightings to linguistic patterns and fixed expressions. LLMs take the user’s prompt as a starting point and draw on immense data and computational power to generate highly precise words, phrases, and grammar. This grammatical ability is not mystical; it is basically a function of patterns of words and word parts.

What’s interesting (and indeed a little mystical) is what comes next. Even with retrieval-augmented generation (RAG) and inference abilities, LLMs are still essentially probabilistic next-word predictors based on their training data. Yet they give rise to emergent, higher-order linguistic structures. Examples include sociocultural features such as register and formality, and coherent Discourse-level structures like essays and stories. In this sense, LLM Sociocultural and Discourse competences are bottom-up, emergent competences.

Since LLM chatbots are trained and fine-tuned to be useful to human users, they are especially good at using language to reply to human prompts. In other words, given their impressive ability to process and generate language, LLMs have a strong Interactional competence. However, it is one-way and limited to the passive role of responder. (This may soon change with more agentic LLMs.)

Finally, the quality of AI output depends on the intentionality, expertise, and context awareness of the human user interacting with it. For this reason, (current) LLMs have no Strategic competence. More precisely, the human becomes the LLM’s surrogate Strategic competence.

Figure 3. LLM communicative competence (created with Google Drawings).

So, while LLMs can produce discourse that appears coherent, by themselves they are lifeless, like a virus that needs a host to live. LLMs lack the goal-directed intentionality and context-dependent evaluative ability that humans provide to bring them to life. In this way, the AI System 0 is like a (language) virus requiring a human (language) host. In this complementary dynamic of cognitive bleed, humans contribute depth, context, and oversight, while LLMs enhance communicative fluency, structure, and formulaic accuracy. Together, they can form a hybrid communicative system that leverages the strengths of both human and AI capacities to achieve more effective and nuanced thinking and communication.

In this hybrid system, AI serves as a strategic competence that helps humans make predictions and overcome language or conceptual limitations by producing structured, coherent outputs and processing vast amounts of data. Conversely, the competent human AI user has the knowledge and communicative competence to formulate predictions (or the sprouts of predictions) in the form of a prompt. That user then evaluates the AI output using the contextual knowledge and intent that AI lacks. The human needs to start, refine, and finish the interaction. The human needs to manage the cognitive bleed. This is AI fluency.

Figure 4. Cognitive bleed model: Where human and AI language models merge (System 0) (created with Google Drawings).

Training AI fluency

Given the iterative and conversational nature of human-AI exchanges, I prefer the term AI fluency to AI literacy. It arguably better captures the interactive, dynamic, and linguistic nature of using AI and managing cognitive bleed.

Teachers and trainers will play a critical role in educating students and workers to develop AI fluency. This first requires an understanding that some tasks are better done independently, and others done with AI. Even after working over six months in her new marketing job, Chloe is entering “another part of AI learning—when not to use it”. For her, this means writing “short emails or messages myself more often and deliberately avoid[ing] AI.”

For us educators, this suggests AI training built on a rational, first-principles cognitive workflow. Such a workflow keeps the human in the loop and prioritizes human creativity, critical thinking, and independence when using AI. For example, I teach my students the acronym PAIRR, a generic workflow that clearly outlines the human contribution to the human-AI pairing:

P – Plan what you need, why you need it, and how to get it

A – Ask with a prompt

I – Investigate the AI output

RR – Revise output/prompt and Reiterate process if need be.

In this iterative AI workflow, genAI should be thought of as a sycophantic yet knowledgeable “intern”. It is a chatbot, after all, and is best used as a chatting partner to develop ideas and test predictions in a turn-taking conversation. And because it is happy to serve you and will do or say almost anything to please you, you need to be wary and give it enough information to produce a detailed answer.

The PAIRR interaction therefore starts with “P”: careful planning and thinking about the workflow. What is the human contribution, and what is the AI’s? This presupposes the analytic skill of knowing what knowledge you want and what its components are. In many ways, this is like making predictions or hypotheses and testing them, or using AI to help you make predictions if you are totally unsure. When you know what you want and what your role is, it’s time for “A”: asking AI with a prompt to test the hypothesis or your expectations. The next stage is “I”: investigating the AI output. This means reflecting on how well it matches the prediction and/or evaluating its usefulness. Finally, it’s time to revise the output and/or reiterate the process, if necessary, by going back to the Plan phase. Prediction error, surprise, and curiosity will guide this process.

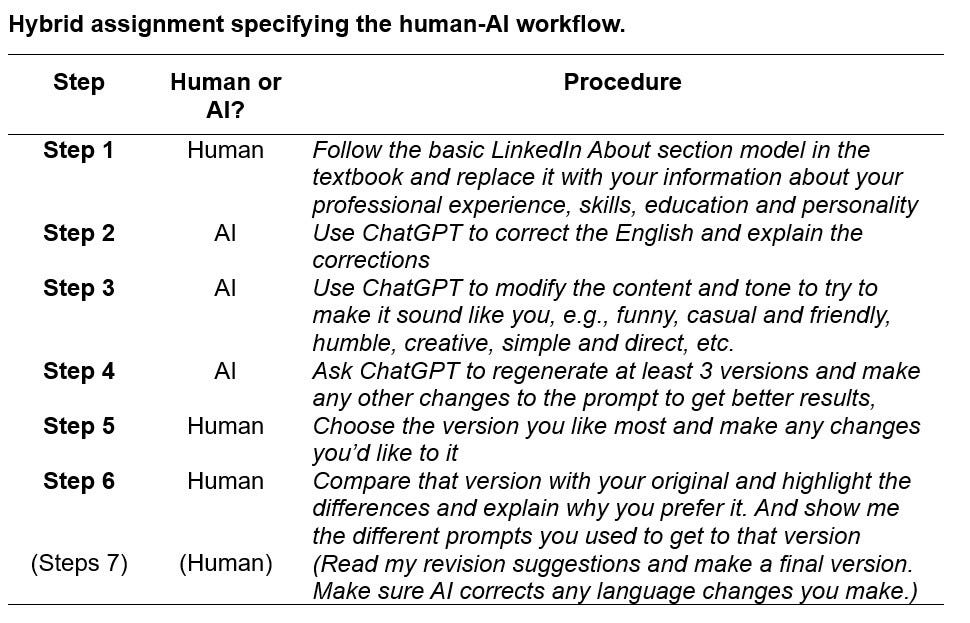

In my classes, I give my students an AI policy that encourages the use of AI tools as long as they tell me how they used them. As part of their AI training, one of my foundational assignments for my business communications students is to write a LinkedIn About section. The assignment follows a PAIRR sequence but adds another R at the end: Reflection. This hybrid human-AI assignment ends in Step 6 with a reflection task in which students tell me how and why they revised their original version with AI content and language.

This hybrid assignment serves as a model for future assignments in that it requires that students

Write their own first draft (Step 1),

With AI, correct their English (Step 2),

With AI, generate more ideas to make the first draft sound more like them or how they want to sound (Steps 3-4),

Revise their first draft with AI suggestions and submit it to the teacher (Step 5), and

Reflect on and explain AI suggestions that were used (Step 6).

In my English as a Foreign Language business communications course, the purpose of this assignment is to raise students’ metacognitive and metalinguistic awareness of their AI use and of the language they learned as a result. Students compare their original version with the AI versions to become aware of differences in writing quality, content, structure, logic, and lexical and grammatical errors. This metalinguistic reflection is part of the graded assignment. For me as a business communications and language teacher, AI becomes a writing tool that provides lower-order language feedback. This in turn frees me to give feedback on higher-order writing issues, such as coherence, priority and order of information, voice, tone, and style.

Student example: Reflecting on AI use

Here are some excerpts from the original and final drafts of Aria’s (not her real name) LinkedIn About assignment. She compared her original human-written version with ChatGPT revisions to create the voice, tone, and style she wanted to convey to recruiters who may read her LinkedIn profile. I highlighted the phrases ChatGPT added that gave the text the “humility” Aria had specified in her prompt.

As the final part of the assignment (Step 6), Aria wrote a full, single-spaced page, but here is a sample comment she added to describe the last paragraph in the above table:

“I tried to be humble to be connected with people, so I chose to use the words ‘honored to connect’ and ‘learn more about your opportunities’. In addition, I’m still showing my passion at the end, so I used words like ‘eager’, ‘passion’, and ‘drive’.”

ChatGPT boosted Aria’s Linguistic and Formulaic competences and gave her a selection of language aligned with the tone she wanted to convey. And since ChatGPT usually excels at matching appropriate language (vocabulary, phrases, and grammar) to a specified text genre, Aria’s Sociocultural competence was also boosted.

As for me, the teacher, I did not have to correct any language mistakes in this assignment. This meant I could focus on improving style and coherence: recommending different word choices, pointing out repetition, and clarifying certain ideas. This co-creation of Aria’s LinkedIn About section resembles Godwin-Jones’s (2022) vision of learners and teachers “co-creating with algorithmic systems”. However, I would prefer to recognize the key role of genAI in the learner’s workflow in more ecological terms: the combination of learner, genAI, and teacher becomes a “co-creating algorithmic system”.

Shifting language teaching in an AI age

Perhaps the critical questions in K-12, university, and corporate AI training are who needs AI, which tasks require it, and when and how it should be delivered.

For me in Taiwan teaching university graduates and corporate staff, I have come to the pragmatic conclusion that it is ok to let my students become dependent on AI to raise their English writing ability to an IELTS 9/9. As long as it is a controlled dependence.

Not all forms of dependence are necessarily bad. For most of my students, using ChatGPT as a System 0 language appendage is better than the alternative—never advancing their English. Never advancing their careers in international business or research. Never advancing their interests and hobbies in international contexts.

The truth is that AI can democratize English as an international language. It can level the playing field in areas from education to business to entertainment for billions of people who did not have the good fortune to grow up with English as a first language or in a country where English is an official language. My students are already adults, and it is unlikely any of them will have the time, financial resources, or desire to perfect their English. So those who need English to communicate in their professional or private lives are better off using ChatGPT-like tools to make their English as accurate as possible.

However, there is a crucially important caveat: we need to teach them controlled dependence and empowering workflows that keep them in control of AI and able to evaluate its output. In other words, we need to make people like Chloe aware of their limitations while increasing their AI fluency and instilling confidence in their use of AI.

Because of this, my teaching focus (and expectation) has now shifted to helping students understand how to structure communications for readability and persuasion. They should still learn what discourse or rhetorical structures and templates facilitate clear communication for emails, reports, CVs, presentations, and negotiations. They should still gain a sociocultural awareness of formality, voice and tone. That is, they should acquire this kind of declarative/theoretical knowledge about language as a pragmatic replacement for the procedural knowledge (mastery of language) that my students will never be able to attain.

Many foreign language students will never come close to mastering the linguistic and formulaic aspects of language. That’s why AI dependence can be good, in a controlled way. I can improve my students’ Interactional competence (prompting skills) with AI and have them draw on AI’s language mastery as a strategic competence. In this way, I can help them build up the other communicative competences that are more within their reach. Once they acquire theoretical knowledge of the discourse and sociocultural aspects of communication, they will be better able to prompt AI and evaluate its responses. My hope is that when they leave my classroom, they will be able to keep running the “co-creating algorithmic system”, just minus the teacher.

Although these speculations need to be tested over time, this seems to be the most efficient or realistic route to increasing AI fluency for many foreign language learners. And to managing the cognitive bleed over the long haul.

References

Celce-Murcia, M. (2007). Rethinking the role of communicative competence in language teaching. In E. Alcón Soler & M. P. Safont Jordà (Eds.), Intercultural language use and language learning (pp. 41-57). Springer.

Chiriatti, M., Ganapini, M., Panai, E., Ubiali, M., & Riva, G. (2024). The case for human–AI interaction as system 0 thinking. Nature Human Behaviour, 8(10), 1829-1830.

Daly, N. P. (2024). Cognitive bleed: Towards a multidisciplinary mapping of AI fluency. Paper presented at AI Forum, Ming Chuan University, Taipei, November 16, 2024.

Dergaa, I., et al. (2024). From tools to threats: a reflection on the impact of artificial-intelligence chatbots on cognitive health. Frontiers in Psychology, 15.

Friston, K. (2010). The free-energy principle: A unified brain theory? Nature Reviews Neuroscience, 11(2), 127-138.

Gerlich, M. (2025). AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking. Societies, 15(1).

Godwin-Jones, R. (2022). Partnering with AI: Intelligent writing assistance and instructed language learning.

Kahneman, D. (2012). Two systems in the mind. Bulletin of the American Academy of Arts and Sciences, 65(2), 55-59.

Shors, T. J., et al. (2012). Use it or lose it: How neurogenesis keeps the brain fit for learning. Behavioural Brain Research, 227, 450-458.

