Neurons to Networks: Bridging Human Cognition and Artificial Intelligence, Part 1

From the Human Brain to the Search for Intelligent Machines (A Collaboration with Alejandro Piad Morffis)

From Information to Knowledge

Greetings, Educating AI Community!!!

In 2024, Educating AI will continue unveiling its novel approach to seamlessly blending AI technologies into today’s writing classrooms, emphasizing the pivotal distinction between “information” and “knowledge.”

This method bridges the fields of literacy and computational studies. Here, "information" embodies dual roles: it is both the unprocessed data and facts addressing the questions of who, what, when, and where, and the foundational element in computing and digital systems.

Concurrently, "knowledge" represents not only processed and comprehended information that provides context and deeper understanding, answering the 'how' and 'why,' but also advanced models capable of adapting to novel problem types in entirely new areas, without the need for additional training.

The AI-responsive writing curriculum proposed above, one that recognizes and embraces the compositional and computational resonances of the foundational concepts of “information” and “knowledge,” will require a broader, multi-disciplinary approach.

Building the Educating AI Network

For this reason, I will be collaborating throughout 2024 with teachers, parents, students, rhetoricians, designers, and computer scientists as I develop this innovative instructional program. As I survey the AI-writing curricula currently available online and elsewhere, the offerings seem quite narrow to me.

Educators on the whole are still caught up in the debate about whether to let students use AI, when the conversation desperately needs to expand to a full consideration of the skills, competencies, and literacies students need in order to make effective and ethical decisions about AI use in particular contexts.

I readily admit that I, as an individual educator, do not have all the answers, but in my short time on Substack, I have built up a considerable network of collaborators and colleagues who can collectively bring you the most up-to-date, creative, and effective techniques and strategies as we here at Educating AI build a curriculum that meets the challenges and embraces the promises of Gen AI.

1st Collaboration Series of 2024

In Educating AI’s first collaborative series of 2024, we are building the theoretical foundation for an innovative AI-responsive writing curriculum by first exploring:

(1) The nature of the physical apparatus that drives learning in the human and AI context

(2) The nature of learning itself within the human and AI context

(3) The AI distinction between symbolism and connectionism and why it matters to computer science and education more generally.

In brief, our goal here is not to provide long-winded encyclopedic analyses, but pointed comparisons and contrasts with the explicit goal of refining instructional approaches and practices.

Remember that we are building a foundation here. [For more in-depth analysis, please check out Suzi’s new Substack When Life Gives You AI. She writes at the intersection between neuroscience and computational theory, and is seeking to answer many of the most complex and foundational questions about the nature of consciousness and AI. Another amazing resource is Daniel Bashir’s ongoing interview series at The Gradient podcast, where he regularly discusses these issues with thought leaders in the fields of cognitive science, philosophy, linguistics, engineering, and machine learning. We have a wealth of resources on Substack. Our only limitation is time.]

The goal is to distinguish between the knowledge-constituting learning process of the human brain and the data-generating learning processes of AI systems, and to discover methods for maximizing the hybrid spaces that emerge when humans interact with AI in a variety of contexts, such as code generation, writing, artistic creation, musical expression, and editing/formatting/publishing.

While Educating AI will predominantly focus on the hybrid skills of writing and composition, I want readers to realize that the general principles will be transferable to a wide range of domains and disciplines.

Alejandro Piad Morffis

Alejandro's repertoire is astounding: a highly accomplished computer science professor, a deft machine learning engineer, a visionary entrepreneur, a creative science fiction author, and an adept online community builder. But more than his professional accolades, it's the genuine humanity he brings to his endeavors that I find most admirable. His work is infused with an infectious energy and unwavering integrity.

I eagerly anticipate his insightful contributions on his various Substack platforms. His writings are a testament to his diverse experiences, profound knowledge, and philosophical depth. Unlike many in the tech domain, Alejandro's content stands the test of time beautifully. Here are links to Alejandro’s most prominent Substacks. I encourage my readers to dive in and explore.

Now without further ado: the nature of the physical apparatus that drives learning in the human and AI context.

Here are the highlights.

In this post, we will cover Sections 1 and 2. Look out for Sections 3 and 4 in the next installment of Educating AI.

Section 1:

Explores the brain's complexity, presenting it as a sophisticated organ essential for learning, underpinned by neurological, cognitive, and behavioral theories.

Section 2:

Chronicles the history and development of AI, detailing the transition from early computational theories to the advanced capabilities of modern Large Language Models.

Section 3:

Discusses the nuances and limitations of comparing artificial neural networks to the human brain, emphasizing the distinct yet complementary nature of human and AI intelligence.

Section 4:

Suggests a strategic approach for integrating AI into education, focusing on empowering teachers and students to leverage AI for enhancing knowledge generation and information processing.

Section 1: The Genius of the Human Brain

The Brain: Our Ultimate Learning Tool

At the heart of human learning lies our most sophisticated organ - the brain, intertwined with a complex nervous system. My perspective as an educational theorist and practitioner is grounded at the crossroads of neurological, cognitive, and behavioral learning theories and their instructional applications. A thorough and precise comprehension of the brain's intricate workings is vital - serving as a cornerstone for both philosophical musings and the practical crafting of educational methods.

The Brain’s Four Main Components

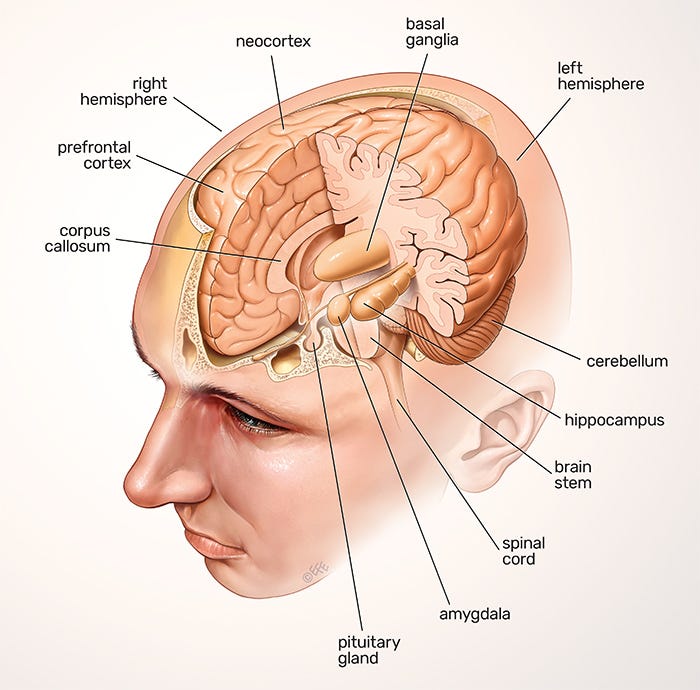

Source: The University of Queensland

Andrew Johnson eloquently termed the brain "our learning organ." It's crucial to view the brain as a system comprising interrelated parts that collectively form larger systems of function, response, and control. Our focus here will be on four primary brain regions: the brainstem, the cerebellum, the cerebrum, and the cerebral cortex.

The Brainstem: Our Primal Navigator

The brainstem, the most ancient segment of the brain, connects directly to the spinal cord and the broader nervous system. Often referred to as the reptilian or lower brain, its roles are fundamental, overseeing vital life-sustaining functions and automatic bodily responses that require no conscious thought. It's an essential part of our neurological toolkit.

The Cerebellum: The Conductor of Movement and Cognition

Despite its small size, the cerebellum is crucial for movement coordination, processing inputs from various brain regions, the spinal cord, and sensory systems to harmonize muscular and skeletal actions. Recent discoveries have illuminated its significant role in cognitive functions, affirming the theories of cognitive linguist George Lakoff and philosopher Mark Johnson about the interplay between spatial routines and higher cognitive processes, including metaphorical thinking.

The Cerebrum: The Seat of Complexity

Forming the bulk of the brain, the cerebrum is split into two hemispheres, linked by the corpus callosum. This region is the command center for higher-level functions like sensory interpretation, voluntary movement, cognitive skills (reasoning, problem-solving, planning), language, and emotional and behavioral regulation. The left hemisphere generally manages language and logic, while the right is more involved in spatial and creative tasks. This division underscores our advanced intellectual and analytical capabilities, from simple coordination to elaborate thought processes.

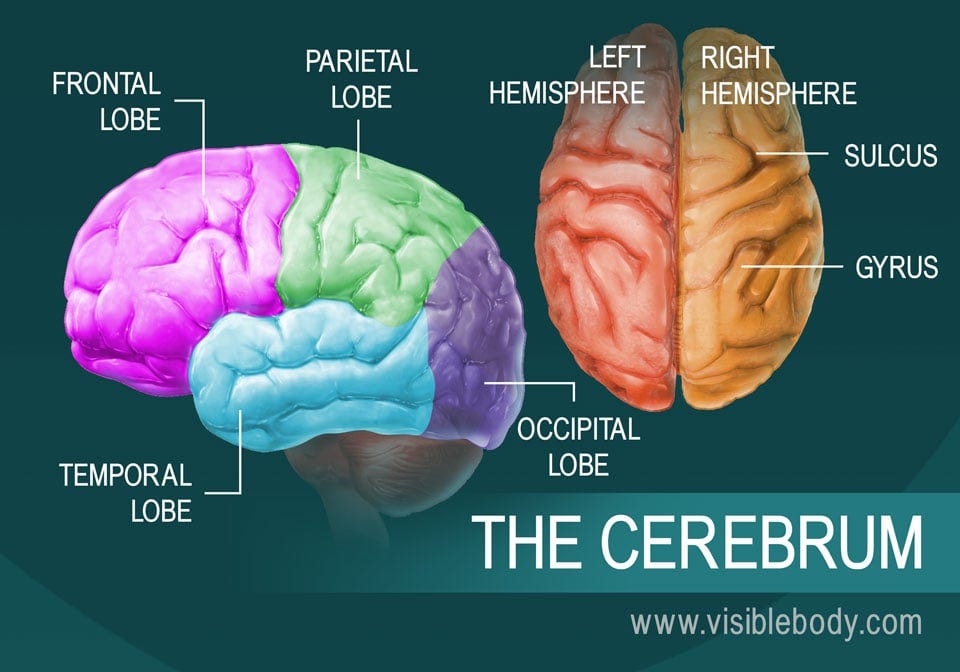

Source: The Visible Body

The Cerebral Cortex: The Epicenter of High-Level Functioning

Encasing the cerebrum is the cerebral cortex, recognized for its pivotal role in advanced brain functions and its unique gray matter. Its ridged, folded surface amplifies the area for dense neural networks essential for sophisticated processing. It comprises five lobes, each with specific functions:

Frontal lobe: decision-making, planning

Parietal lobe: sensory interpretation, spatial awareness

Occipital lobe: visual processing

Temporal lobe: language, memory, auditory processing

Insular lobe: emotional response, homeostatic regulation

The cortex is instrumental in cognitive processes, motor control, language, and memory, exemplifying the brain's evolutionary triumph in handling intricate tasks and information.

The Brain as an Integrated System

A key marvel of the brain is how its parts coordinate to form what neuroscientists call “an integrated whole.” On a macro level, the corpus callosum connects the hemispheres, facilitating communication between them. Recent studies have debunked the popular idea that a dominant hemisphere correlates with a specific learning style. On a micro level, the integration involves complex neuronal and synaptic interactions, showcasing the brain's remarkable neuroplasticity.

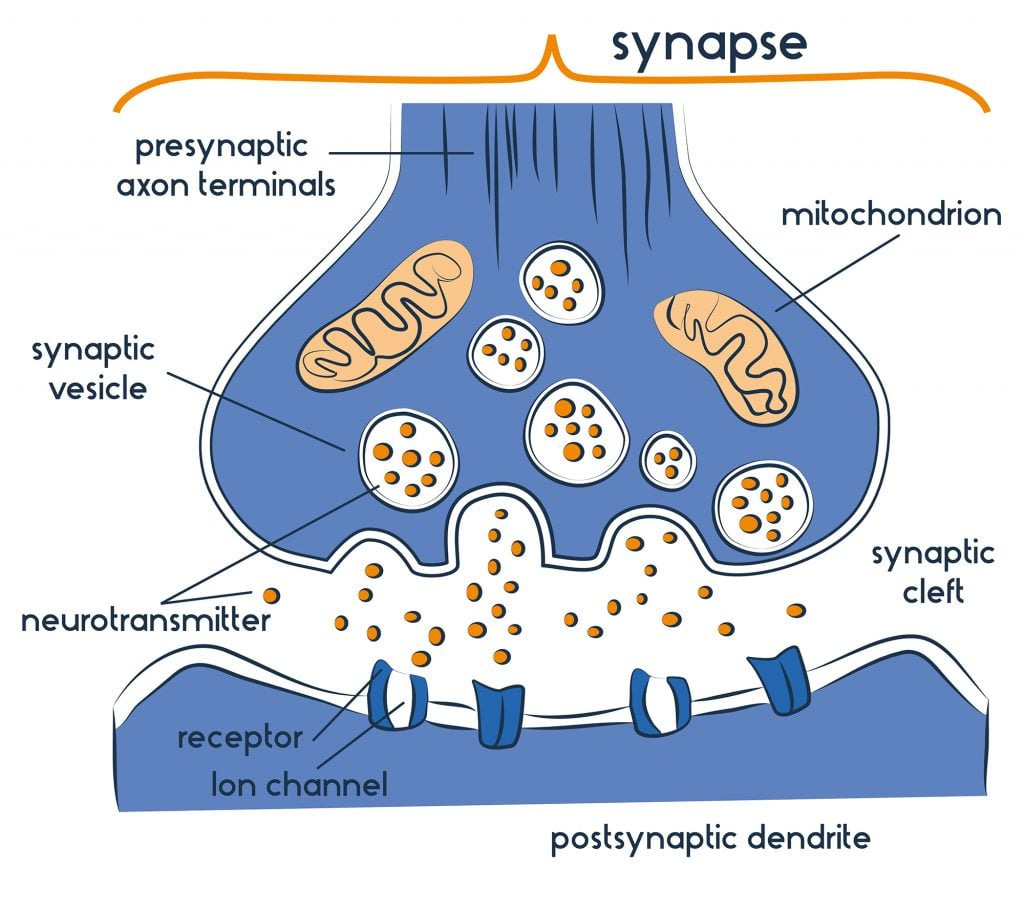

Building Neural Networks

Neurons, particularly abundant in the cerebrum, are the brain's elegant building blocks. Each neuron consists of:

Cell body: the metabolic hub containing the nucleus

Dendrites: receiving signals from other neurons

Axon: transmitting electrical impulses

Myelin sheath: enhancing transmission speed

Neurons connect at synapses, where electrical signals trigger chemical releases that carry the signal on to the next neuron. At any given moment a neuron either fires or does not. This all-or-none, seemingly binary nature of neural transmission has drawn parallels between brain functioning and computing.

Source: Simply Psychology

The Two-Way Information Flow

The brain operates through a sophisticated relay system for processing new sensory data. Sensory organs send signals to the thalamus, the central relay station. However, the brain doesn’t rely solely on this "bottom-up" approach. A "top-down" process also occurs, where the cortex sends information back to the thalamus, facilitating efficient pattern recognition and sensory interpretation. Brain-based educational theorist Andrew J. Johnson notes that this downward flow dominates: roughly 90% of the information travels top-down from the cortex to the thalamus, versus 10% bottom-up.

Final Thoughts: The Brain's Role in Perception and Learning

Our perception of reality is shaped by past experiences, which vary for each individual, leading to unique worldviews. Interaction with our environment not only triggers responses but also fosters the formation of new neural pathways and networks. Learning, therefore, is a transformative process that reshapes brain functionality.

Section 2: The Road to Artificial Intelligence

The Search for Intelligent Machines

The concept of designing intelligent machines by drawing parallels with the workings of the brain has a lengthy historical background. The first reference to the potential of intelligent machines in the context of computation is attributed to Lady Ada Lovelace, in her renowned paper describing Charles Babbage's Analytical Engine. This machine, which was never built, would have been capable of universal computation, analogous to a Turing machine. Lady Lovelace speculates in her paper that the machine could tackle complex problems, such as calculating Bernoulli numbers and other intricate computations, and even introduces the concept of subroutine calling. Despite her recognition of the machine's capabilities, she asserts that it could never possess creativity or imagination, characteristics unique to humans. Lady Lovelace's perspective is influenced by the Romantic worldview prevalent at the time, which upheld the supremacy of human intellect over that of any machine.

Source: “Ada Lovelace,” Getty Images

The Birth of Cybernetics

During the 1930s and 1940s, researchers including Norbert Wiener studied the functioning of neurons in the brain and developed mathematical models of neuron activation and of systems with intricate feedback loops. This exploration led to the formation of the field of cybernetics, initially interconnected with robotics and artificial intelligence but eventually diverging from them. The work revealed that simple components interconnected with feedback loops could give rise to complex behaviors, laying the foundation for the concept of emergent behavior.
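To make "mathematical models of neuron activation" concrete, here is a minimal sketch of a threshold unit in the spirit of the McCulloch-Pitts neuron from 1943. The text above does not name a specific model, so treating this one as representative is our assumption, and the function names and weights are purely illustrative.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of the inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], weights=[1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], weights=[1, 1], threshold=1)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

The same unit computes different logical functions depending only on its threshold, which hints at how simple components can be combined into more complex behavior.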

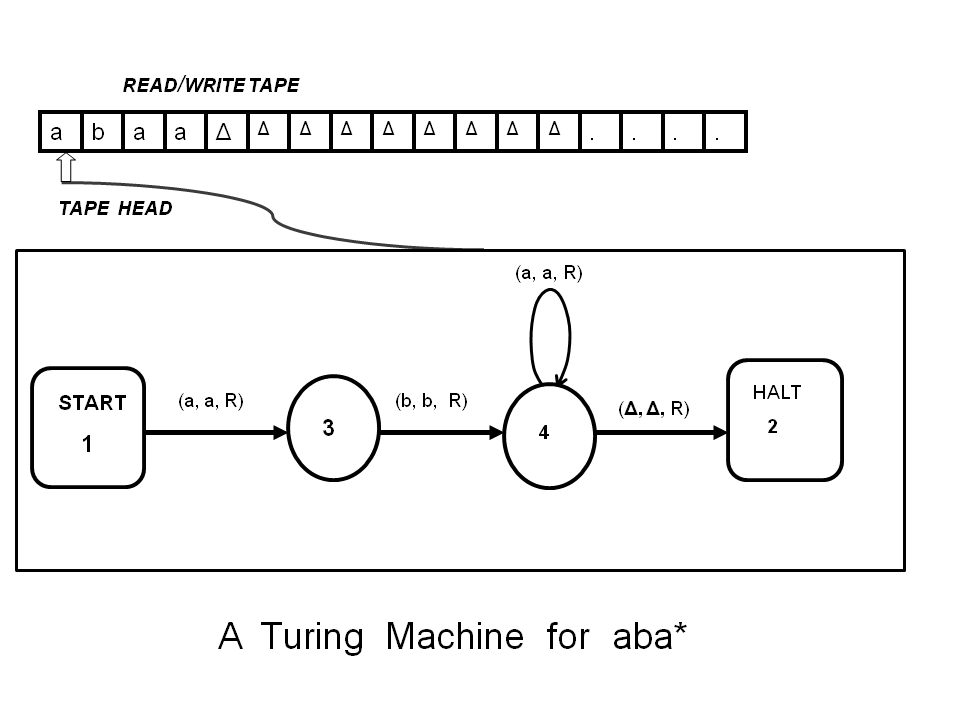

The Turing Test

Alan Turing made significant strides in the realm of artificial intelligence with his seminal paper "Computing Machinery and Intelligence," where he introduces the Turing test and outlines a functional definition of intelligence. Turing's perspective underscores that if a machine can pass the Turing test by being indistinguishable from a human in written conversation, then it can be regarded as thinking. He also alludes to the notion of machines learning as human babies do, starting with minimal knowledge and the capacity for substantial learning, and needing nurturing as human beings do. Additionally, Turing hints at processes simulating evolution, pointing towards the future development of neural networks and genetic algorithms, early concepts that would become integral to artificial intelligence. These forward-thinking ideas were already envisioned by Turing in 1950.

Source: Aesthome.org

2 Paradigms: Connectionism vs. Symbolism

In the 1950s, the development of artificial intelligence saw the emergence of two distinct paradigms. The Dartmouth conference in 1956, attended by prominent figures including Marvin Minsky and Claude Shannon, marked the inception of a program to create machine intelligence and gave the field its name, "artificial intelligence." From this point, two main paradigms emerged: the symbolic or logical paradigm and the connectionist or neuronal paradigm.

Symbolism 101

The symbolic paradigm postulates that reasoning primarily involves the manipulation of symbols according to specific rules, as in mathematical proofs, where explicit rules dictate how symbols are manipulated to arrive at inferences and theorems. This paradigm aims to embed these rules in computer programs to enable reasoning similar to human logical and mathematical reasoning. An early successful artificial intelligence program from the 1950s, the Logic Theorist, demonstrated the ability to prove theorems from Whitehead and Russell's Principia Mathematica, hinting at the potential automation of mathematical processes, despite theoretical limitations identified by Gödel and Turing.

Source: Marvin Minsky
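The idea of reasoning as rule-governed symbol manipulation can be sketched in a few lines. The example below is a toy forward-chaining engine, not a reconstruction of how the Logic Theorist worked; the facts, rule names, and the `forward_chain` function are all hypothetical and chosen only for illustration.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Each rule is (premises, conclusion); the symbols carry no meaning to the program.
RULES = [
    (("is_human",), "is_mortal"),
    (("is_mortal", "is_philosopher"), "writes_about_death"),
]

derived = forward_chain({"is_human", "is_philosopher"}, RULES)
print(sorted(derived))
```

Note that the program never "understands" the symbols. It only matches and combines them according to the explicit rules it was given, which is both the strength and the scaling problem of the symbolic approach.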

Connectionism 101

On the other hand, connectionism, rooted in cybernetics and the study of the brain, extends beyond neurons and posits that interconnected simple components with complex feedback loops can generate intricate behaviors and phenomena. This concept, observable in the brain, social networks, and societal systems like marketplaces and social animal behaviors, eliminates the need to explicitly represent symbols and rules for manipulation. Instead, it asserts that complex reasoning can stem from simpler components interconnected in specific ways, yielding symbolic reasoning implicitly through complex patterns of activation.

1st AI Winter

The evolution of these dual paradigms persisted throughout the development of Artificial Intelligence. The initial phase saw the rise of both logical, symbolic systems and neuronal systems. The first AI winter followed in the mid-1970s, brought on by the inability of single-layer perceptrons to compute basic functions such as XOR (exclusive or) and by the impracticality of scaling hard-coded rules in symbolic systems. As a result, AI development stalled.
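The perceptron limitation mentioned above can be reproduced in a short experiment. The sketch below trains a single perceptron with the classic error-correction rule on two tiny tasks: AND, which a straight line can separate, and XOR (exclusive or), which it cannot. The function names, the learning rate of 1, and the 20 training passes are our own illustrative choices.

```python
def predict(weights, bias, x):
    """Single perceptron: fire when the weighted sum is positive."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0


def train_perceptron(data, epochs=20):
    """Perceptron learning rule with a learning rate of 1."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            weights[0] += error * x[0]
            weights[1] += error * x[1]
            bias += error
    return weights, bias


def accuracy(data, weights, bias):
    correct = sum(predict(weights, bias, x) == target for x, target in data)
    return correct / len(data)


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND_DATA, *train_perceptron(AND_DATA))
xor_acc = accuracy(XOR_DATA, *train_perceptron(XOR_DATA))
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

The perceptron classifies all four AND cases correctly, but no amount of extra training gets it to a perfect score on XOR, because no single line separates the XOR cases.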

2nd Wave of AI: Revival of Symbolic Paradigm

The second wave of AI saw a revival of the symbolic paradigm, exemplified by expert systems. This resurgence is often referred to as the knowledge revolution: the focus shifted from coding pure logical reasoning to developing higher-level systems, such as expert systems tailored to specific domains like healthcare and science. It also marked the emergence of semantic networks and ontologies, which represent knowledge as graphs, with each node symbolizing a specific entity, such as a person, disease, or gene, and the connections between nodes representing semantic relationships.

Ontologies and semantic networks are symbolic models that draw from the connectionist approach, emphasizing the significance of connections and interconnected elements. Unlike neural networks, which do not predetermine the representation of each neuron and prioritize the overall learning process, ontologies and semantic networks explicitly define the meaning of each node and connection in the network.
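A semantic network of the kind described here can be sketched as a list of subject-relation-object triples. The example below is a minimal illustration, not a real ontology format: the `query` helper is hypothetical, and "Dr_Lee" is an invented person used only to show a node of that type.

```python
# Every node and every connection has an explicit, human-assigned meaning.
TRIPLES = [
    ("BRCA1", "is_a", "gene"),
    ("breast_cancer", "is_a", "disease"),
    ("BRCA1", "associated_with", "breast_cancer"),
    ("Dr_Lee", "is_a", "person"),  # hypothetical researcher
    ("Dr_Lee", "researches", "breast_cancer"),
]


def query(triples, subject=None, relation=None, obj=None):
    """Return every triple matching the given fields; None acts as a wildcard."""
    return [
        (s, r, o)
        for (s, r, o) in triples
        if subject in (None, s) and relation in (None, r) and obj in (None, o)
    ]


if __name__ == "__main__":
    print(query(TRIPLES, relation="associated_with"))
    print(query(TRIPLES, obj="breast_cancer"))
```

Unlike the weights of a neural network, each entry here can be read and checked by a person, which is exactly the contrast drawn in the paragraph above.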

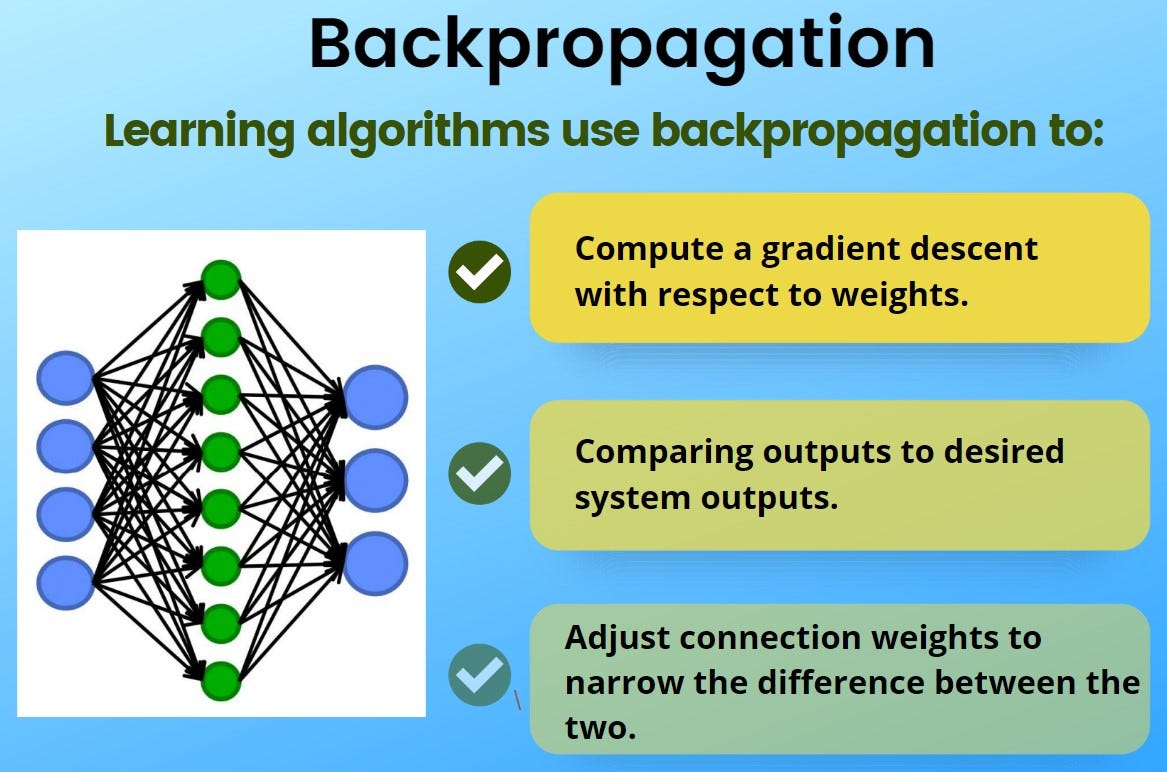

Connectionism on The Rise

Following the first AI winter, connectionism revived as well, through multi-layer neural networks and the backpropagation algorithm, popularized in 1986 by Rumelhart, Hinton, and Williams. This breakthrough allowed for the training of non-linear neural networks, addressing the fundamental challenge raised by Minsky and Papert. Theoretical advances showed that a sufficiently wide neural network with a single hidden layer can approximate any continuous function, and that adding a second hidden layer enables the approximation of even discontinuous functions with high precision. However, the number of neurons required grew disproportionately as the complexity of the function increased. This led to the realization that stacking more hidden layers could introduce greater non-linearity with fewer neurons, the insight needed for scalable implementation of neural networks.

Source: Technopedia
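A small example shows why a hidden layer matters. XOR (exclusive or) is the textbook function that a single threshold unit cannot compute, yet two hidden units feeding one output unit handle it easily. In the sketch below the weights are picked by hand for clarity, which is our simplification. Backpropagation is the procedure that would find weights like these automatically from examples.

```python
def step(value):
    """Threshold activation: fire when the input is positive."""
    return 1 if value > 0 else 0


def xor_network(x1, x2):
    # Hidden layer: one unit detects "at least one input on" (OR),
    # the other detects "both inputs on" (AND).
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    # Output unit: fire when OR is true but AND is not.
    return step(h_or - h_and - 0.5)


if __name__ == "__main__":
    for x1 in (0, 1):
        for x2 in (0, 1):
            print(x1, x2, "->", xor_network(x1, x2))
```

The hidden units re-describe the inputs in a form that a single output unit can then separate, which is the basic intuition behind stacking layers.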

2nd AI Winter

In the late 1980s, the second AI winter set in when the available resources proved inadequate to fulfill the objectives of the major funding programs for developing complex AI systems. Funding ceased, largely because computing power, manpower, and data were insufficient to scale and train neural networks. Both paradigms, connectionist and symbolic, failed to meet expectations due to scale limitations.

During the 1990s and 2000s, AI began to permeate various applications such as search engines, signal processing, and image recognition, albeit behind the scenes. Despite these successes, the AI field remained relatively subdued as a result of past unmet high expectations. Specific applications of AI, such as recommendation systems, collaborative filtering, and object recognition, garnered success, and early achievements in natural language processing, particularly in translation, were notable. The absence of grandiose claims about solving all problems characterized this era.

The Deep Learning Revolution

The deep learning revolution began in 2011-2012, marking a pivotal shift when computing power and data reached a level that enabled training of deep neural networks for tasks like image recognition. This revolution led to a significant performance leap in image recognition and prompted a widespread shift towards AI-based methods. Notably, the success of convolutional neural networks at scale reshaped the field of image recognition.

The trend of exploiting scale continues, with recent architectural innovations such as the transformer architecture revolutionizing language processing. This architecture facilitates the extraction of meaning from context beyond simple word or sentence processing. Additionally, techniques like dropout and batch normalization have enhanced the efficiency of training.
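The way a transformer draws meaning from context rests on an operation called attention, in which each word's representation becomes a weighted blend of the other words' representations. The sketch below shows scaled dot-product attention for a single query in plain Python. The two-dimensional vectors are made-up toy values, and real models use learned, much larger vectors and many attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy example: the query resembles the first key more than the second.
weights, output = attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 0.0], [0.0, 1.0]],
)
print("weights:", weights)
print("output:", output)
```

The context item most similar to the query receives the larger weight, so the output leans toward its value while still mixing in the rest of the context.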

Over the past two decades, the critical discovery has been that significant computing power and data scale make neural networks highly effective across numerous tasks. While experts anticipate a ceiling to this improvement, such limits have not been reached, and continued exploitation of scaling benefits is expected for several years.

Source: Finbarr.ca

Triumph of Connectionism?

The triumph of the connectionist approach, exemplified by the widespread success of deep learning, is evident, although the symbolic paradigm remains in use. For instance, the neurosymbolic model used in Copilot combines deep learning with symbolic models to generate code, demonstrating the ongoing interplay between the two paradigms. Moreover, successful approaches for enhancing language models involve combining neural networks with knowledge-based methods.

The overarching lesson is that neither the connectionist nor symbolic approach is the ultimate truth; their combination leverages their respective strengths, yielding the best outcomes.

Provisional Conclusion

A heartfelt acknowledgment goes to Alejandro Piad Morffis for his invaluable insights throughout this article. As we delve into the depths of our exploration, it becomes increasingly clear that the quest for artificial intelligence is a testament to the dynamic capabilities of human cognition and creativity. This inquiry, marked by intense debates and the challenges of theorizing it through rigorous foundational principles, underscores the significance of the distinction proposed at the beginning of our dialogue: the difference between knowledge generation and information processing.

This historical backdrop emphasizes that intelligence and the creation of knowledge, while facilitated by tools and methodologies, are fundamentally rooted in human connection and collaboration. Ahead of us educators lies the monumental task of creating methods that are effective, engaging, and equitable. The journey promises to be fraught with challenges, yet the extraordinary surge of creativity that has propelled the history of AI—and that now drives its swift evolution—contains the implicit promise that we can meet these challenges head-on. It is this creative force that is poised to undertake the necessary work, paving the way for both the current and next generations of students to thrive in an increasingly AI-integrated world.

Thanks for reading this article! Thank you for being a part of the Educating AI network!

Please share with us your thoughts and insights!

Look out for the 2nd part of this series in the next edition of Educating AI!

Alejandro Piad Morffis and Nick Potkalitsky

Oh man you're way too kind with that introduction 😊

Phew, what a deep-dive.

I'm sure I'll need another readthrough to grasp everything, but even learning about the existence of the two parallel paradigms (connectionist and symbolic) was a new thing to me. I enjoy that this isn't an either/or type of dichotomy and that the field benefits from embracing both paradigms to move forward.

Great to see two smart people collaborate on an ambitious project like this! Looking forward to the coming chapters.