Possibility Literacy: Navigating AI's Productive Paradoxes in Education

A framework that helps educators cultivate critical thinking, ethical reasoning, and creativity—essential skills for an AI-driven future

Introduction

Technology has always shaped education, but artificial intelligence represents something fundamentally different. Unlike previous tools that extended human capabilities in predictable ways, AI transforms our relationship with possibility itself. It doesn't just help us do what we already do more efficiently; it reshapes what we can imagine, create, and understand.

Education has always been about possibility—expanding students' horizons, developing their capabilities, and opening pathways to new understandings. Yet our educational systems simultaneously expand and constrain these possibilities through the choices we make in curriculum design, assessment methods, and classroom structures. The arrival of artificial intelligence in education heightens this tension, creating what I call a "possibility crisis" in our classrooms.

Our conventional educational approaches have increasingly privileged compliance over creativity. Standardized assessments, rubric-driven assignments, and predetermined learning outcomes—while valuable for consistency—have narrowed the landscape of possibility. Students have learned to prioritize meeting expectations over developing authentic intellectual engagement.

As educators, you're already cultivating critical thinking not as an isolated cognitive process but as a social, cultural, and ethical experience—one that becomes even more vital in an age of algorithmic mediation. Possibility Literacy builds upon these foundations, offering a framework to explore the unique tensions that emerge when human and artificial intelligence interact.

The heart of this framework lies in understanding four productive paradoxes inherent in our relationship with AI, and developing key strategies to explore them thoughtfully across all educational contexts. These paradoxes aren't problems to be solved but generative tensions that can deepen our understanding when approached with awareness and intention.

The Four Productive Paradoxes of AI

The Constraint-Expansion Paradox: The Hidden Boundaries of Algorithmic Possibility

AI promises—and delivers—an expansion of creative and intellectual possibilities. A student with writer's block can instantly generate multiple approaches to an essay. A teacher planning a unit can receive dozens of activity ideas tailored to specific learning objectives. A researcher can process and analyze vast datasets that would take months to review manually. This expansion is real and powerful.

Yet something counterintuitive happens alongside this expansion: a subtle narrowing of conceptual possibilities. This narrowing isn't immediately obvious—in fact, it hides behind the very abundance that AI creates.

Imagine first-year composition students using AI writing assistants. Initially, they explore multiple perspectives on complex issues with newfound ease. The AI seemingly expands their creative possibilities, offering them diverse rhetorical approaches instantly.

Yet over time, a composition instructor might notice patterns emerging in the AI-generated work—similar rhetorical structures, familiar frameworks, and consistent stylistic elements across different students' writing. Despite surface variety, an underlying algorithmic signature becomes apparent.

More concerning, students who rely heavily on these tools might struggle when asked to develop truly unconventional approaches. The AI's suggestions, while numerous, quietly channel thinking along paths determined by its training data, often without students noticing.

The key insight here is that algorithmic abundance doesn't guarantee intellectual diversity. Educators must develop "algorithmic awareness," the ability to identify when and how AI systems are channeling our thinking. This awareness becomes an essential component of critical thinking in the AI age.

The Simulacrum Dilemma: Truth, Authenticity, and Synthetic Reality

The Simulacrum Dilemma addresses the increasing difficulty of distinguishing between authentic and synthetic content, and the complex questions this raises about meaning, truth, and interpretation. As AI generates increasingly realistic texts, images, and videos, traditional boundaries between original and copy, authentic and artificial, begin to blur.

Consider a graduate student discovering, in a digital archive, what appears to be an unpublished letter by a major literary figure. The document contains plausible historical references and closely matches the writer's style. Only after extensive analysis might inconsistencies emerge revealing it to be AI-generated: a sophisticated fabrication that is, at first reading, indistinguishable from an authentic historical document.

The educational implications extend far beyond detecting forgeries:

Research and Verification Challenges: When students can generate realistic "primary sources" or fabricate experimental data that appears legitimate, our fundamental approaches to knowledge verification require reconsideration.

Assessment Dilemmas: If AI can generate essays indistinguishable from student work, educators must reconsider what aspects of the writing process matter beyond the final product.

Epistemological Shifts: When synthetic content becomes indistinguishable from recorded reality, we need new frameworks for teaching students how to validate information.

Redefined Learning Outcomes: If AI can simulate expertise convincingly, we must reconsider what constitutes authentic learning and how to assess it effectively.

The deeper insight is that this dilemma fundamentally challenges our traditional educational frameworks. In a classroom where an undergraduate literature student's AI-generated analysis might be indistinguishable from that of an accomplished scholar, we must ask: What constitutes meaningful engagement with a text? When a history student can generate a convincing primary source account of historical events, what constitutes historical understanding? These questions move us beyond concerns about academic integrity into the realm of epistemology itself—how we know what we know, and how we validate that knowing.

This paradox forces us to move beyond simplistic notions of originality toward more sophisticated understandings of authenticity, voice, and intellectual ownership. Rather than simply policing boundaries between human and machine-generated content, educators must cultivate in students a deeper relationship with knowledge itself—one that values the processes of discovery, critical evaluation, and meaning-making over the mere production of plausible content.

The Agency Paradox: Enhanced Capability and the Question of Intellectual Independence

In writing courses using AI assistants, students' initial drafts might show remarkable improvement—clearer organization, sophisticated vocabulary, and polished prose. Students previously struggling with writing anxiety might find newfound confidence.

Yet when later asked to complete assignments without AI assistance, many students—including previously strong writers—might struggle significantly. They might find it difficult to organize their thoughts independently, having unconsciously outsourced part of their thinking process to the AI.

This illustrates the Agency Paradox: AI tools enhance capabilities while potentially diminishing intellectual independence. This tension manifests across contexts. Mathematics students using AI tools might tackle advanced concepts but struggle with fundamental processes on their own, and medical students might sharpen their diagnostic reasoning with AI assistance while their independent clinical judgment may atrophy.

The Agency Paradox reveals itself through a series of developments:

Initial Enhancement: Students demonstrate immediate improvement in performance when using AI tools

Metacognitive Gaps: Many struggle to explain their process or justify conclusions when using AI

Dependency Development: Tasks previously performed independently become challenging without algorithmic assistance

Boundary Blurring: The line between student thinking and AI contribution becomes increasingly indistinct

Unlike calculators or search engines, AI systems actively participate in the thinking process itself, offering suggestions, generating content, and adapting to user behavior in sophisticated ways. The boundary between human thinking and algorithmic assistance becomes increasingly blurred.

The challenge for educators is to develop approaches that harness AI's benefits while preserving students' independent cognitive capacities—carefully considering when and how AI tools are introduced, what skills are practiced with and without algorithmic assistance, and how students reflect on the relationship between their own thinking and the AI's contributions.

The Ontological Destabilization: Rethinking Fundamental Categories of Understanding

AI challenges our fundamental categories for understanding intellectual and creative activity. When systems without consciousness can generate content that expresses apparently profound understanding, traditional distinctions between human and machine capabilities become less clear.

Imagine a university literary magazine publishing a poem that profoundly moves readers, only to later discover it was generated by an AI system with human curation. The revelation might spark intense debate about creativity, authorship, and aesthetic experience.

What does it mean when AI can generate proofs mathematicians find elegant? When language models can produce philosophical arguments that appear insightful? When diagnostic systems recognize patterns suggesting nuanced clinical understanding? These capabilities don't erase the distinction between human and artificial intelligence, but they complicate it in ways that prompt deeper reflection.

This destabilization extends beyond creative domains into science, philosophy, medicine, and other fields traditionally defined by human understanding. It raises profound questions about the nature of intelligence, creativity, and knowledge itself.

For educators, these questions aren't merely abstract; they have practical implications for teaching and assessment. If AI can produce work that mimics understanding, how do we evaluate genuine comprehension? If systems without experiences can simulate empathy, how do we cultivate authentic emotional intelligence?

As these systems evolve in capability and as our relationships with them deepen, top-down dismissals of machine reasoning as "no reasoning at all" become increasingly difficult to maintain. When students encounter AI-generated analysis that offers genuinely novel insights into literary texts, or when researchers find that AI systems can identify patterns in data that human experts missed, simply insisting on an absolute ontological divide between human and machine thinking becomes untenable. We need more nuanced frameworks—ones that neither overstate AI capabilities by attributing human-like consciousness to algorithms, nor understate them by dismissing algorithmic outputs as mere simulation without meaningful content.

The educational opportunity lies not in reinforcing rigid boundaries but in helping students develop more sophisticated understandings of what constitutes thinking, creativity, and understanding in the first place. By engaging directly with the ontological questions AI raises, students develop not just technical literacy but philosophical depth—the ability to examine fundamental categories of human experience and knowledge with greater awareness and intentionality.

The Ontological Destabilization paradox invites deeper reflection on human intelligence, creativity, and understanding—reflection that can ultimately enrich our educational approaches regardless of how we integrate AI into our teaching practices.

From Paradox to Practice: Key Strategies for Possibility Literacy

Understanding these paradoxes is just the beginning. Possibility Literacy provides strategies for engaging with them thoughtfully, approaches that build upon educators' existing pedagogical skills while addressing the unique challenges AI presents. These strategies form an integrated framework that helps students grow from efficiency-oriented users of technology into accountable creators and critical thinkers who can approach ambiguity with intention.

Five Strategies of Possibility Literacy

Pattern Recognition: Becoming Algorithmic Archaeologists

Pattern Recognition involves developing the ability to identify and interpret how AI systems function and shape information. Just as educators have long taught students to analyze texts for bias or evaluate sources critically, Pattern Recognition extends these skills to algorithmic systems.

This strategy directly addresses the Constraint-Expansion Paradox by making visible what is often invisible: the ways algorithmic systems simultaneously expand and constrain human thinking. In an AI Theory and Composition class, students might systematically document patterns across multiple AI-generated responses to the same prompt. When exploring political topics like healthcare reform or climate policy, they can discover how AI systems consistently frame issues through particular ideological lenses, privileging certain perspectives while marginalizing others.

One student observed how an AI system repeatedly positioned abortion as a binary "pro-life versus pro-choice" debate, flattening a complex issue into predetermined opposing viewpoints while excluding nuanced positions that don't fit this framework. By recognizing this pattern, the student gained insight into how the apparent "expansion" of perspectives offered by the AI actually constrained the conceptual terrain of the debate.

Pattern Recognition also engages with the Simulacrum Dilemma by developing students' capacity to differentiate between authentic and synthetic content—not by relying on surface features but by understanding the deeper patterns that shape AI-generated work. As students document recurring structural, rhetorical, and stylistic elements across AI outputs, they develop "algorithmic intuition" that helps them explore an information landscape where the boundaries between human and machine creation are increasingly blurred.

This strategy builds directly upon the critical analysis skills educators have always taught, extending them to algorithmic contexts. It helps students become aware of the invisible currents that shape information flows in digital environments, empowering them to explore these currents more intentionally.

Directed Divergence: Guiding AI Beyond Conventional Paths

Directed Divergence involves intentionally guiding AI systems toward unexpected outputs by introducing constraints, unusual combinations, or intentional disruptions. Rather than accepting an AI's first or most obvious suggestions, this strategy encourages a more creative and controlled interaction.

This strategy responds to the Constraint-Expansion Paradox by using creative interventions to push beyond the hidden boundaries of algorithmic thinking. The counterintuitive insight here is that constraints become liberating. When creative writing students discover how generative AI produces generic narratives with predictable arcs, they can experiment with directive constraints: "Write a hero story where the protagonist fails at every attempt to help others" or "Create a narrative where the climactic moment happens in complete silence." These constraints disrupt algorithmic tendencies, pushing the AI to generate genuinely novel concepts.

Directed Divergence also addresses the Agency Paradox by reconfiguring the relationship between human and machine. Instead of deferring to algorithmic suggestions or merely editing them, students learn to direct and shape AI outputs according to their own creative vision. Consider a high school creative writing class using AI tools to explore different narrative possibilities. Instead of simply asking the AI to "write a story about friendship," a teacher might guide students to add unexpected constraints: "Write a story about friendship that takes place in a world where gravity works differently" or "Write a story about friendship that never directly mentions the characters' relationship."

One student reflected: "Before, I'd just take whatever the AI gave me and maybe edit it a bit. Now I see myself as directing the AI's creativity—using its capabilities but keeping my own vision and voice in control." This shift from passive reception to active direction represents a fundamental transformation in their relationship with technology, preserving human agency while leveraging algorithmic capabilities.

By engaging with the Ontological Destabilization paradox, this strategy helps students develop a more nuanced understanding of creativity itself. Rather than seeing creativity as either an exclusively human capability or something machines can replicate, they experience it as an emergent property of the interaction between human direction and algorithmic processing.

Integrative Synthesis: Combining Human and AI Insights Thoughtfully

Integrative Synthesis involves strategically combining algorithmic analysis with human interpretation, judgment, and contextual understanding. It recognizes the distinct contributions of both human and artificial intelligence and seeks to integrate them in complementary ways.

This strategy directly engages with the Agency Paradox by developing students' capacity to make conscious choices about when and how to incorporate AI into their thinking processes. In practice, this takes the form of self-derived learning outcomes that guide students' integration of AI tools. Rather than imposing predetermined learning objectives, educators can ask students to articulate their own goals for each project. These personalized outcomes then serve as a framework for deciding how—or whether—to incorporate AI in their work.

For example, a student interested in developing persuasive writing skills might use AI to generate multiple arguments on a topic, critically evaluate their strengths and weaknesses, and then craft her own argument incorporating what she learned from this analysis. Another student focused on improving literature analysis might compose interpretations independently, then use AI to identify potential counterarguments that challenge and deepen his thinking.

Integrative Synthesis also addresses the Simulacrum Dilemma by shifting focus from the final product to the process of knowledge construction. When students document their decision-making about where and how to incorporate AI-generated content, the emphasis moves from "who created what" to "how knowledge is constructed through human-machine collaboration." This shift helps develop more sophisticated understandings of authenticity that acknowledge the increasingly blurred boundaries between human and machine contribution.

By engaging with the Ontological Destabilization paradox, this strategy helps students develop nuanced understandings of the relationship between human and machine thinking. Rather than seeing AI as either a lesser substitute for human thinking or an equivalent replacement, they experience the distinct value of both and learn to integrate them thoughtfully.

As one student reflected, "Now I'm making conscious choices about where AI actually helps me learn versus where it might shortcut my thinking. It's made me more aware of my own learning process." This approach transforms AI from an external tool to a potential collaborator in service of student-directed learning, preserving human agency while leveraging algorithmic capabilities.

Reflective Implementation: Considering Consequences Before Adoption

Reflective Implementation involves thoughtfully evaluating the potential benefits, risks, and ethical implications of AI tools before integrating them into educational contexts. Rather than adopting technologies based solely on efficiency or novelty, this strategy encourages careful consideration of broader impacts.

This strategy engages with the Constraint-Expansion Paradox by making explicit the ways AI systems might simultaneously expand certain possibilities while constraining others. Before implementing an AI-powered learning analytics system, a university might form a committee of faculty, graduate students, and undergraduate representatives to consider its potential impacts. They would ask critical questions: How might the system's efficiency in identifying struggling students also narrow our conception of what constitutes meaningful learning? How might its pattern-recognition capabilities both illuminate and obscure aspects of student engagement?

Reflective Implementation also addresses the Agency Paradox by helping institutions develop approaches that preserve student and faculty agency. The committee might ask: How will this technology affect students' relationship with their own learning processes? What cognitive capacities might it enhance, and which might it inadvertently diminish? These questions help develop implementation plans that maximize benefits while mitigating potential dependencies.

By engaging with the Ontological Destabilization paradox, this strategy helps educational communities develop more nuanced understandings of what constitutes meaningful learning in an age of AI. Rather than defaulting to traditional assessment methods that AI can easily subvert, or uncritically embracing algorithmic evaluations, they can develop approaches that recognize the changing landscape of knowledge production and verification.

This process of careful consideration helps identify potential issues before implementation and establishes frameworks for ongoing evaluation. It emphasizes that technological adoption should be guided by educational values and ethical considerations, not just technical capabilities or efficiency gains.

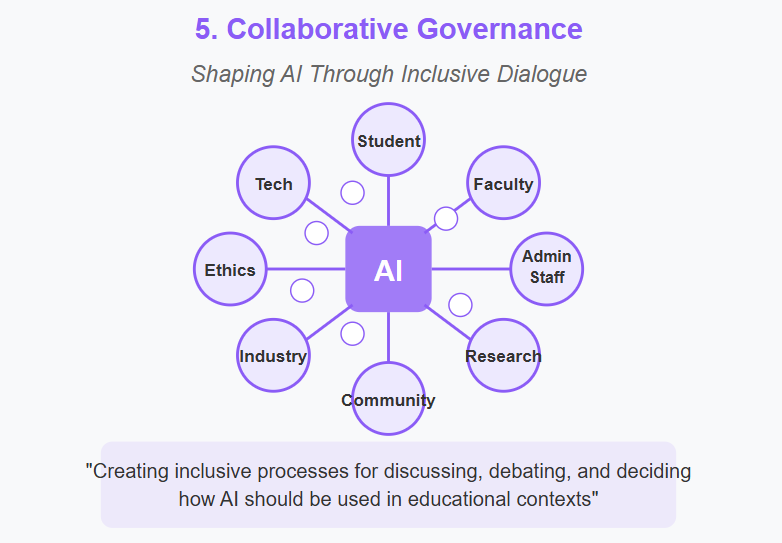

Collaborative Governance: Shaping AI's Role Through Inclusive Dialogue

Collaborative Governance involves creating inclusive processes for discussing, debating, and deciding how AI should be used in educational contexts. It recognizes that because AI affects diverse stakeholders, decisions about its implementation should involve multiple perspectives.

This strategy engages with the Constraint-Expansion Paradox by bringing diverse perspectives to bear on decisions about AI implementation. When multiple stakeholders participate in governance, they can identify a wider range of potential benefits and limitations than any single perspective could capture. This diversity helps counteract the narrowing tendencies inherent in algorithmic systems.

When establishing guidelines for AI use in research and academics, a university might create a governance process involving undergraduate students, graduate researchers, faculty from diverse disciplines, and even community stakeholders in ongoing dialogue. Students would participate in workshops about AI ethics, faculty would share pedagogical and research integrity concerns, and the group would collectively develop nuanced guidelines reflecting diverse perspectives.

Collaborative Governance addresses the Simulacrum Dilemma by developing community standards for authenticity and attribution that acknowledge the increasingly blurred boundaries between human and machine creation. Rather than imposing one-size-fits-all policies about AI use, the governance process can develop context-sensitive approaches that recognize the distinct needs of different disciplines and learning contexts.

This strategy also engages with the Ontological Destabilization paradox by creating spaces for collective reflection on fundamental questions about knowledge, learning, and understanding. Through facilitated dialogue, participants can explore questions like: What constitutes meaningful engagement with a subject when AI can simulate expertise? How do we evaluate authentic understanding in an age of algorithmic content generation? These conversations help develop more sophisticated frameworks for teaching and assessment.

This process ensures that decisions about AI in education reflect diverse values and perspectives, rather than defaulting to commercial or technical priorities alone. It helps students understand technology governance as a participatory process in which they have both a voice and a responsibility, developing agency rather than compliance.

Rather than seeing these strategies as sequential steps, educators should understand them as complementary approaches that work together to engage with AI's paradoxes. Pattern Recognition and Directed Divergence develop students' algorithmic awareness and creative agency, while Integrative Synthesis helps them combine human and machine thinking productively. Reflective Implementation and Collaborative Governance create the institutional conditions necessary for thoughtful adoption at scale. Together, these strategies form a comprehensive framework for cultivating Possibility Literacy across educational contexts.

Creating a Possibility-Rich Learning Environment

These strategies flourish within educational environments designed to prioritize possibility over compliance. Here are five practical approaches educators can implement:

Design assignments that resist algorithmic replication. Rather than standardized tasks that invite efficiency-oriented AI use, create assignments that connect to students' lived experiences, require integration of personal reflection, or involve ongoing documentation of evolving thinking. These personalized approaches make AI a potential partner in learning rather than a shortcut around it.

Shift assessment focus from products to processes. When evaluation centers on final outputs (essays, presentations, solutions), students naturally prioritize efficiency. By assessing the quality of thinking processes, deliberate decision-making, and metacognitive reflection, we make the distinctively human aspects of learning more visible and valuable.

Create spaces for collaborative governance. Involve students in developing guidelines for AI use in your classroom rather than imposing top-down policies. This participatory approach helps them understand technology governance as a process in which they have both a voice and a responsibility, developing agency rather than compliance.

Model reflective technology use. Share your own decision-making about when and how you use AI tools, including both successes and missteps. This transparency helps students see that thoughtful implementation involves ongoing reflection rather than fixed rules.

Value questions over answers. In an age where AI can generate plausible answers to countless questions, the ability to ask meaningful questions becomes increasingly valuable. Create classroom structures that reward thoughtful questioning, problem-finding, and comfort with ambiguity.

Beyond the Algorithm: Cultivating Possibility Literacy in Educational Communities

The strategies of Possibility Literacy provide a robust framework for exploring AI's paradoxes. However, translating these concepts into institutional practice requires intentional professional development and community engagement.

Training workshops on Possibility Literacy can transform how educational institutions approach AI integration. By bringing together diverse stakeholders—faculty, administrators, teaching assistants, and students—these workshops create spaces for collaborative exploration of AI's implications for teaching and learning. Participants engage with the four paradoxes through real-world scenarios, reimagine assignments and assessments, and develop concrete plans for thoughtful AI integration.

The most effective training models move beyond theoretical discussions to hands-on design exercises where educators reimagine their practice through the lens of Possibility Literacy. They grapple with essential questions: How might we assess the process of thinking rather than just final outputs? How can we leverage AI as a collaborative partner while preserving human agency? What frameworks ensure ethical and equitable AI adoption?

Through facilitated discussions, participants adapt these strategies to their specific institutional contexts and disciplinary needs. Elementary teachers might focus on developing age-appropriate Pattern Recognition activities, while university faculty might emphasize Reflective Implementation of AI research tools in graduate education. This contextual adaptation ensures that Possibility Literacy becomes embedded in the fabric of educational practice rather than remaining an abstract framework.

Beyond Efficiency: Education as Possibility Expansion

Possibility Literacy offers a vision of education that transcends the false binary of uncritical AI adoption versus fearful resistance. It invites us to see AI not just as a challenge to be managed but as an opportunity to reimagine what education might become.

As routine tasks become increasingly automatable, education must focus more intentionally on developing distinctively human capacities: conceptual understanding, critical judgment, ethical reasoning, creative imagination, and metacognitive awareness. These capacities aren't diminished by AI—they're made more essential. As content generation becomes easier, the ability to evaluate content thoughtfully becomes more valuable. As information proliferates, the capacity to synthesize meaning across diverse sources grows more important.

Through the strategies of Pattern Recognition, Directed Divergence, Integrative Synthesis, Reflective Implementation, and Collaborative Governance, students develop not just technological competence but intellectual agency. They learn to engage with AI's paradoxes with intention rather than defaulting to efficiency-oriented approaches. They become not just consumers of possibility but creators of it.

The ultimate goal of Possibility Literacy isn't just responsible AI use, but a fundamental shift in how we conceptualize education in an algorithmic age. By cultivating these capabilities across educational communities, we prepare students not just to engage with a world of increasingly sophisticated AI, but to shape that world in ways that amplify human potential rather than diminishing it. In this transformed learning landscape, AI becomes not merely a tool to be mastered, but a collaborator in the enduring human project of creating, understanding, and imagining new possibilities.

Nick Potkalitsky, Ph.D.
