Speedboats, Motorboats, and Tugboats: Navigating AI Integration in the English Classroom

A Reflection on a Year-Long Adventure in AI Integration and Implementation

I have just concluded a major milestone in my school calendar, and I thought I would use this as an opportunity to reflect on what I have learned from my students this year—in particular, watching them heavily experiment with different kinds of AI use in the context of a research-intensive senior English class at a private, college prep high school.

Partnering with AI Tool Providers

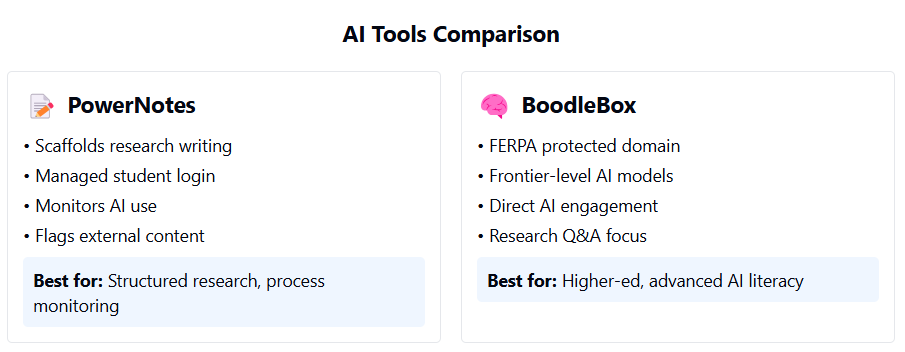

First off, I would argue that safe, equitable, and effective AI integration and implementation is impossible without a good partnership with AI tool providers. Choosing your tool provider should be a process you and your teaching team engage in thoughtfully and methodically. After careful consideration, knowing that we didn't want students to rely on non-FERPA-protected corporate frontier models, we worked closely with two tool providers: PowerNotes and BoodleBox.

My school is not yet ready to fully commit to a campus-wide or even a division-wide package. My courses serve as a pilot context for these two very different tools. PowerNotes offers a user space that scaffolds the research writing process, relies on student log-ins managed by the school and teacher, allows teachers to embed AI access inside different assignments and workspaces, and offers teachers the ability to monitor AI use. If schools choose to use PowerNotes as an LMS, the user space becomes a closed writing arena where any externally generated text gets flagged and any internally generated AI text gets designated as such. As a result, PowerNotes potentially makes separate process monitoring unnecessary.

First Semester: Controlled AI Integration

In the first half of the school year, my senior students engaged in the difficult work of analyzing the existing literature on a selected topic of interest and opening up a space for novel engagement with that topic. In years past, this work has followed the predictable pattern of annotated bibliography, project proposal (research question + fieldwork idea), and literature review. This year, we brought AI out of the shadows and made its processing a shared experience in our research process. An important reason this is possible is that students on my campus know that I know more about AI than they do. They know I am excited about its potentialities. They know I am mindful of the risks. They see in my policies that I am not a one-instance-of-AI-use-and-you-fail-the-assignment kind of guy. They have also seen me methodically set up my class early on in a way where I honor revision, I honor student voice, I honor process over product. With students and AI, classroom vibes are as important as explicit policy and practice.

In this context, we attempted to use PowerNotes for a number of different tasks. I began by creating pre-set prompts for students to load into the application's AI chat window to accomplish particular tasks. For instance, I taught students how to build a specialized research database by uploading several articles into a chat dialogue, and then gave students freedom to improvise different kinds of experiences: debates, research investigations, gap identification, literacy analysis drafting. The students then evaluated these different experiences in light of the emerging arc of their project. PowerNotes's embedded AI is a little stodgy. It really is designed as a research Q&A tool for the sources students are reading while doing research and writing. In a sense, we were working with the tool beyond its UX and design principles. The students found it quite frustrating, and I noticed a few students pushed some content quickly through a frontier model when I "wasn't" looking. Importantly, I had already given them the speech about turning off the data-sharing setting on all their frontier model logins.

The Three User Categories: Speedboats, Motorboats, and Tugboats

At this point in the semester, student workcycles fell into the three easily identifiable categories that we see so often when running initial AI training sessions: the speedboats, the motorboats, and the tugboats. The very few speedboat super-users blasted through these assignments, facilitated amazing experiences, and created incredible products, emerging from these workcycles refreshed and with a real sense of accomplishment. The motorboat average-users, who made up the majority, took a little time getting their boats up and running, but once the workflow became discernible and somewhat satisfying results emerged, they walked away from the process fairly, although not entirely, convinced of the efficacy of AI-human interaction. The small group of tugboat users, either suspicious of AI or unable to instrumentalize its mathematical patterns, worked very slowly through the assigned process, rarely reaching a satisfying outcome that encouraged them to persist with AI in the future.

This experience was very thought-provoking for me as a facilitator of learning. In part, we struggled with technological design; in part, we found ourselves confronted with gaps and opportunities arising primarily from previous experiences with technology. Some of our students bring with them a high level of confidence and a panoply of skills when it comes to new and emerging technology. They have access to these tools and are not afraid to explore them for both practical and pedagogical gains. Other students (and I confess a sympathy with this group, born of the long-term resistance to technological change that marked my early teaching career) have less access or feel less empowered to investigate and take advantage of new technologies. When asked to "experiment" or "improvise," they find that such experiences redouble their incipient doubts about their place in this AI-infused present.

Second Semester: Deepening AI Engagement

In the second semester, student research and our AI use entered their second phase of development. Again, students were allowed to use AI in a fairly unrestricted manner. I occasionally specified that certain pieces of writing needed to be completed by hand or by humans only, but very rarely. One of my keys to success, if we are measuring success in terms of students continuing to write a good deal with very little assistance from AI, is asking students to do the majority of their writing in class. At my school, I teach on a block schedule and so have extended periods with my students every other day. I use these to my advantage. Students don't feel as much of a rush to complete assignments outside of class. I emphasize the first-draft quality of most writing. If I want a more polished piece of writing, I give more time for its completion in class.

At this point in the year, students are deeply engaged in their fieldwork, testing hypotheses about their selected topic. During the fieldwork process, we onboarded with a new AI tool, BoodleBox, which assumes a certain level of AI literacy and offers students access to a range of frontier-level AI models in a FERPA-protected domain. I love working with the people at BoodleBox. Their product cuts out the middle-man scaffolding of the preset prompt buttons found in Khanmigo, SchoolAI, and Magic School, offering students the ability to engage more directly and creatively with AI. A teacher manages an account or workspace, and students have sub-logins within these accounts or workspaces. I have a feeling that, as currently configured, BoodleBox will be a preferable tool for higher ed, but I am hopeful that this company might revamp its space to offer a little more intentional landscaping or shaping to make it an even better fit for the 9-12 space.

Ethical Research and AI-Enhanced Protocols

In years past, the taxing nature of document generation during the fieldwork process has limited teachers' ability to build in a fully responsive ethical research protocol. Voluntary participation was just assumed. Ethical considerations about a study-in-progress were understood but understated. But with the advent of AI and my students' immersion in BoodleBox, a much fuller commitment to research protocols became possible for the senior capstone. At this moment, more students started to transition from motorboats to speedboats. As students considered the various requirements of ethically framed participation forms, surveys, interviews, etc., they became more engaged with AI output at the sentence level. Under the pressure of a real-world challenge, AI experimentation gave way to purposive workcycles focused simultaneously on efficiency and accountability to a larger objective. Part of me thinks that the drive to push efficiency out of the AI writing cycle is misguided. For the skilled users, efficiency and accountability function in a feedback loop serving a goal.

Reflecting on this experience, I am realizing how right Terry Underwood is when he insists on our wholesale re-embrace of authentic writing as a powerful pedagogical strategy in an AI age. If this seems far-fetched to my reader, start by assigning an I-centered narrative that focuses on a very specific kind of knowledge that only the student could possibly possess: memories of a first filmic experience, etc. Or collaborate with another teacher or instructor to create a research project that serves an immediate need of the school or campus. Amplify the moment's pressing needs. Engage students with one another around the work of a shared challenge. A pressing need does not function as a preventative against efficiency-oriented AI use; rather, if students are so inclined to use AI, it focuses efficiency-oriented use, hopefully, on something so specific and granular that human agency remains an essential part of the process moving forward. Here arises the skilled orchestration of the teacher-facilitator in terms of classroom culture (think vibes) and assessment expectations that call either for particular kinds of documented engagements with AI or for specific kinds of outputs involving metacognitive and critical thinking that exist productively inside the uncanny valley.

Final Stage: Going Full Cyborg

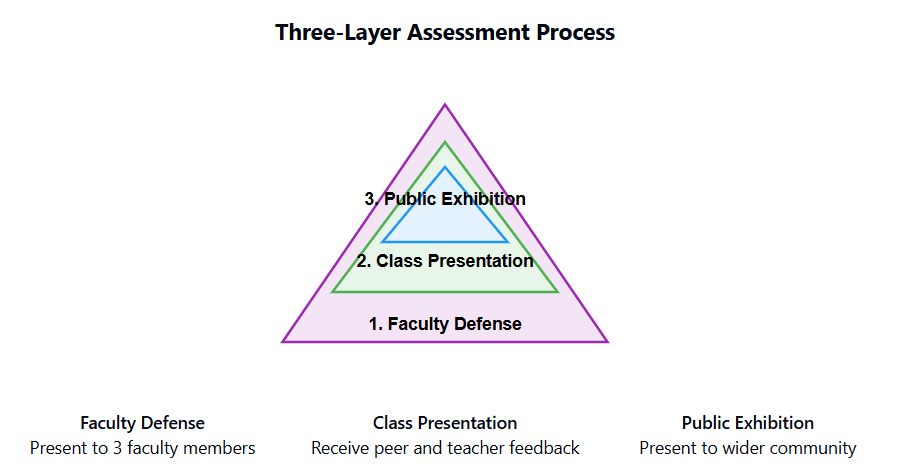

My students are now completing the third stage of their research, which involves oral presentation of findings to a defense committee, a class of peers, and the community at large, along with the composition of a final project (creative, analytical, and reflective) guided by individually determined learning outcomes. This stage is the site of the third, and perhaps most unpredictable, phase of their AI development. I am still not sure what is happening or how best to describe it, but I will take a shot at an initial description in this article. In the words of Ethan Mollick, my class has very much gone into full cyborg mode. All sorts of unique and interesting things are happening inside student workcycles. It is genuinely hard to tell sometimes where AI generation stops and human composition begins.

At this point in the semester, students have built up an amazingly in-depth cache of largely human-composed texts analyzing different aspects of their literature, fieldwork process, engagement with primary readers and outside scholars, etc. I have run a few workshops in class to show how powerful pre-existing materials become when input and skillfully engaged with inside an AI model. As part of this work, we have spent a lot of time watching the way AI models distort quotations and rush to see patterns in complex data sets. That said, my students' final projects—particularly those composed by my speedboat users—are demonstrating an amazing capacity to engage with AI—not as text generator—but as research assistant. Here, the key to success—if we define it as centering human thought and agency in the midst of automated analytical processes—is to encourage students to incorporate learning outcomes that involve commitments to develop a protocol for utilizing AI as a research tool—reading all the data first, sketching out initial thoughts, observing initial patterns, running different AI reports, analyzing reports closely in light of initial observations, coming to final conclusions. In future iterations, I would love to scaffold this process more, but the early results are quite promising.

In the meantime, my motorboat and tugboat students continue to carry out their research along more traditional pathways. The final products are not as polished or as expansive, but the analytical core and agency that we wish to be present in all large project work are readily apparent. These students have set a path for themselves in their learning outcomes and feel satisfied at having pushed themselves to their limits on a very compressed schedule.

Assessment Through Oral Presentations

You may be thinking at this point—how naive is this guy, none of these kids are actually doing any of the work? But before you go any further, hypothetical resistant reader, let me describe to you the third leg of my assessment process: 3 layers of oral presentation. First, students gave a presentation of their findings to a group of 3 faculty—I sat in on about 10 of the 41 of these defenses due to lack of teacher/staff availability. In many cases these presentations were quite rough. During these presentations, we really got to see where the real thinking was taking place—not in a definitive way—but it was evident in some instances that overreliance took place—or lack of engagement. Honestly, it is really hard to distinguish one from the other these days.

The key to success in this phase, defined as pushing students to claim their own agency in the face of vast temptations to over-rely on automated processes, is to deeply engage with what students bring to the table. If you let them strike out and don't give them input on their swing, they will just continue to do the same thing over and over again. You will communicate that you don't care, that what they are doing doesn't matter. Disengage or over-rely. Whatever. But here is where good teaching trumps overreliance 9 out of 10 times. Students take notice when you are engaged with their work and thought process. And knowing that they eventually need to stand in front of a public audience as an expert creates a crucible that exposes intellectual slippage.

Now the real magic started to happen during the second round—students' graded presentations. At this point, they presented to their class and me in order to receive an additional round of input on their presentation-in-progress. At this point, my commentary became even more focused on analytical process, source citation, and overall rhetorical impact. Implicitly, I am helping students clear a path for resolution of their commitments to their final project—in the throes of a complex large project, individual assignments do not function in a linear path toward a goal, but rather spiral into a feedback nexus, mutually reinforcing and refining each other, not around a final product, but around an evolving thought process. Ideally, what we hope to see happen, and what was evident—much more so than in any of their written products—was an intellectual reaching past the concrete known elements of an inquiry into a space as of yet unresolved. You push and push—AI creates efficiencies of flow and amplifies engagement—individual choice creates unpredictable pathways towards very specific goals. The teacher-facilitator observes more than engages. And by the end of the work primarily serves as audience member—learning from the student.

Now admittedly, a small subset of my students only went through the motions. They were resistant to feedback throughout the process. And thus, they only caught a glimpse of the idealized process I just described. But it is my conviction that to be part of a community where such engagement is possible is in itself a thing of immense value. And that these students can continue to build on their modest gains as they develop courage and experience in their future endeavors.

Grading Challenges and Final Thoughts

Ultimately, grading such an event and experience is quite hard. If I am being honest, I give a lot of completion credit. For instance, everyone received full mastery outcomes on their final graded presentation. The point was to complete the process and get the feedback. I am not sure how I will grade the final projects. The students designed their individual outcomes, but even there, only some students excelled, even after all of them received two or three rounds of detailed feedback, in capturing an essential, measurable skill in precise language.

As of right now, I am waiting to see the final presentation of one student who unfortunately could not attend on the evening of capstone night.

Lots to ponder. Not much time to figure it all out. Final grades are calling.

Nick Potkalitsky, Ph.D.

Check out some of our favorite Substacks:

Mike Kentz’s AI EduPathways: Insights from one of our most insightful, creative, and eloquent AI educators in the business!!!

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!!!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection among computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s Mostly Harmless Ideas: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Riccardo Vocca’s The Intelligent Friend: An intriguing examination of the diverse ways AI is transforming our lives and the world around us.

Jason Gulya’s The AI Edventure: An important exploration of cutting edge innovations in AI-responsive curriculum and pedagogy.