The Adaptation Trap: What Our Internet Past Tells Us About Our AI Future

You know a change has happened when you no longer notice the change.

The magnitude of a change cannot be really understood until that magnitude is no longer noticeable—or appreciated. I first experienced this phenomenon in the area of technology transformation in the early 2000s. I had just graduated from college and moved to the East Bay. When I secured an apartment on 6th St. in Berkeley, my friends and I effortlessly, without thinking, set up our internet connection alongside our other utilities.

Photo by Kelly Sullivan


The internet was just becoming mainstream when I was at Oberlin College in the late 1990s. On the college tour, the tour guide boasted that all dorms were "connected." We students dreamed of cyber freedom apart from the watchful gaze of our parents.

Photo from Oberlin Dorms Guide

And yet, when I went home from college, I didn't rely on the internet to shop. I definitely didn't stay in contact with friends via the internet or its first inklings of social media. The phone—and dare I say letters—were still my primary method of communication. In this rather extended analogy, the change in process was just that: still very much in process.

But by the time my friends and I moved into our Berkeley dump, the fix was in. The internet had become a primary means of cultural connectivity, marketing and economics, news and media, research, and in particular everyday communication via email, instant message, and the burgeoning social media.

Image from Old Internet Junk

If I'm remembering correctly, by that time the shockwave weirdness of my first few encounters with internet chat spaces had long faded. Instead, there opened up a space where my friends and I—when not just existing in the normalcy of this radically altered cultural landscape—began to have deep conversations about the meaning of it all. As I pursued my several graduate degrees in the late 00s and throughout the 10s, these conversations came into clearer focus.

Or, more accurately, they fractalized into dispensations depending upon the context of the user/analyst. Here the label is strategic, for in the wake of major technological changes we are all users/analysts/critics. We are all implicated in the change. No one can really judge from the stance of true non-use or non-integration. Things are just too networked. Ultimately, our free choices themselves are similarly too networked.

If you have ever experienced a graduate program in the humanities, you know that critical dispensations multiply like flies on a dying carcass. But underneath their myriad swarm, conversations tended to focus on the internet as a system of capital, as a formation of power, as a tool for shaping and negating identity, as a post-humanist linguistic artifact, as a simulation of experience, and as a rhetorical situation or system.

Each explanation taps into something vital and important about the internet and the culture that has exploded from its manifold layers of experience. And yet even collectively they miss something crucial about the lived reality of relying on the internet for something as small as checking a sports score or as momentous as making a down payment on a future home. The internet, whether we like it or not, is more than a tool. It has become an extension of the human corpus.

The magnitude of a change can only be really understood after it has become normalized.

Stepping back from this observation, you might never have thought that human beings could get used to a hyperobject like the internet (to use Timothy Morton's term for an entity that exists on a multi-dimensional plane and operates through unrecognizable or uncertain systems of agency), or that living with one could ever become normal.

And yet it has.

And this brings us to AI.

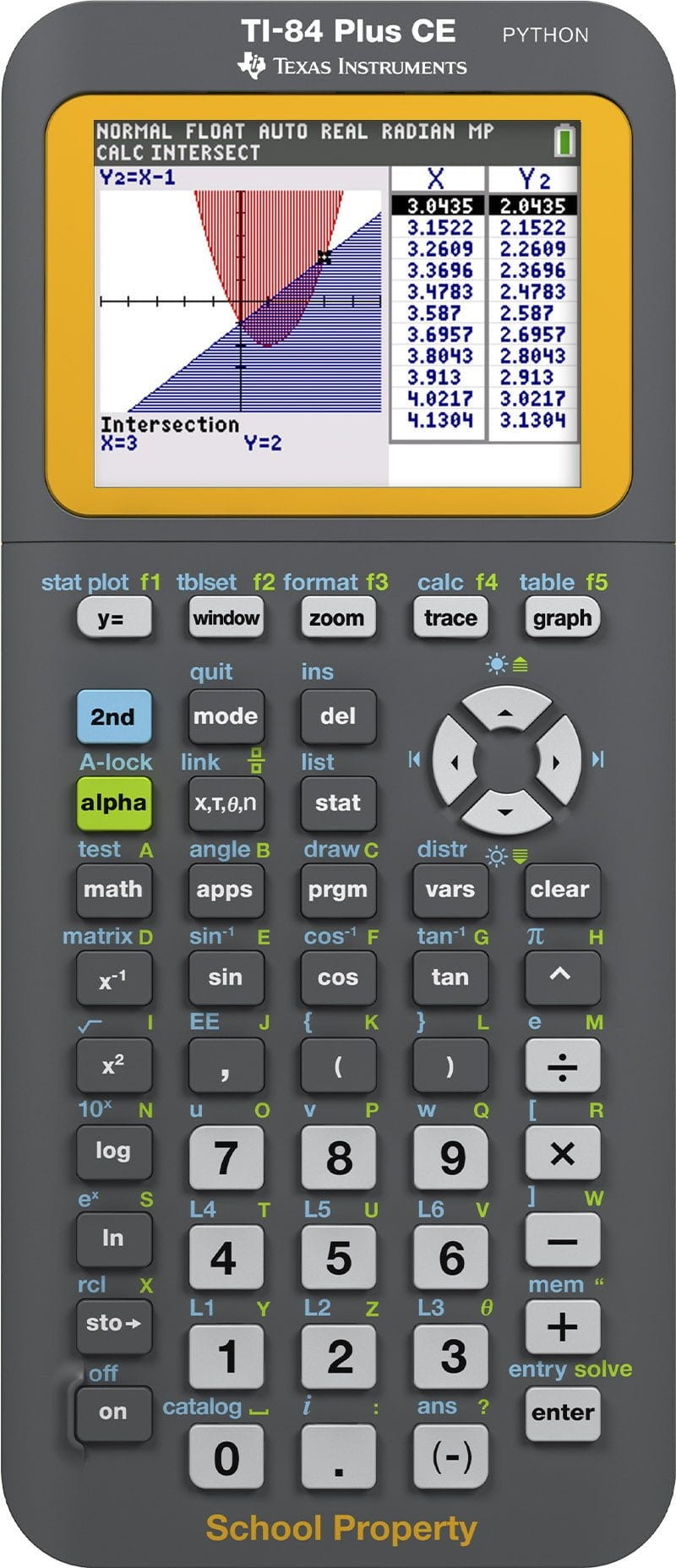

We educators first tried to grapple with the AI disruption through the analogy of an earlier technology: the calculator. In its most popular version, AI was compared to the calculator—a tool that helped students with the grunt work of mathematics in order to open up vistas for higher-order thinking. In the blogosphere, writers like myself debated the relative merits of this analogy, eventually moving on to other topics as the models advanced and improved.

Ironic Midjourney Image of Calculator with Incorrect Numerals

I think if I were to revisit this particular analogy, I would liken AI to something more like a graphing calculator. The initial versions of the analogy, in which the calculator was a tool of efficiency that assisted with basic mathematical and algebraic functions, are out of sync with the reality of AI tools and thus make for a false analogy.

In reality, as we are learning anecdotally and from the first round of research studies, AI when properly integrated extends thinking about the core skills and competencies of the writing process. It also opens up synergistic spaces for true collaboration between AI applications and human resources, as is the case when a student is deeply immersed in the tool-being of a graphing calculator.

So I have noticed recently that the internet is increasingly becoming the new metaphor of choice for anyone in search of an analogy to explain the complexity of AI disruption. I for one find it a fascinating choice, having lived through the analogous situation in question. I also find it interesting that this analogy is becoming popular just at the time when the initial shock of AI's disruption is subsiding.

You know a change has happened when you no longer notice the change.

People are reaching for this analogy now, in a period much like the one my friends and I entered back in Berkeley in the early 2000s: a space in which it was just becoming possible to begin understanding the magnitude of a change.

In a way, the AI-as-internet analogy is an analogy of stability. Indeed, the majority of people who use this analogy intend it to assuage worries about the short-term threats and concerns we should have about AI as a consumer product, cultural force, and educational tool. According to the implicit narrative, we eventually acclimated to the internet, so we will acclimate to AI as well.

What this convenient narrative leaves out is identity theft, the collapse of small and local businesses, the rise of social media, the increasing quantification of personal life, and so on.

But analogies are also selective and have to be in order to function as analogies.

From Analogies in Scientific Thinking

The thing about analogies is that they work until they don't, and they stop working precisely at the moment when we most need them to make sense of things. When we say AI is like the internet, we're really saying: don't worry, we've been here before.

But maybe that's exactly why we should worry. Because the internet didn't just change what we do—it changed who we are, how we think, how we love, how we remember.

My friends and I in that Berkeley apartment couldn't have imagined Instagram, or Twitter, or the way smartphones would become extensions of our bodies. We couldn't have predicted the way the internet would reshape not just our tools but our minds.

You know a change has happened when you no longer notice the change.

But there's something deeper here: you know a change has happened when you start using it to explain other changes, when it becomes the lens through which you understand all other transformations.

The internet analogy for AI isn't just an analogy—it's a confession of how thoroughly we've been transformed, how completely we've forgotten what it meant to be human before. And maybe that's what we should really be talking about when we talk about AI: not whether it will change us, but whether we'll maintain enough of our pre-AI selves to even recognize the transformation after it has taken place.

Nick Potkalitsky, Ph.D.



Good post. I wonder how much is a matter of “forgetting”, versus the inevitable cultural change of the shifting in generational experience? I’m about the same age as you, so my “post-internet” life is now eclipsing the duration of my “pre-internet” experience. For generations after us, they haven’t even known a pre-internet life to forget.

My children are growing up in a world where you can ask AI any question at any time and get an immediate answer. How will this change shape their minds?

As potent as AI is, I’m not sure it will cause the same social change as the (mobile) internet. AI collapses the long standing human process of seeking information into an immediate request/response. But connecting a human to every human in the world, at all times, and with an emphasis on consumption… feels more disruptive to human nature.

I guess we will see 😬