The Hidden Curriculum of AI Interactive Spaces

Schools need a systemic approach to introducing AI interactive spaces, one grounded in AI literacy frameworks and standards!


You've heard it in faculty meetings, at parent-teacher conferences, and in hurried conversations between classes: "Our kids are living on ChatGPT." "The district just bought MagicSchool for every teacher." "My students say AI explains math better than I do." Many schools are now rushing to deploy AI interactive spaces, and most haven't stopped to ask what students are actually learning from these seamless conversational experiences.

School districts across the country face a critical decision this year: whether to activate AI interactive spaces for students. Platforms like MagicSchool and SchoolAI promise compelling solutions: pre-built subject bots, customizable learning environments with multimodal capabilities, and conversational experiences that feel like having a knowledgeable tutor available 24/7.

But behind the sleek interfaces lies a more complex reality. These tools teach students lessons no one intended.

It's Not Actually Like Google

Most educators approach AI with a familiar framework: "It's just a new way to search for information." This comparison feels intuitive. Students ask questions; they get answers. Done.

The comparison breaks down under scrutiny.

When students Google the causes of World War I, they see multiple sources: BBC History, Encyclopedia Britannica, a professor's lecture notes, a homework-help forum. They learn to evaluate credibility, cross-reference claims, and navigate competing perspectives. The process is transparent: click a link, see where the information comes from, and decide whether to trust it.

AI interactive spaces work differently. Students ask about World War I and typically receive a single, confident response. Often no sources are visible. No competing perspectives are offered. There is no easy way to trace where claims originated or to evaluate their reliability.

The training data biases, prompt dependencies, and algorithmic processing that shape every response remain completely invisible to students. They don't see the contextual filters determining what information gets synthesized and how it gets presented.

This invisibility creates what we might call a hidden curriculum: lessons students absorb without anyone explicitly teaching them.

What Students Learn (That No One Intended)

When students engage with AI interactive spaces designed for seamless conversation, they develop concerning patterns:

AI as Authority. The confident, immediate responses position AI as an expert who "knows" answers. Students don't understand they're interacting with a prediction system making educated guesses based on patterns in training data. They experience AI as a knowledgeable teacher, not as sophisticated autocomplete.

Cognitive Outsourcing. Complex thinking becomes something to delegate rather than develop. Why struggle through a challenging math concept when the AI tutor explains it more clearly than any human teacher? Why wrestle with organizing essay arguments when AI can structure them instantly?

Instant Gratification Over Productive Struggle. Real learning often requires confusion, frustration, and the gradual building of understanding. AI provides immediate clarity that can short-circuit essential cognitive development. Students learn to expect instant comprehension rather than working through difficulty.

Artificial Relationship Over Human Connection. Perhaps most concerning, some students begin to prefer AI interaction over human relationships. AI never gets frustrated, never has a bad day, and never challenges them in the uncomfortable ways that promote growth. It provides unlimited validation without the complexity of a genuine relationship.

Recent polling reveals the stakes. Seventy percent of parents oppose giving AI access to student data, while 85% believe schools should teach responsible technology use. Communities expect AI literacy, not just AI access.

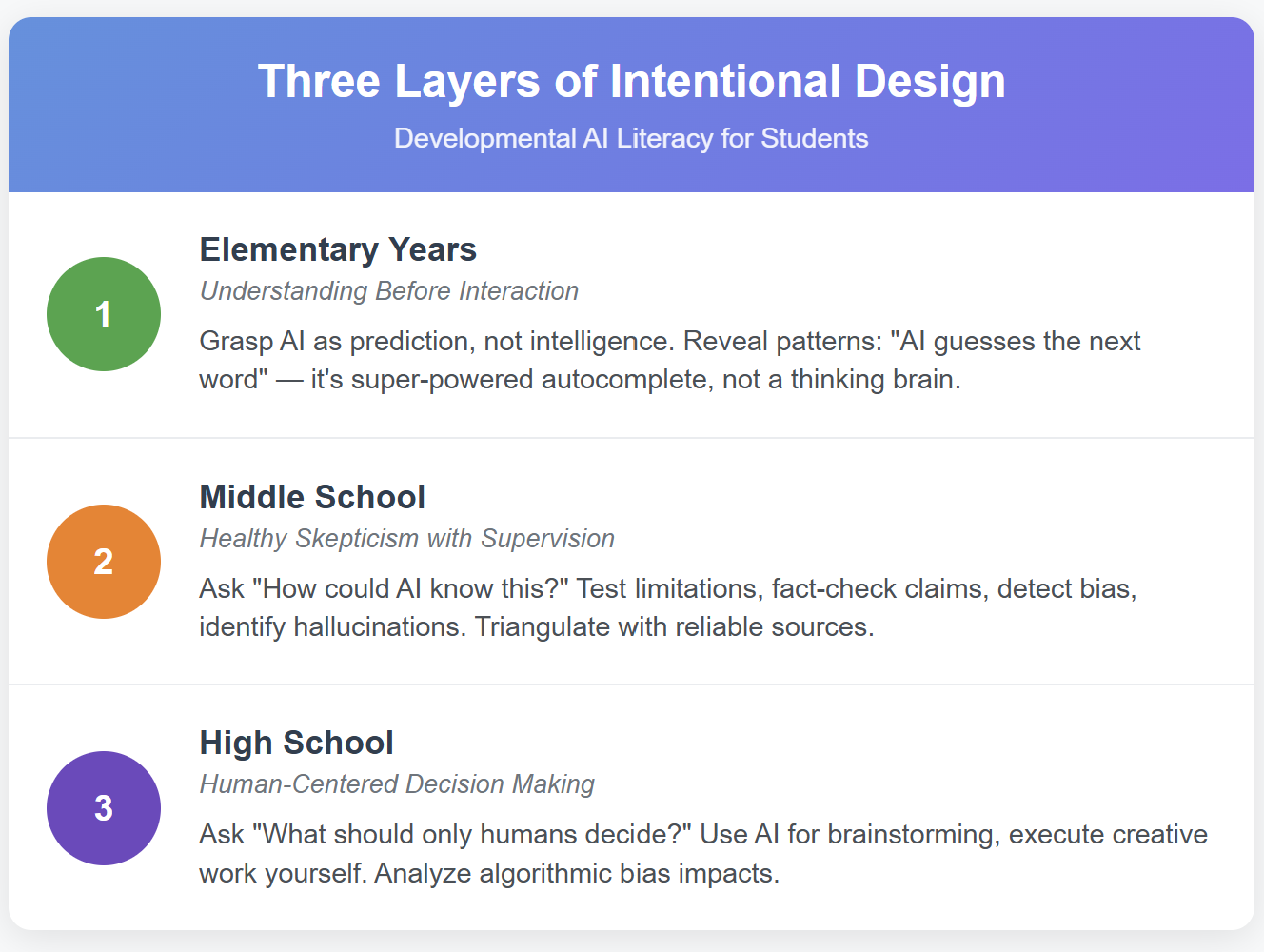

Three Layers of Intentional Design

Rather than banning AI interactive spaces or deploying them as designed, educators can choose a third path: intentional, literacy-focused implementation. This requires thinking developmentally about what students need at different stages.

Elementary Years: Understanding Before Interaction

Students need to grasp AI as prediction, not intelligence. Interactive spaces should reveal algorithmic patterns rather than hide them. Show students how changing prompts changes outputs. Compare AI responses to human responses. Help them understand that AI "guesses the next word based on patterns it learned." It's super-powered autocomplete, not a thinking brain.
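For teachers who want a concrete demonstration of "guessing the next word based on patterns," here is a toy sketch in Python. It assumes a simple bigram word counter, which is far simpler than the neural networks behind real chatbots. The function names and the training sentence are invented for illustration. The sketch still captures the core idea: the program understands nothing and simply picks whichever word most often followed the previous one in its training text.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word that most often followed `word`, or None if never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # no pattern to copy, so the toy model has nothing to say
    return counts.most_common(1)[0][0]


def autocomplete(follows: dict[str, Counter], prompt: str, length: int = 5) -> str:
    """Extend the prompt one guessed word at a time (hypothetical demo helper)."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(length):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Illustrative training text: the model only "knows" these word patterns.
model = train_bigrams("the cat sat on the mat the dog ran to the park the cat sat on the rug")
print(autocomplete(model, "the", length=4))  # the cat sat on the
print(autocomplete(model, "dog", length=4))  # dog ran to the cat
```

Changing the prompt from "the" to "dog" changes the output. The second result, "dog ran to the cat," sounds fluent, but that sentence never appeared in the training text. This gives students a small, safe way to see how pattern-matching can produce confident statements that no source ever made.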

Middle School: Healthy Skepticism with Supervision

Students develop critical evaluation skills by asking "How could AI actually know this?" and "What should I verify?" Interactive spaces become laboratories for testing AI limitations: fact-checking historical claims, detecting bias across different prompt perspectives, identifying hallucinations in familiar topics, and learning to triangulate AI responses with reliable sources.

High School: Human-Centered Decision Making

Students grapple with fundamental questions: "What should only humans decide?" and "How do I maintain authentic relationships and learning?" Interactive spaces serve human goals rather than replacing human thinking. Students compare AI advice to human counseling, use AI for brainstorming but execute creative work themselves, and analyze how algorithmic bias affects different communities.

The Strategic Choice

Every district considering AI interactive spaces faces a fundamental decision.

Traditional Implementation: Deploy spaces as designed. Conversational, seamless, authority-like. Students learn to use AI efficiently but develop dependency patterns that may undermine long-term learning and critical thinking.

Literacy-First Implementation: Design spaces that teach critical evaluation alongside content delivery. Students learn to evaluate AI responses rather than depend on them.

The difference isn't just pedagogical. It's about what kind of citizens we're preparing for an AI-integrated world. Students who understand AI's limitations, biases, and appropriate uses will navigate the future more successfully than those who learned to see AI as an infallible authority.

What This Looks Like in Practice

Consider this scenario that's playing out in classrooms right now: A seventh grader says, "The AI math tutor explains things way better than my teacher does. It's so much easier to understand."

What does this reveal? The student has learned that difficulty and struggle are problems to be eliminated rather than essential parts of learning. They've developed a preference for artificial interaction over the messy, challenging, growth-promoting experience of human relationships.

This isn't necessarily the student's fault. They're responding to how the AI interactive space was designed. Seamless. Always available. Never frustrated. Always patient. Always validating.

But what happens when this student encounters a human teacher who expects them to work through confusion? A peer who disagrees with their thinking? A future colleague who challenges their assumptions?

Moving Forward Thoughtfully

This isn't an argument against AI in education. These tools offer genuine benefits when used thoughtfully. The question is whether we'll be intentional about what students learn from their AI interactions.

The window for intentional choice is narrow. Students are forming relationships with AI systems right now, developing habits and expectations that will influence their lifelong interaction with these technologies. Many districts are still deciding whether to activate interactive spaces. Others are experimenting cautiously.

The decisions educators make this year about AI interactive spaces will determine whether students develop as critical evaluators or passive consumers of algorithmic authority. Whether they learn to use AI as a tool for human goals or begin to see human thinking as an inconvenient obstacle to efficiency.

Schools that rush to deploy interactive spaces without considering their hidden curriculum risk creating students who are technically proficient but intellectually dependent. Students who know how to prompt AI effectively but can't evaluate whether the responses are reliable, appropriate, or wise.

The stakes are too high for anything less than our most thoughtful attention. Students deserve AI experiences that enhance their human capabilities rather than replace them. They deserve to understand the powerful tools shaping their world rather than simply becoming skilled at using them.

The technology isn't going away. The question is whether we'll use this moment to build student capacity for critical thinking, authentic relationship, and human-centered decision making, or whether we'll accept efficiency and convenience as sufficient goals for education.

That choice is being made right now, one district at a time, one interactive space at a time. And students are watching, learning, and adapting to whatever we decide to teach them.

Nick Potkalitsky, Ph.D.
