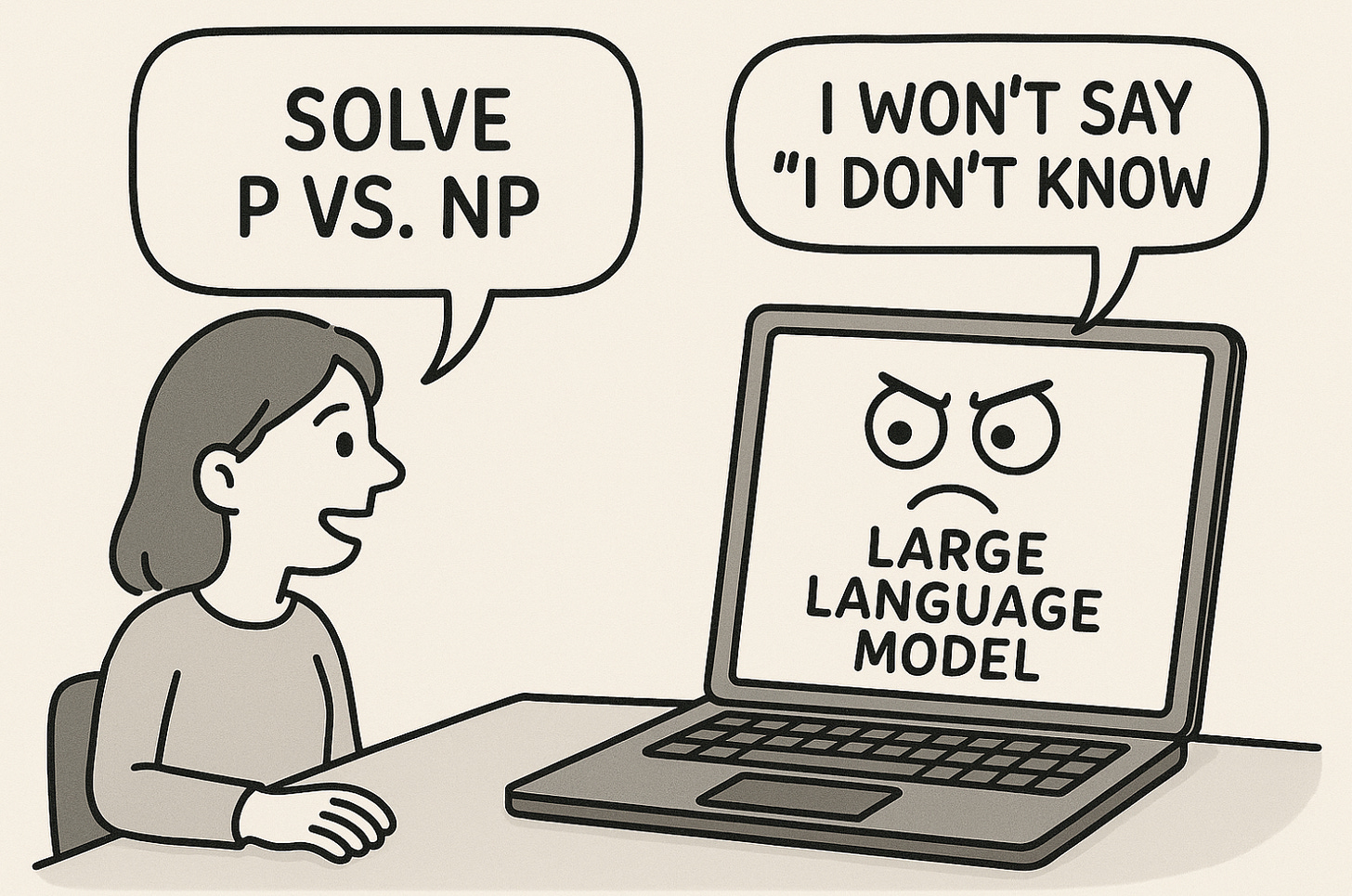

The "I Don't Know" Problem: Why OpenAI's Latest Hallucination Research Misses the Mark

Why It Is So Hard for LLMs to Admit They "Do Not Know"

A new research paper promises a pathway to reduce AI hallucinations, but a closer look reveals fundamental gaps between the mathematical reality its authors uncover and the solutions they propose.

The Promise and the Problem

Researchers Adam Tauman Kalai (OpenAI), Ofir Nachum (OpenAI), Santosh Vempala (Georgia Tech), and Edwin Zhang (OpenAI) recently published "Why Language Models Hallucinate," a paper that has generated considerable excitement in AI circles. The research appears to offer both a rigorous explanation for why large language models produce confident falsehoods and a clear path forward for fixing the problem.

The authors make bold claims about their findings: "We argue that language models hallucinate because the training and evaluation procedures reward guessing over acknowledging uncertainty, and we analyze the statistical causes of hallucinations in the modern training pipeline." They further assert that their proposed change to how benchmarks are scored "may steer the field toward more trustworthy AI systems."

Their core thesis rests on two pillars. First, they prove mathematically that hallucinations are statistically inevitable during pretraining: "We show that, even with error-free training data, the statistical objective minimized during pretraining would lead to a language model that generates errors." Second, they argue that current evaluation methods exacerbate the problem by penalizing uncertainty. Comparing a Model A that correctly signals uncertainty and never hallucinates with a Model B that is identical except that it always guesses when unsure, they write: "Most evaluations are not aligned... Model B will outperform A under 0-1 scoring, the basis of most current benchmarks."

The Mathematical Foundation: Statistical Pressure

The researchers' mathematical analysis of pretraining is genuinely insightful. They demonstrate that generating valid text is fundamentally harder than simply classifying whether text is valid, establishing that "(generative error rate) ≳ 2·(IIV misclassification rate)," where IIV ("Is-It-Valid") is the binary problem of judging whether a candidate output is valid. This reduction to binary classification reveals that the same statistical pressures causing errors in supervised learning also drive hallucinations in language generation.

Their analysis covers three key sources of errors: arbitrary facts with no learnable pattern (like the birthdays of obscure individuals), poor models whose architecture is inadequate for the task, and computationally hard problems. For arbitrary facts, they prove that any algorithm learning from a limited number of training examples will inevitably make errors, particularly on facts that appear only once or rarely in the training data. The bound is stated in terms of a "singleton rate": roughly, if 20% of birthday facts appear exactly once in the pretraining data, a base model should be expected to hallucinate on at least about 20% of birthday questions. The larger the share of one-off facts in the training data, the higher the floor on the error rate.
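To make the rare-facts argument concrete, here is a toy sketch of a singleton rate, in the spirit of the classic Good-Turing "missing mass" estimate: count how many training mentions belong to facts seen exactly once, then divide by the total number of mentions. The input format (one tuple per mention), the placeholder names and dates, and the choice of normalization are assumptions for illustration, not the paper's code.

```python
from collections import Counter


def singleton_rate(fact_mentions):
    """Share of training mentions whose fact appears exactly once.

    Assumption: `fact_mentions` holds one entry per occurrence of a fact in
    the corpus, and we normalize by the total number of mentions
    (a Good-Turing-style N1 / N estimate).
    """
    if not fact_mentions:
        return 0.0
    counts = Counter(fact_mentions)
    singletons = sum(1 for n in counts.values() if n == 1)
    return singletons / len(fact_mentions)


# Hypothetical toy corpus: one well-known fact repeated, two obscure ones seen once.
mentions = (
    [("Albert Einstein", "1879-03-14")] * 3
    + [("Person A", "date-1")]
    + [("Person B", "date-2")]
)
print(singleton_rate(mentions))  # 0.4
```

A high value means much of the corpus consists of one-off facts, which is exactly the regime where the paper's lower bound on errors applies.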

This mathematical framework convincingly demonstrates that hallucinations aren't a fixable bug but an inevitable feature of how language models learn from finite data.

The Hidden Assumption: Where the Logic Breaks Down

The researchers' proposed solution, however, rests on a critical assumption that deserves scrutiny. They suggest that changing evaluation metrics to include "explicit confidence targets" will teach models when to say "I don't know" instead of hallucinating. The idea is appealingly straightforward: tell models "Answer only if you are >t confident" and they'll naturally calibrate their responses appropriately.

But this solution assumes models possess accurate internal uncertainty estimates that are simply being suppressed by evaluation pressure. The evidence for this assumption is surprisingly thin, especially given the mathematical rigor applied to analyzing the pretraining problem.

More fundamentally, the solution ignores a basic fact about how language models are trained: "I don't know" rarely appears in pretraining data as an appropriate response to factual questions.

The "I Don't Know" Desert

Consider what actually fills the massive text corpora used to train language models. Wikipedia articles confidently state facts: "Einstein was born on March 14, 1879." News reports present information authoritatively. Academic papers assert findings. Books and websites overwhelmingly contain confident factual claims rather than expressions of uncertainty.

When models encounter the pattern "[Person]'s birthday is" during training, it's almost always followed by a specific date, not by "unknown" or "I'm not sure." The cross-entropy loss function that drives pretraining rewards the model for assigning high probability to whatever actually comes next in the training data—which is typically confident assertions, not epistemic humility.
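The cross-entropy point can be checked with a toy calculation. Take a made-up corpus in which "[Person]'s birthday is" is followed by a date 99 times and by "unknown" once, with all dates collapsed into a single placeholder token (a simplifying assumption). The distribution that minimizes cross-entropy on this data is simply the empirical frequency, so the loss-optimal model gives "unknown" only a 1% chance, and shifting probability toward the hedge raises the loss.

```python
import math
from collections import Counter


def cross_entropy(model, observed):
    """Average negative log-likelihood (in nats) of the observed next tokens."""
    return -sum(math.log(model[tok]) for tok in observed) / len(observed)


# Hypothetical continuations of "[Person]'s birthday is" in a toy corpus.
observed = ["<date>"] * 99 + ["unknown"]

# Empirical next-token frequencies: the cross-entropy minimizer for this data.
counts = Counter(observed)
empirical = {tok: n / len(observed) for tok, n in counts.items()}

# A "humbler" model that reserves half its probability for the hedge.
humble = {"<date>": 0.5, "unknown": 0.5}

print(empirical["unknown"])                          # 0.01
print(round(cross_entropy(empirical, observed), 3))  # 0.056
print(round(cross_entropy(humble, observed), 3))     # 0.693
```

Nothing in this objective rewards saying "unknown" more often than the corpus itself does.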

This creates a fundamental mismatch. The training process teaches models to complete patterns confidently because that's what appears in human-written text. Authors don't typically publish articles saying "Einstein's birthday is something I don't know"—they look it up first, or they don't write about it at all.

Two Kinds of Correct, One Confusing Solution

The paper uses "accuracy" in two different ways that it doesn't fully distinguish. In Section 4.1, the authors describe current evaluation methods: "Binary evaluations of language models impose a false right-wrong dichotomy, award no credit to answers that express uncertainty... Such metrics, including accuracy and pass rate, remain the field's prevailing norm." Here, accuracy means procedural scoring—matching expected answer formats.

But when proposing their solution, they write: "Answer only if you are > t confident, since mistakes are penalized t/(1−t) points, while correct answers receive 1 point." The phrase "correct answers" assumes some external standard of truth without explaining what that standard is.
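The arithmetic behind the quoted scoring rule is easy to check. Assuming p is the model's true probability of answering correctly, answering earns p − (1 − p)·t/(1 − t) points in expectation, while "I don't know" earns 0. Multiplying through by (1 − t) shows that answering wins exactly when p > t, which is why the instruction can be phrased as a confidence threshold. The function names below are hypothetical, and everything hinges on p actually being known, the very assumption this essay questions.

```python
def wrong_answer_penalty(t):
    """Points lost for a wrong answer under confidence target t (0 <= t < 1)."""
    return t / (1 - t)


def expected_score(p, t):
    """Expected points for answering when the chance of being correct is p.

    Correct answers earn 1 point, wrong answers lose t/(1-t) points,
    and "I don't know" (not answering) earns 0.
    """
    return p - (1 - p) * wrong_answer_penalty(t)


def should_answer(p, t):
    """Answer only if answering beats abstaining in expectation (i.e. p > t)."""
    return expected_score(p, t) > 0


# With t = 0.75 a wrong answer costs 3 points.
print(wrong_answer_penalty(0.75))  # 3.0
print(should_answer(0.8, 0.75))    # True
print(should_answer(0.7, 0.75))    # False
```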

This reflects a broader tension between statistical accuracy (matching training patterns) and epistemic accuracy (corresponding to reality). The authors' mathematical analysis proves that models will generate statistically plausible but potentially false statements. Their proposed solution assumes this insight will translate to better truth-tracking, but they don't provide the same rigor for this leap.

When asked "What is Kalai's birthday?", a model might respond "October 15th": statistically accurate in the sense that it follows learned patterns, but epistemically wrong if that is not the actual date. What the model has learned is that confident assertions typically follow birthday questions, not whether it actually knows the answer.

The Evaluation Mirage

The authors present compelling evidence that current benchmarks penalize uncertainty through binary grading schemes. They surveyed ten major AI evaluations and found that nine offer no credit for "I don't know" responses, with only WildBench providing minimal partial credit.
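The paper's Model A versus Model B comparison can be sketched with the same arithmetic. Assume, hypothetically, that both models have the same chance of being right on each question; Model A says "I don't know" whenever that chance is at most 0.75, while Model B always guesses. Under plain 0-1 grading an abstention and a wrong answer both score 0, so every guess adds a non-negative amount and Model B can never do worse. Adding a t/(1 − t) penalty for wrong answers reverses the ranking. The confidence values are invented for illustration.

```python
def expected_total(confidences, answered, wrong_penalty):
    """Expected benchmark score summed over questions.

    confidences[i]: probability that the answer to question i is correct.
    answered[i]: False means the model said "I don't know" (scores 0).
    wrong_penalty: points lost per wrong answer (0.0 for plain 0-1 grading).
    """
    return sum(
        p - (1 - p) * wrong_penalty
        for p, did_answer in zip(confidences, answered)
        if did_answer
    )


# Hypothetical per-question chances of being correct, shared by both models.
confidences = [0.95, 0.9, 0.6, 0.3, 0.1]
t = 0.75

model_a = [p > t for p in confidences]  # abstains when unsure
model_b = [True] * len(confidences)     # always guesses

for name, penalty in [("0-1 grading", 0.0), ("t = 0.75 penalty", t / (1 - t))]:
    a = expected_total(confidences, model_a, penalty)
    b = expected_total(confidences, model_b, penalty)
    print(f"{name}: A = {a:.2f}, B = {b:.2f}")
```

Under 0-1 grading Model B comes out ahead; once wrong answers cost points, Model A does.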

But changing evaluation metrics may address symptoms rather than causes. If models lack accurate internal uncertainty estimates—which seems likely given that uncertainty expressions are rare in training data—then prompting them to be selectively confident may simply shift the hallucination problem rather than solve it.
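This concern can be put in numbers using the confidence-target scoring (+1 correct, −t/(1 − t) wrong, 0 for "I don't know"). Suppose, hypothetically, that a model reports 90% confidence on a class of questions it actually answers correctly only 60% of the time. With t = 0.75 it clears the threshold, answers, and still hallucinates 40% of the time, now at an expected cost rather than a gain; a calibrated model with the same 60% accuracy would have abstained. The numbers are invented, and real models do not necessarily expose a single confidence value like this.

```python
def realized_score(stated_conf, true_acc, t):
    """Expected points when the model decides using its *stated* confidence
    but is actually correct with probability true_acc.

    Scoring: +1 correct, -t/(1-t) wrong, 0 for "I don't know".
    """
    if stated_conf <= t:
        return 0.0  # abstains
    return true_acc - (1 - true_acc) * t / (1 - t)


t = 0.75
overconfident = realized_score(stated_conf=0.9, true_acc=0.6, t=t)
calibrated = realized_score(stated_conf=0.6, true_acc=0.6, t=t)
print(round(overconfident, 2), calibrated)  # -0.6 0.0
```

The threshold rule is only as good as the confidence it is applied to.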

The researchers acknowledge this limitation obliquely. Discussing retrieval and search, they note that "search may not help with miscalculations such as in the letter-counting example, or other intrinsic hallucinations." This hints at deeper issues that their evaluation-focused solution cannot address.

The Road Not Taken

A more honest conclusion from this research might acknowledge that the mathematical analysis reveals fundamental limitations that evaluation changes alone cannot overcome. The authors have identified a core tension: machine learning optimizes for statistical correlation, not truth correspondence, yet we expect these systems to make reliable factual claims about the world.

Their finding that hallucinations are mathematically inevitable during pretraining suggests the problem may require more radical solutions than tweaking evaluation metrics. Perhaps we need architectures that explicitly model uncertainty, training regimes that incorporate epistemic humility, or hybrid systems that combine pattern matching with structured knowledge bases.

The Danger of False Optimism

The gap between this paper's rigorous problem analysis and its speculative solutions reflects a broader issue in AI research: the tendency to frame fundamental limitations as engineering challenges waiting for clever fixes. When OpenAI researchers suggest that evaluation changes "may steer the field toward more trustworthy AI systems," they risk creating false confidence in solutions that don't match the mathematical reality they've uncovered.

The research community and the public deserve more honest assessments of these limitations. Hallucinations may be reducible but not eliminable, manageable but not solvable. Acknowledging this doesn't diminish the value of incremental improvements—it simply sets appropriate expectations for what those improvements can achieve.

The path toward more reliable AI systems likely requires grappling with the fundamental tension between statistical learning and truth-seeking that this paper illuminates but doesn't resolve. Until we develop training paradigms that can bridge this gap, we should expect hallucinations to remain a persistent feature of large language models, regardless of how we evaluate them.

Nick Potkalitsky, Ph.D.

Check out some of our favorite Substacks:

Mike Kentz’s AI EduPathways: Insights from one of our most insightful, creative, and eloquent AI educators in the business!!!

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!!!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection among computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s The Computerist Journal: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Jason Gulya’s The AI Edventure: An important exploration of cutting-edge innovations in AI-responsive curriculum and pedagogy.
