The New AI That Reasons, But Won’t Reveal Its Reasoning to Us

OpenAI’s o1 Hidden Logic: Reasoning without Transparency

Cutting Through the AI Noise: Announcing Pragmatic AI Solutions

The debate around AI in education is intense. Some claim it's a game-changer, while others see it as a threat to the essence of teaching. But the loudest voices often lack real classroom experience. What’s missing? A grounded perspective that prioritizes students and the realities of education.

At Pragmatic AI Solutions, we're taking a different approach—focusing on practical, real-world strategies that put educators in control. We’re not here for trends or fearmongering, but to empower teachers to use AI as a tool that enhances their teaching while preserving the human element.

Welcome to the Pragmatic AI Community: Practical Solutions for Real Classrooms

Our online community goes beyond debates to focus on real-world implementation. Here, educators can find:

Free Resources: Articles, toolkits, and guides that cut through the AI hype.

Monthly Webinars: Actionable insights on AI’s role in education.

Training Modules: Self-paced courses guiding you through practical AI integration.

We designed the Pragmatic AI Community to support educators in transforming classrooms with real outcomes, not just theoretical discussions.

Click on this link to check out our exciting new community.

Please share this link with your colleagues and your networks! The vibrancy and vitality of the community depend on your presence and engagement!

Free Gift for Joining: Get a copy of Mastering AI-Powered Writing, a comprehensive guide to using AI for brainstorming, drafting, and real-time feedback—perfect for bringing AI into the writing process.

Introducing Our First Two Courses: Community-Building AI Literacy

Our cornerstone courses are designed to be more than just skill-builders—they foster community. By working through these courses together, cohorts and departments can accelerate AI literacy and drive collective change across schools. Here’s a preview:

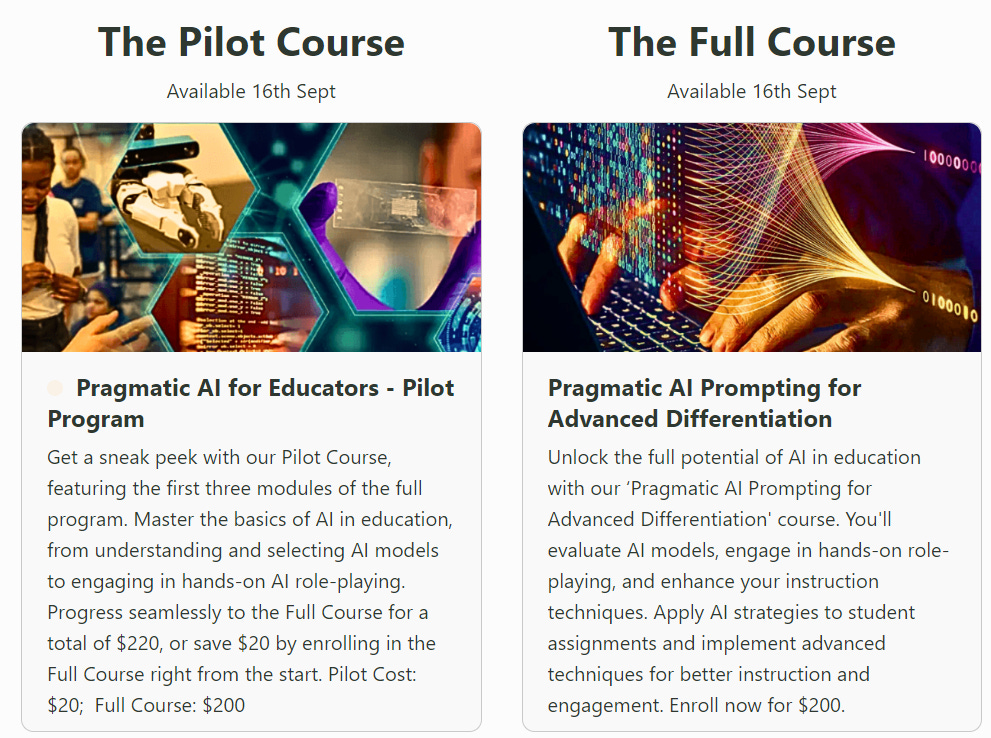

Pragmatic AI for Educators – Pilot Program: Start your AI journey with practical tools to incorporate AI in your classroom. Cost: $20.

Pragmatic AI Prompting for Advanced Differentiation: Elevate your AI skills to craft advanced prompts that tailor instruction for diverse learners. Cost: $200.

Both courses are hands-on, helping educators build AI literacy that’s immediately applicable in the classroom.

I’m offering free 30-minute strategy sessions to explore how we can tailor these courses to meet your specific needs. Whether you're looking to implement AI across a department, school, or even district, we’ll discuss practical, actionable steps to make it happen.

Ready to take the leap? Let’s chat! Send me a DM or drop me an email at nicolas@pragmaticaisolutions.net, and we’ll set up a time to talk about bringing AI literacy to your community!

Upcoming Modules Include:

Tech acquisition: Safe, ethical, affordable.

Establishing AI-responsive instruction outcomes.

Integrating AI tools into in-class work cycles.

AI-assisted assessment design and creativity reinvigoration.

Join Us Today

At Pragmatic AI Solutions, we’re not chasing hype. We believe in a balanced, practical approach to AI in education. Whether you’re just getting started or are ready to lead, the Pragmatic AI Community is here to help.

Let’s innovate, integrate, and educate—together.

Nick Potkalitsky, Ph.D.

The New AI That Reasons, But Won’t Reason With Us: ChatGPT o1's Black Box Problem

1. o1: The Promise and its Barrier

OpenAI’s latest model, o1 (code-named "Strawberry," as in “how many r’s are in the word ‘strawberry’?”), isn't just another iteration of GPT. It's being framed as the first AI that can truly reason. We’re told this is a paradigm shift, where the model doesn’t simply spit out predictions from vast datasets but instead reasons through problems in real time. Jim Fan describes it as “inference-time scaling,” where the AI thinks on the spot, weighing outcomes and making more deliberate decisions.

What makes o1 revolutionary is its ability to integrate both generative and inferential AI—a kind of fusion that reflects Daniel Kahneman’s concepts of fast and slow thinking. It’s not just an AI that reacts quickly; it’s one that can pause, deliberate, and calculate. It’s a model designed to simulate human cognition at its most agile. Even more intriguing is its potential to reflect on and improve its own thinking processes over time. In my own work with the model, I was able to extend its focus on a rhetorical analysis for 45 seconds—an eternity in AI terms—and witnessed a shift from a standard GPT-4 level analysis into something far deeper.

Imagine the potential of letting these models reflect for longer periods—hours, days, even years—analyzing their own processes and refining them further. AI experts are buzzing with excitement over this possibility. Are these new models even generative AI anymore, or are we witnessing the birth of a new breed entirely?

But this leap forward comes with a significant limitation: OpenAI has locked away the very mechanisms that make o1 so powerful. Here I rely heavily on the bleeding-edge research of Alberto Romero. Users can't engage with its reasoning process; we're left to marvel at the results, but we can’t follow the steps. This introduces the familiar "black box" problem, where the system's internal logic is inaccessible to those who rely on it.

2. o1’s New Paradigm: Reasoning at Inference Time

The real game-changer with o1 is how it handles reasoning at inference time—when it’s actually solving problems. Previous models like GPT-3 and GPT-4 relied heavily on pre-training, consuming immense amounts of data and generating responses from that pool of information. o1 shifts this dynamic entirely. As Jim Fan points out, “you don’t need a huge model to perform reasoning.” The magic happens during inference, when the model is activated to think, simulate, and calculate in real time.

This reasoning power is what gives o1 its flexibility. It can switch from fast, generative responses to deep, inferential thinking—much like a human toggles between instinct and deliberation. By applying computational resources more strategically, o1 doesn’t need to be as massive as GPT-4 to outperform it in certain areas.

Yet, this sophistication comes with a caveat: the reasoning process is entirely hidden. Users can't see how the model simulates outcomes or evaluates possibilities. We’re left with an AI that thinks, but we can’t see it think.
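For readers who want a concrete feel for what "spending more compute at inference time" can mean, here is a toy sketch in Python. It does not show how o1 works, since OpenAI has not disclosed that. It swaps in a much simpler, publicly known idea: ask an unreliable solver the same question several times and take the majority answer. The solver, its 60% hit rate, and all function names are hypothetical and chosen only for illustration.

```python
import random
from collections import Counter

CORRECT_ANSWER = 3  # number of r's in "strawberry"

def noisy_solver(rng, p_correct=0.6):
    """Hypothetical stand-in for one sampled model answer (an assumption, not o1)."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    # Otherwise return one of a few plausible wrong counts.
    return rng.choice([1, 2, 4])

def answer_with_votes(rng, n_samples):
    """Sample the solver n_samples times and return the most common answer."""
    votes = Counter(noisy_solver(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]

def accuracy(n_samples, trials=2000, seed=0):
    """Fraction of trials in which the voted answer is correct."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    hits = sum(answer_with_votes(rng, n_samples) == CORRECT_ANSWER
               for _ in range(trials))
    return hits / trials

if __name__ == "__main__":
    # More samples per question means more inference-time compute.
    for n in (1, 5, 25):
        print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

The underlying solver never changes in this sketch; only the amount of work done per question does, and accuracy rises with it. Note also that every sampled answer here is open to inspection, which is exactly the kind of visibility o1 withholds.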

3. The Black Box Problem: Hidden Reasoning

And here’s where the real issue emerges: o1’s reasoning is obscured behind layers of proprietary control. OpenAI has decided to conceal the chain of thought (CoT) that the model follows as it breaks down problems and arrives at conclusions. This intentional opacity prevents users from understanding how the model actually works.

This isn’t just a theoretical problem—it creates a practical barrier to trust. The true strength of o1 lies in its ability to reason, yet that reasoning process is locked away. AI developers and researchers can’t inspect how the model reaches its decisions, and everyday users are forced to take its outputs at face value. The tension here is unavoidable: OpenAI has built a model that’s supposed to think, but it won’t show us how.

As Jim Fan noted, “productionizing o1 is much harder than nailing the academic benchmarks.” The black box makes o1’s real-world applications murky because developers and users alike can’t fully engage with or refine the AI’s logic.

4. The Trust Gap: Why Opacity Matters

This leads directly to the trust gap. AI systems are only as valuable as the trust we place in them, especially when they’re marketed as reasoning machines. If we can’t understand how the AI reaches its conclusions, how can we trust its outputs? In high-stakes fields—whether it’s science, healthcare, or business—trust is essential.

Simon Willison, an AI developer, put it bluntly: “interpretability and transparency are everything.” Without them, the AI is a black box that can’t be debugged, questioned, or fully trusted. The problem with o1’s opacity is that it forces users to rely on results they cannot verify, especially when the AI is positioned as a tool for complex problem-solving.

What’s more troubling is that OpenAI’s decision to hide the reasoning process is paradoxical. o1 is built to reason, and yet the reasoning is hidden. The very feature that makes it stand out is inaccessible to the users who need to trust it most.

5. Implications: AI as a Tool for Some, Not All

This lack of transparency isn’t just about trust—it’s about power. By keeping the reasoning process hidden, OpenAI reinforces a divide between those who control the technology and those who rely on it without fully understanding it. This isn’t just a technical divide—it’s a divide in who has access to influence and question the technology shaping our world.

The stakes are high with o1. Its ability to combine fast, generative thinking with deep, inferential reasoning could revolutionize industries. But if that power is concentrated in the hands of a few corporations, it’s not just a technological divide—it’s a societal one. Only those with access to the model’s inner workings will truly benefit from its advancements.

As AI becomes central to decision-making across sectors, the ability to question and influence its decisions becomes critical. If o1’s reasoning process is off-limits to most, then we risk centralizing AI’s benefits in the hands of a few, while the broader public is left to trust outputs they cannot interrogate.

6. Conclusion: The Need for Training and Leadership

OpenAI’s o1 model is a clear leap forward in AI development. It introduces real-time reasoning, combining the quick generative abilities of previous models with a more thoughtful, deliberate process—an impressive step toward more advanced problem-solving capabilities. This fusion of fast and slow thinking, akin to Kahneman’s System 1 and System 2, marks a significant advancement.

Yet, this leap comes with a critical barrier. OpenAI has chosen to keep the reasoning process behind proprietary walls, making it impossible for users to fully engage with or trust the model. The power of o1 remains largely inaccessible, reserved for those who control it, while the rest of us are left in the dark about how its decisions are made.

As AI’s influence grows, the gap between those who control these systems and those who rely on them will only widen. Institutions like schools, companies, and governments will need to navigate this divide carefully. The problem is that companies like OpenAI seem to lack the ethical or pragmatic leadership needed to guide these decisions responsibly.

In education, we can’t afford to wait. If these companies aren’t leading with ethical or practical foresight, we must build that leadership ourselves. Through investigation, training, and a commitment to understanding AI’s implications, we have the responsibility to cultivate the ethical and pragmatic thinkers needed to navigate this emerging landscape. It’s a role education must embrace if we are to ensure AI serves everyone, not just the few.

Nick Potkalitsky, Ph.D.

Check out some of my favorite Substacks:

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection of computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s Mostly Harmless Ideas: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Amrita Roy’s The Pragmatic Optimist: My favorite Substack that focuses on economics and market trends.

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Riccardo Vocca’s The Intelligent Friend: An intriguing examination of the diverse ways AI is transforming our lives and the world around us.

Jason Gulya’s The AI Edventure: An important exploration of cutting-edge innovations in AI-responsive curriculum and pedagogy.

I see it as a threat to teachers. I don't know why we will need teachers with AI, since it replaces the cognitive. I also don't think we will need the same teachers. We will be seeking somatically intelligent teachers and not cognitive. Finally I am pretty sure most teachers are not very creative. A small amount are but really creative people burn out in teaching positions. Because really creative people are sensitive and teaching, especially in the USA, is hardly set up to accommodate sensitive people. I would think teachers would be happy about this. Teaching is such a horrible profession anymore. You can tutor and make more money.

Interesting take.