The Three Pathways to AI Source Literacy Method

Teachers and students need a classroom-tested methodology for evaluating and incorporating AI-generated materials into their learning process.

I just wanted to thank you all for another great year at Educating AI. We are rapidly approaching the 4000 subscriber mark, and have reached over 6000 followers at this point. 2024 has truly been a year of insight and meaningful collaboration at Educating AI. A heartfelt thanks to all our guest writers, including Elliot Bendoly, Alan Knowles, James Hammer, and JC Price.

For those considering upgrading to a paid subscription, we're offering a special 20% off lifetime discount if you sign up before Dec. 31st. During this same period, I'm also offering discounts on our online AI Course Bundle. Remember to register with an email to access the courses, and please reach out if you encounter any sign-in issues.

Looking ahead to 2025: if you're interested in contributing to Educating AI, I'd love to hear your ideas at nicolas@pragmaticaisolutions.net. As always, I'm excited to grow our network and continue the important work we do here.

The Illusion of Effortless Knowledge

The rapid adoption of AI writing tools has created an illusion of effortless knowledge production. Ask a question, get an answer. Request a draft, receive polished prose. But this seamless efficiency masks a critical problem: we're losing the essential friction that makes learning and writing meaningful. We need a framework that deliberately reintroduces careful source evaluation into our AI interactions.

The Three Pathways to AI Source Literacy Method emerged from observing how both teachers and students engage with AI tools. What became clear wasn't just how these tools are used, but how their apparent efficiency can bypass crucial moments of verification and critical thinking. Today's AI models, trained on vast and largely unvetted internet data, present information with a confidence that masks their underlying uncertainty. This isn't a minor flaw; it's a fundamental characteristic that demands a new approach to source literacy.

Rather than treating AI interactions as simple input-output exchanges, we need a cognitive architecture that builds in moments of resistance and reflection. When we understand how to move between exploratory, targeted, and generative modes of engagement, we can better integrate traditional source verification with AI's pattern-matching capabilities. This framework isn't about slowing down the process artificially—it's about maintaining the rigor that meaningful learning requires.

Understanding the Three Pathways

The Three Pathways to AI Source Literacy Method operates through three distinct modes of engagement:

Exploratory Inquiry: Our first engagement with AI for understanding real-world information, requiring careful verification of AI-generated claims against authoritative sources.

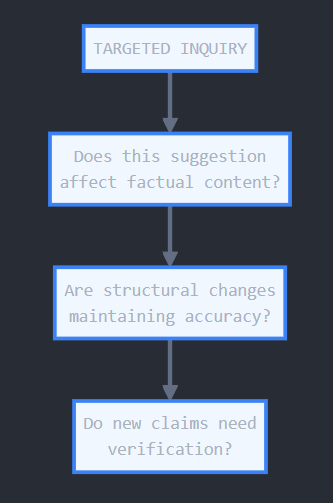

Targeted Inquiry: Using AI to refine existing work while remaining alert to moments when refinement requires renewed fact-checking.

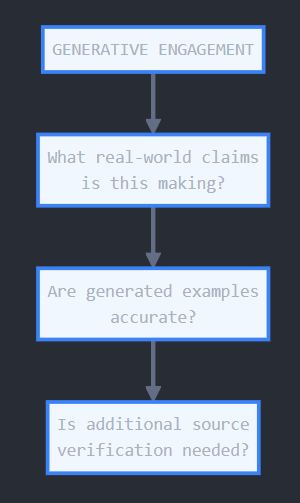

Generative Engagement: Collaborating with AI to create new content, maintaining constant verification of any claims about the real world.

What makes these pathways significant isn't just their universal application, but how they create necessary moments of friction in our AI interactions. When a teacher uses AI to explore a historical event, they engage in the same critical source verification as a student researching that topic. When crafting essay prompts or strengthening arguments, both teachers and students must navigate between AI's pattern-matching suggestions and verified information. These parallel experiences reveal how source literacy remains crucial across all levels of AI engagement.

Exploratory Inquiry: The Core of Source Literacy

Exploratory Inquiry represents our initial engagement with AI for understanding real-world information. But unlike traditional research, where the effort lies in finding sources, AI presents us with instant, confident responses about any topic. This immediacy creates our first critical challenge: maintaining rigorous source verification when the technology seems to eliminate its necessity.

When teachers probe AI about historical events or students query it about scientific concepts, they receive responses that sound authoritative and well-structured. Yet these responses emerge from AI's pattern matching across unvetted internet data, not from careful source evaluation. A response about World War I might merge different historical accounts without attribution. An explanation of climate change might mix current research with outdated data. The AI's fluent writing style can mask these fundamental issues of source reliability.

This isn't just about fact-checking individual claims. It's about maintaining the habit of source verification in an environment designed for speed and convenience. While we await more sophisticated AI research tools built on carefully curated datasets, we must treat AI responses as starting points for investigation, not endpoints. This means cross-referencing with authoritative sources, identifying potential gaps or biases, and maintaining healthy skepticism about any factual claims.

Exploratory Inquiry thus serves as our first line of defense against the erosion of source literacy. It reminds us that understanding complex topics requires more than pattern matching—it requires careful evaluation of where information comes from and how it's verified. This careful attention to sources creates the necessary friction that meaningful learning demands.

Targeted Inquiry: When Refinement Returns to Research

Targeted Inquiry appears to shift our focus from source verification to refinement of existing work. Teachers and students begin using AI to analyze and improve specific aspects of their writing or thinking. A lesson plan needs tightening, an essay needs structural revision, an argument needs clarification. The initial urgency of fact-checking seems to fade as we engage with questions of form and effectiveness.

Yet this apparent shift masks a deeper connection to source verification. As we engage in targeted revision, questions of interpretation and factual accuracy naturally emerge. A structural suggestion raises questions about historical sequence. A stylistic change requires verification of current data. What begins as refinement often leads us back to checking sources. This isn't a detour—it's a natural part of thorough revision.

The AI's pattern-matching capabilities can help identify gaps in logic or clarity, but addressing these gaps often requires engagement with source material beyond the AI's training data. We find ourselves moving fluidly between refinement and research, between improving structure and verifying claims. This cycle maintains the essential friction that prevents AI tools from flattening the complexity of real-world information into mere pattern completion.

Generative Engagement: The Source Dilemma

Generative Engagement presents our most complex relationship with AI tools. The technology's ability to instantly produce fluent, well-structured content creates a powerful temptation to bypass source verification entirely. A lesson plan appears complete, an essay emerges fully formed, a creative piece flows seamlessly. Yet this apparent completeness masks a crucial challenge: generated content often makes claims about the real world that require careful verification.

This verification challenge manifests differently in generative work than in exploration or refinement. Here, fact-checking isn't just about accuracy—it's about the integrity of the creative process itself. Every generated piece contains assertions, assumptions, and connections that need evaluation. A historical fiction piece requires period accuracy. An argumentative essay needs current data. A science lesson plan must reflect up-to-date research. These aren't secondary considerations but essential elements of effective content generation.

The AI's fluent writing can create an illusion of authoritative knowledge, making source verification feel unnecessary or even disruptive to the creative flow. This is precisely where we need to introduce intentional friction. Generated content requires constant cycling between creation and verification, between fluid production and careful fact-checking. The strongest work emerges not from seamless AI integration, but from this deliberate dance between generation and source validation.

The Necessity of Friction

The Three Pathways to AI Source Literacy Method isn't just a framework for using AI tools—it's a cognitive architecture that preserves essential habits of source verification in an age of effortless content generation. As we move between exploratory, targeted, and generative modes, we repeatedly encounter moments that demand careful attention to sources. These moments of necessary friction prevent AI's pattern-matching capabilities from replacing genuine engagement with factual accuracy.

The framework's value lies not in separating these pathways but in recognizing their constant interplay. A question about facts leads to refinement of ideas; an attempt at generation cycles back to verification; targeted work raises new questions requiring exploration. This fluid movement between modes naturally maintains the rigor that meaningful learning and creation demand.

While we await more sophisticated AI research tools built on curated datasets, this approach offers a practical way forward. It suggests that effective AI use isn't about maximizing efficiency but about maintaining critical awareness throughout our interactions. The strongest work emerges when we embrace rather than eliminate the friction of source verification—when we treat AI as a collaborator in a process that remains grounded in careful evaluation of real-world information.

Check out some of my favorite Substacks:

Mike Kentz’s AI EduPathways: Insights from one of the most thoughtful, creative, and eloquent AI educators in the business!!!

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!!!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection of computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s Mostly Harmless Ideas: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Riccardo Vocca’s The Intelligent Friend: An intriguing examination of the diverse ways AI is transforming our lives and the world around us.

Jason Gulya’s The AI Edventure: An important exploration of cutting-edge innovations in AI-responsive curriculum and pedagogy.

I love your work and have referred to it in my professional communication. However, I am hoping that the copyright mark in your post is intended to be facetious since ideas cannot be copyrighted. By default, everything you create is copyrighted, but your demarcation suggests that you intend to "possess" the word combination as a trademark that cannot be used by anyone else, similar to how "3-peat" is trademarked.

Keep up the excellent work.

It's a very useful framework, and I'm looking forward to keeping up with its maturation.