Transactional vs. Conversational Visions of Generative AI in Teaching

AI as a Printer, or AI as a Thought Partner

A New Substack by Eric Martinsen, Ph.D.

I'm struck by the powerful framing in Eric Martinsen's latest piece for his Substack, Notes on Teaching Writing in the AI Era.

As Chair of the English Department at Ventura College, Martinsen cuts to the heart of the central challenge AI poses for education with his conceptual distinction between "transactional" and "conversational" (or, as he also calls it, "relational") approaches to generative AI.

What makes this framing so potent is how it implicitly critiques the dominant paradigm of AI use in education today. By naming the "transactional" mindset—where AI serves merely as a means to an end, a product generator—Martinsen challenges us to recognize how our default interactions with these tools often undermine their transformative potential.

The challenge moving forward lies precisely in how we counteract the structural and technical designs of these tools that pull users toward transactional use. While some educators have invoked Nigel Daly's thesis about "cognitive bleed" to raise concerns, I tend to see this potential bleed as an opportunity rather than a fixed fate. Through intentional scaffolding, conscious instructional design, and robust AI literacy, we can shape the direction of this cognitive exchange.

Martinsen's piece offers both conceptual clarity and practical pathways toward this more generative vision. For educators wrestling with how to meaningfully integrate AI into writing instruction, this Substack represents not just thoughtful commentary but an invitation to reimagine our relationship with AI tools from the ground up.

I encourage you to read, reflect, and join the conversation Martinsen is cultivating—one that promises to help us move beyond transactional approaches toward truly conversational partnerships with AI in education.

Nick Potkalitsky, Ph.D.

As educators experiment with integrating AI into teaching and learning, our perspective matters. This post explores two fundamentally different mindsets: seeing AI as merely a product generator versus viewing it as a thought partner. While this distinction may not be revolutionary to everyone, articulating it has transformed my own approach to AI in education, and I share it for those who might find themselves at a similar crossroads in their thinking.

The Fundamental Question

As instructors consider whether and how to incorporate Generative AI into their teaching, they are confronted with a fundamental question: What is AI's role in learning? Is it merely a product-producing machine that substitutes for students' thinking, or can it serve as a meaningful interlocutor that helps students refine their ideas and sharpen their critical thinking?

This question has profound implications. If we see AI as nothing more than an output generator, then using it to complete writing assignments can only be understood as a shortcut or a form of cheating, something that weakens rather than strengthens human thought. If AI replaces a student's reasoning and communication skills, then it is no different from a calculator replacing basic arithmetic skills before students truly understand how numbers work. In this view, AI contributes to intellectual atrophy, undermining the very skills writing instructors are trying to cultivate.

However, if we shift our perspective and consider AI as a conversation partner—someone with broad knowledge, adaptable roles, and the ability to challenge and refine students' ideas—then a different picture emerges. AI ceases to be a mere product generator and becomes an active participant in the learning process. This perspective is where I find myself, and it has fundamentally changed how I think about writing instruction in the AI era.

Notes on Teaching Writing in the AI Era is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

The Moment of Realization: Two Ways of Seeing AI

I first articulated this contrast after a long conversation with a fellow writing instructor. We had spent the afternoon discussing philosophy of language, theories of mind, and their relationship to Generative AI. As we were wrapping up, still deep in discussion, I was suddenly struck by a realization: there are two fundamentally different mindsets about AI.

One sees AI as transactional—a tool that simply delivers a product. In this model, students provide input, and AI generates an output, much like a vending machine dispensing a snack. This is the mindset that fuels anxiety over AI detection, Turnitin scores, and honor violations. It is also the mindset that limits the potential of AI to mere automation.

The other sees AI as relational—a partner in thought and learning. In this model, AI is not just spitting out generic papers but engaging students in meaningful dialogue, prompting them to clarify their ideas, challenge assumptions, and think more deeply. This is the model that excites me.

I recognize that this contrast between transactional and relational approaches to AI isn't a groundbreaking revelation for everyone. Many educators and AI researchers have long understood this distinction. For instance, Nick Potkalitsky recently proposed thinking of GenAI as an "inquiry tool rather than a text generator." My colleague Anna Mills, a fellow California Community College writing teacher and GenAI expert who has presented at Ventura College, where I teach, captures this distinction brilliantly in a simple formula:

When it comes to learning,

👍 Yes to AI for input

👎 No to AI for output

For me, articulating it clearly was a personal breakthrough that changed how I approach AI in education. I share it here for those who, like me, might be working through their own understanding of AI's role in learning.

Visualizing the Contrast: AI as a Printer vs. AI as a Thought Partner

The images here illustrate this contrast. The first captures the outdated view, in which AI functions like a mechanical printer. The human user types in a prompt, and a long strip of text mechanically rolls out. There is no engagement, no conversation, just a one-way transaction. The rigid, impersonal nature of the interaction reinforces the idea that AI is simply a content-generation tool and nothing more.

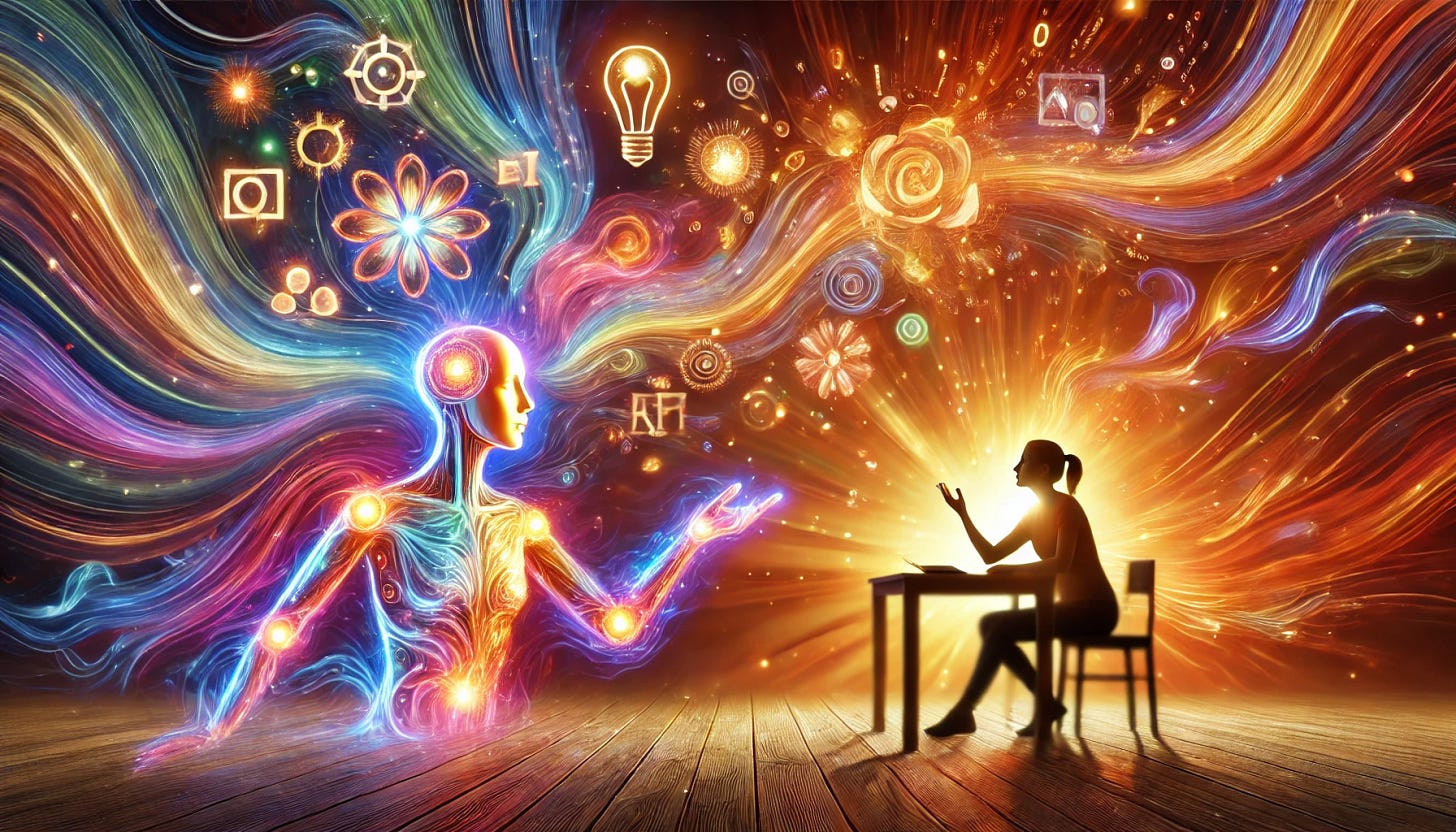

But this second image tells a different story. Here, AI is not a printer but a luminous, abstract figure engaged in dialogue with the human. Ideas and energy flow between them, and, crucially, the human's own thinking is being actively shaped and enriched by the exchange. This is not about passively receiving information—it is about co-creating knowledge. The person is inspired, expressive, and fully engaged, showing that the real value of AI lies not in replacing human thought but in amplifying it.

This illustration further emphasizes the conversational nature of AI as a thought partner. The rich visual language of colors, symbols, and flowing energy represents the dynamic intellectual exchange that can happen when we approach AI as a collaborator rather than merely a tool.

Teaching with AI: A Real-World Example

For me, this shift in mindset was prompted at least partly by experimenting with Brisk Boost for Students, a tool designed to integrate AI into learning in a way that prioritizes engagement and critical thinking. According to its website:

With Brisk Boost, educators can turn any online resource into an educational, AI-powered activity and then monitor student learning and engagement in real-time. Brisk Boost creates a safe environment for students to leverage AI in their learning while giving educators valuable insights - bridging the gap between traditional teaching methods and cutting-edge educational technology.

I tested it by creating an "Exit Ticket" activity (a quick assessment at the end of a lesson) based on a video about teaching with AI. Try it out if you’d like. With just a few clicks, I installed the free Chrome extension and transformed the video into an interactive AI-driven discussion.

The experience was striking. The AI dialogue box that appeared beside the video did not simply regurgitate information—it pushed me to clarify my own thinking about AI in the classroom. The AI-driven chat identified four learning objectives (which I could modify in instructor mode) and guided me through a structured conversation, ensuring I engaged with each key concept. But this was not a surface-level quiz or a rote comprehension check. The AI required me to provide detailed, thoughtful responses, and it continued the conversation until I had truly demonstrated understanding.

While the tool has limitations—it works best with clearly defined learning objectives and sometimes struggles with highly nuanced topics—it demonstrated how AI can deepen rather than shortcut learning.

This experience reinforced what I already suspected: the most powerful use of AI in education is not to produce content but to provoke deeper engagement. The AI did not write my thoughts for me—it drew them out of me.

Rethinking Writing Instruction in the AI Era

So how should we, as writing instructors, think about AI? If we accept the transactional model, then AI represents only a threat—a shortcut, a way to bypass thinking, a tool that undermines the writing process. If, however, we embrace the relational model, then AI can be an invaluable pedagogical ally. It can serve as a discussion partner, an intelligent tutor, and a creative stimulant, helping students refine their arguments, clarify their reasoning, and develop their voice.

This is the mindset I want to cultivate in my students. I don't want them to see AI as a substitute for their own thinking. I want them to see it as a tool that challenges and refines their ideas, much like a good teacher or a thoughtful peer reviewer would. I want them to approach AI not with the question, "What can you write for me?" but rather, "How can you help me develop my ideas?"

This shift is crucial. AI will not go away, and its capabilities will only grow. If we frame our students' relationship with AI as adversarial—something to detect, police, and punish—we miss an opportunity to teach them how to engage critically with it. But if we teach them to use AI as a thought partner, we give them a skill that will serve them long after they leave our classrooms.

Call to Action

As writing instructors, we have a choice in how we frame AI for our students. I invite you to:

Experiment with AI as a conversation partner yourself before introducing it to students

Design assignments that leverage AI's strengths as a thought partner rather than trying to "AI-proof" your existing assignments

Explicitly teach students how to engage in productive dialogue with AI—how to ask good questions, challenge AI's assumptions, and use it to refine rather than replace their thinking

Share your experiences, both positive and negative, with colleagues to build our collective understanding of effective AI integration

As writing instructors, we shape how our students will use AI through the perspective we bring to it. When we view AI as a conversation partner rather than a paper mill, we transform what could be a threat into a powerful ally. Our students will use AI regardless of our policies. The real question is whether they'll use it thoughtfully, critically, and ethically. Those are skills they can only develop if we guide them to see AI as I now do: not as a replacement for thinking, but as a catalyst for deeper thought.

This post was created in collaboration with AI tools, reflecting my commitment to understanding both the potential and limitations of AI in writing instruction.

Check out some of our favorite Substacks:

Mike Kentz’s AI EduPathways: Insights from one of our most insightful, creative, and eloquent AI educators in the business!!!

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!!!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection among computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s Mostly Harmless Ideas: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Riccardo Vocca’s The Intelligent Friend: An intriguing examination of the diverse ways AI is transforming our lives and the world around us.

Jason Gulya’s The AI Edventure: An important exploration of cutting edge innovations in AI-responsive curriculum and pedagogy.