Neurons to Networks: Bridging Human Cognition and Artificial Intelligence, Part 2

From the Human Brain to the Search for Intelligent Machines (A Collaboration with Alejandro Piad Morffis)

Thank you all for the incredible support for Part 1 of this series! I'm truly convinced that we have one of the most passionate and dedicated Education x AI readerships currently out there. A special shoutout to Alejandro Piad Morffis for his exceptional contribution to Part 1. Alejandro masterfully navigated the realms of symbolism and connectionism, showcasing his instructional prowess. I am among the many who firmly believe that the future of AI resides at the crossroads of these two influential schools of thought.

In Part 2, we'll continue our exploration into the physical and technical structures of the brain and artificial intelligence, seeking instructional applications. We'll delve into how their distinct structures could shape the integration and implementation of AI in educational settings. We aim to investigate how their unique mechanisms might influence the development of writing curricula that enable students to engage with new technologies effectively, ethically, sustainably, and productively. This is the task at hand.

Source: Time

In the next article of our series, Alejandro and I will delve into the comparative learning processes of humans and machines. An intriguing piece of research has recently been published by Nature, conducted by NYU researchers using AI to study infant language acquisition. For a detailed analysis of this study, don't miss this post by Suzi.

Alejandro Piad Morffis

Alejandro's repertoire is astounding: a highly accomplished computer science professor, a deft machine learning engineer, a visionary entrepreneur, a creative science fiction author, and an adept online community builder. But more than his professional accolades, it's the genuine humanity he brings to his endeavors that I find most admirable. His work is infused with an infectious energy and unwavering integrity.

I eagerly anticipate his insightful contributions on his various Substack platforms. His writings are a testament to his diverse experiences, profound knowledge, and philosophical depth. Unlike many in the tech domain, Alejandro's content stands the test of time beautifully. Here are links to Alejandro’s most prominent Substacks. I encourage my readers to dive in and explore.

Now without further ado: the nature of the physical apparatus that drives learning in the human and AI context.

Check out Part 1 for Sections 1 and 2.

Part 1 Overview:

Introduction:

In 2024, I'm advancing Educating AI to fuse AI technologies into writing education, emphasizing the distinction between "information" and "knowledge." This approach connects literacy with computational studies, defining information as raw data and a computational element, and knowledge as processed information offering depth and adaptable models for new problems. The curriculum advocates for an interdisciplinary strategy and is supported by community subscriptions.

Section 1: The Genius of the Brain

The human brain, a pinnacle of evolutionary complexity, comprises intricately interconnected components—namely the brainstem, cerebellum, cerebrum, and cerebral cortex—that orchestrate a wide spectrum of cognitive and physical functions.

From the brainstem's regulation of basic life functions to the cerebellum's role in movement coordination and cognition, and the cerebrum's oversight of higher-level processes such as reasoning, emotional regulation, and voluntary movement, each segment contributes to our profound intellectual and analytical capabilities.

The cerebral cortex, encasing the cerebrum, is pivotal for advanced processing across specialized lobes, underscoring the brain's capacity for nuanced thought, complex behavior, and neuroplasticity.

This cohesive system not only facilitates sophisticated communication and integration across regions but also adapts and learns through a dynamic relay of sensory information, highlighting the brain's role in shaping our perceptions and experiences, thus illustrating the intricate interplay between our biological makeup and the transformative process of learning.

Source: Paul Pangaro

Section 2: The Road to Artificial Intelligence

The evolution of artificial intelligence (AI) unfolds from foundational principles to complex applications, transitioning from basic computational models to advanced systems. Ada Lovelace's analysis of Babbage's Analytical Engine highlighted the potential of computing machines to perform sophisticated tasks rooted in core computational principles. Cybernetics brought forth the concept that systems with feedback loops could display intricate behaviors, emphasizing the simple principles behind complex emergent behaviors.

Alan Turing's work proposed that machines could emulate learning and intelligence from foundational operations, illustrating the journey from basic concepts to elaborate capabilities. The development of symbolism and connectionism as AI paradigms represented this shift, with symbolism focusing on rule-based symbol manipulation, and connectionism on interconnected units for cognitive functions, resembling the brain’s architecture.

AI winters exposed the scalability challenges of these principles, but the field's persistence led to the deep learning revolution, leveraging neural networks for complex problem-solving with enhanced computing power. This narrative highlights AI's progression from fundamental theories to its current complex applications, driven by the underlying core concepts.

Source: Nvidia Developer

Section 3: Human Brains and AI: An Emerging Partnership

Now, zooming in on how neural networks work: artificial neural networks, the models behind deep learning, are built from small computing units that receive inputs and produce outputs based on those inputs. While it is common to compare artificial neurons to biological neurons, this analogy quickly breaks down and is not entirely helpful for understanding modern neural networks. Unlike the human brain, most neural networks in common use are primarily feedforward: information flows in one direction, from input to output, without the pervasive feedback loops found in biological neural circuits.
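To make the "small computing unit" idea concrete, here is a minimal sketch of an artificial neuron and a feedforward pass in plain Python. The weights, the sigmoid activation, and the names `neuron`, `layer`, `feedforward`, and `toy_network` are illustrative assumptions, not taken from any particular model.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias, squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is just several neurons reading the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def feedforward(inputs, layers):
    """Pass activations through each layer in order; nothing ever flows backward."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations

# Hypothetical weights, chosen by hand for illustration only.
toy_network = [
    ([[0.5, -0.2], [0.1, 0.8]], [0.0, -0.1]),  # hidden layer: 2 inputs -> 2 neurons
    ([[1.0, -1.0]], [0.0]),                    # output layer: 2 inputs -> 1 neuron
]

output = feedforward([1.0, 0.0], toy_network)
print(output)
```

Each layer's output becomes the next layer's input, and that is the whole computation: there is no path by which a later layer can influence an earlier one during this pass.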

Specific structures in the brain, such as the visual processing centers, share similarities with the artificial neural networks used for vision, particularly convolutional neural networks.
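As a rough illustration of what a convolutional layer computes, the sketch below slides a small filter across a toy image and records how strongly each patch matches it. The function name `convolve2d`, the 3x4 image, and the hand-picked edge filter are assumptions for this example; in a real network the filter values are learned rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide a small kernel over the image and sum the elementwise products.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical filter that responds where brightness rises from left to right.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The filter fires only at the column where dark meets bright. This local, position-by-position detection is the loose point of resemblance to early visual processing.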

However, many modern neural network architectures, such as transformers and attention models, have no biological basis and are purely mathematical designs aimed at leveraging the computational capabilities of modern hardware, such as GPUs and TPUs.
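To show how purely mathematical such a design is, here is a minimal sketch of scaled dot-product attention, the core operation in transformers, in plain Python. The function names and the toy vectors are assumptions for illustration; real implementations run the same arithmetic as batched matrix operations on GPUs or TPUs and add learned projections that are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs

# Toy example: one query, two keys, two values (all numbers hypothetical).
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

result = attention(queries, keys, values)
print(result)
```

The query is more similar to the first key, so the output leans toward the first value. Nothing in the arithmetic (dot products, a softmax, a weighted average) refers to how neurons behave.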

The initial concept of connecting artificial neurons may have biological inspiration, but modern neural network design is primarily driven by mathematical principles and hardware considerations rather than neurological brain processes. Therefore, it is essential to recognize the limitations of the biological analogy when discussing neural networks in the context of artificial intelligence and deep learning.

As for the limitations: connectionism suggests that complex behavior emerges from simple units when they are connected in the right way. This concept is grounded in the idea that evolution has explored numerous combinations of neurons and selected those that work. But who can say that biological brains are the optimal learning machines?
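A classic small illustration of the connectionist claim is the XOR function. No single threshold unit can compute it, but three simple units wired together can. The sketch below uses hand-picked weights and hypothetical names (`threshold_unit`, `xor`); it is one possible wiring, not a canonical one.

```python
def threshold_unit(inputs, weights, bias):
    """A very simple unit: fire (1) if the weighted sum plus bias is positive, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

def xor(a, b):
    """Exclusive-or built from three threshold units with hand-picked weights."""
    either = threshold_unit([a, b], [1, 1], -0.5)  # fires if at least one input is on (OR)
    both = threshold_unit([a, b], [1, 1], -1.5)    # fires only if both inputs are on (AND)
    # Final unit: "either, but not both".
    return threshold_unit([either, both], [1, -2], -0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor(a, b))
```

Each unit on its own only draws a single straight dividing line through its inputs. The more complex behavior comes entirely from how the units are connected.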

Contrary to following nature as a guiding principle, the inspiration for symbolic systems lies in the ability to utilize knowledge and rules to form logic and inference, independent of evolutionary or biological pathways.

Moreover, drawing inspiration from nature for artificial brain development proves limited when considering the stark differences between brain learning and algorithms used in neural network training. In essence, relying solely on nature as inspiration is not helpful when your engineering challenges are far from those faced by biological brains.
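For readers who have not seen a training algorithm, the following minimal sketch shows gradient descent on a single weight. It is a deliberately tiny stand-in for how networks are typically trained, with hypothetical names and data; real training applies the same idea to millions or billions of weights via backpropagation.

```python
def train_weight(examples, learning_rate=0.1, steps=100):
    """Fit y = w * x by repeatedly nudging w against the gradient of the squared error."""
    w = 0.0
    for _ in range(steps):
        # Average gradient of (w * x - y) ** 2 with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in examples) / len(examples)
        w -= learning_rate * grad
    return w

# Hypothetical data generated by the rule y = 2 * x.
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

learned = train_weight(examples)
print(learned)
```

The procedure is a global, explicit error calculation followed by a precise numerical correction, repeated many times over the same data. That is quite unlike anything a single biological neuron is known to do, which is the kind of difference the paragraph above points to.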

Addressing consciousness within connectionism implies a materialistic viewpoint, linking behavior emergence to the interaction of physical components such as neurons. This approach raises the hard problem of consciousness, as current scientific frameworks lack the tools to explain the emergence of consciousness from non-conscious elements.

Consequently, accepting connectionism as a model for brains implies acknowledging materialism in consciousness, a concept many may find uncomfortable once they grasp its implications. Overall, this understanding prompts the need for a new science of consciousness to comprehensively address these complexities. Now, take this with a massive grain of salt, as philosophy of mind is something I'm very far from an expert in.

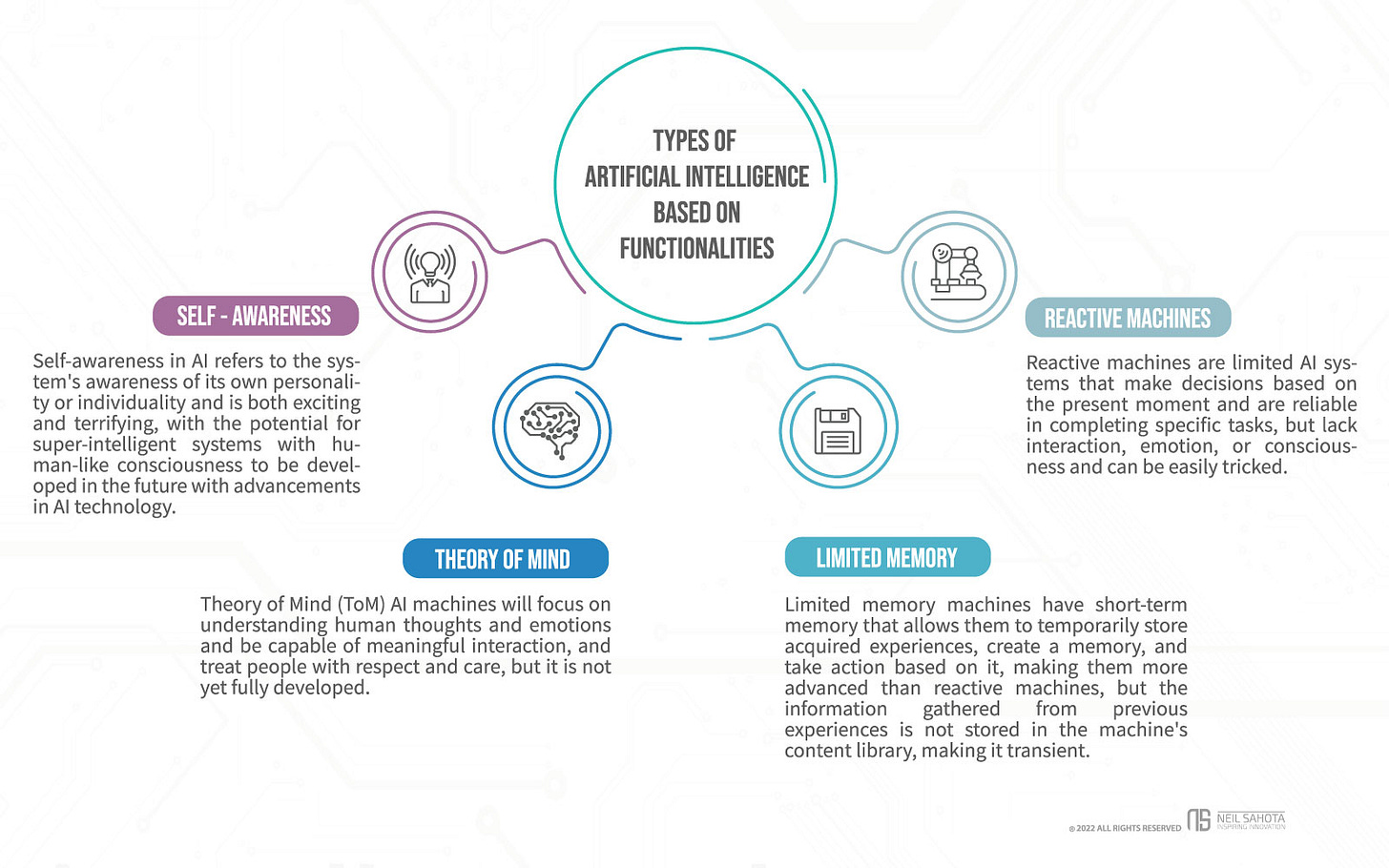

Source: Neil Sahota

Section 4: The Human Brain, AI, and the Classroom

An educator delving into the preceding discussion could feel overwhelmed by the intricate and distinct nature of these two topics. On one hand, the brain, as a remarkably complex biological entity, presents a labyrinth of intricacies. On the other, the history of exploring intelligence is a rich tapestry interwoven with enigmatic concepts, subtle nuances, and ambiguities. The question arises: How do these complex subjects relate to the fundamental theories and everyday realities faced by a classroom teacher?

Here, I'd like to propose a few initial ideas that Alejandro and I will explore in more depth in the next post of this series, where we'll delve into the similarities and differences between human and AI learning processes. Although AI systems, and particularly large language models, are becoming more complex with each new iteration, their physical and mechanical complexity still falls well short of the structure, organization, and functioning of the human brain.

In an important recent conversation with Daniel Bashir, neuroscientist Peter Tse emphasized his "degrees of complexity" thesis, highlighting several key points:

1. The concept of "circular causation" or "two-way" connectivity in brain neural interactions.
2. The capacity of neurons to alter synaptic connections amidst a chemical exchange.
3. The extensive range of criteria processed simultaneously in synaptic interactions.
4. The lack of mental operations in Large Language Models (LLMs).

While some in the AI community might challenge the fourth point, the first three are generally accepted as true. One particularly valuable insight for educators from Peter Tse is his view of LLMs as interactive theories of the mind. This perspective is echoed by Ted Gibson, Director of the MIT Language Lab, who describes large AI models as programmable yet unpredictable theories of language.

This concept is further supported by the Department of Education's report on "Artificial Intelligence and the Future of Teaching and Learning," which describes AI as a model that serves as "an approximation of reality useful for identifying patterns, making predictions, or analyzing alternative decisions."

However, the interpretations offered by Tse and Gibson are notably more stimulating and educationally potent. They suggest that LLMs provide a realm where students can engage and immerse themselves in diverse cognitive experiences and explorations of capacities and limits of human language.

The educational possibilities are immense, but they depend crucially on thoughtful consideration of the nature of this immersion. In our educational designs, it is vital that our students stay conscious of the mediated nature of these immersions. Clearly, designing a curriculum that leverages the dual facets (immersion/mediacy) of these technologies requires considerable creativity and a reimagining of traditional concepts and methods.

The preceding analysis suggests that methods that help teachers differentiate between knowledge generation and information processing will be of great utility in daily classroom scenarios. This comparison underscores the unique capabilities of the human brain versus artificial intelligence. Human cognition's exceptional adaptability and responsiveness make it an unparalleled resource for addressing intellectual, analytical, and logistical challenges.

As Alejandro pointed out elsewhere, no AI tool has yet mastered the ability to generalize across domains without retraining, something the human brain does effortlessly every day. Meanwhile, Large Language Models (LLMs) have demonstrated impressive abilities to process data and generate complex, sometimes unexpected, responses. These responses are so advanced that humans can now collaborate with these tools in the realms of language, imagery, sound, and music to produce works of extraordinary beauty, insight, creativity, and innovation.

However, it is essential to remember that humans drive these interactions. Even when a GPT operates autonomously, the underlying processes that enable its creativity ultimately trace back to human ingenuity. Therefore, I emphasize the importance of distinguishing between knowledge creation and information processing.

Despite the potential for overlap in learning contexts or automated reasoning, this distinction remains a crucial educational tool. Teachers and students alike must feel empowered in their use of AI, maintaining control over their engagement. A strategic way to reinforce this perspective is by viewing the human element as the catalyst that converts AI-generated data into meaningful knowledge.

And thanks to you, the readers of Educating AI! For without you, there would be no electricity in this rhetorical situation. You are the magic that makes the educational movement possible.

Thanks again to Alejandro for his brilliant contributions to this article. And thanks to all our readers for their ongoing engagement and support!

Alejandro Piad Morffis and Nick Potkalitsky

Thanks, Suzi! We pushed each other on this one. It was a real treat to think alongside Alejandro. What a brain!

Yup, the "brain neurons = neural networks" analogy is exactly the mental model I always fall back on as a shortcut. (Unsurprisingly, since that's basically the analogy most commonly used.) I also never stopped to consider that there might not be a feedback loop in a neural network.

Love to see you dig deeper here. Thanks!

Looking forward to the next chapter.