The Value of Doing Things

What AI Agents Mean for Teachers: A Guest Post by Jason Gulya

Join Mike Kentz and me for our upcoming Webinar, “Empowering Teachers to Shape AI in Education” on Wednesday, Nov 18, 12-1pm!!!

Our new eBook, AI in Education: A Roadmap for Teacher-Led Transformation, is designed to spark proactive change among educators, offering ethical and practical tools to build AI literacy.

Nick’s Introduction:

I'm thrilled that Jason agreed to write a guest post for Educating AI! Jason Gulya, Professor of English and Applied Media at Berkeley College in New York City, brings incredible expertise to this conversation. He sits on numerous AI Higher Ed Leadership Councils, runs a highly successful Higher Ed AI consulting service, and has become one of our most important thought leaders on AI x Education. His insightful posts reach a network of 30,000 LinkedIn followers.

I’d highly encourage readers to sign up for Jason’s Substack:

I love how Jason describes his AI journey in his LinkedIn bio with characteristic honesty:

"My life changed when ChatGPT was released.

I remember playing with the platform, and then turning to my wife to say.

'I just found the most awful thing. I found the future of plagiarism.' I'm not particularly proud of that moment, now. But I share it because it gives a sense of where I started.

Since then, I've spent hundreds of hours on ChatGPT and similar AI programs. I've come to a different conclusion:

AI is the future of education."

In today's essay, Jason tackles a crucial issue in current instructional discourse around AI integration. As AI models become more agentive, AI trainers and literacy experts increasingly push instructors to split writing into two separate processes: planning vs. implementation, or as Jason puts it, "thinking" vs. "doing." But here's the thing – a century of writing pedagogy (as I discussed in my October 2023 post) tells us that writing itself is a mode of thinking.

I can't wait for you all to read this article! Please feel free to connect with Jason – beyond diving into deep philosophical questions about AI pedagogy, he's doing cutting-edge work developing instructional designs with his Berkeley College students.

If you are interested in reading more about AI Agents, check out my series from the summer:

“The Value of Doing Things: What AI Agents Mean for Teachers”

AI Agents make me nervous. Really nervous.

I wish they didn’t.

I wish I could write that the last two years have made me more confident, more self-assured that AI is here to augment workers rather than replace them.

But I can’t.

I wish I could write that I know where schools and colleges will end up. I wish I could say that AI Agents will help us get where we need to be.

But I can’t.

At this point, today, I’m at a loss. I’m not sure where the rise of AI agents will take us, in terms of how we work and learn. I’m in the question-asking part of my journey. I have few answers.

So, let’s talk about where (I think) AI Agents will take education. And who knows? Maybe as I write I’ll come up with something more concrete.

It’s worth a shot, right?

Anthropic’s Announcement in Context

AI Agents aren’t new. We’ve known for a long time that AI programs would not stay contained in our browsers and that we’d soon have tools that could jump between applications and take over our computers.

In fact, HyperWrite’s AI Agent has been up and running for about a year.

So when Anthropic announced Claude’s new Computer Use, it wasn’t doing anything new. But still, for many, it was a bit shocking. Here’s the demo:

We’ll be able to give Claude a task and it will (1) break the task up into subtasks and (2) take over our computer to complete those subtasks.

Even in the demo itself, the AI Agent is clunky. It gets distracted and needs a lot of redirection.

But I suspect that the technology will advance quickly. For one, the market only has a few players at the moment. Things tend to speed up when competition picks up.

For another, a well-functioning AI Agent seems (in my eyes) like a tantalizing option for many companies. If a company could hand a task or job that a human now does directly to an AI Agent, the financial gain would be almost immediate.

In other words, I suspect that the rise of AI Agents will put pressure on the “AI is here to augment us, not replace us” thesis. And between you and me, I don’t think that thesis will hold up well.

A good portion of AI advancement will come down to employee replacement. And AI Agents push companies towards that.

And who knows? Maybe Jensen Huang, the founder and CEO of Nvidia, is right. Maybe workers will soon have access to 2,000 brilliant minds to help complete their work. Maybe our students will be a part of that future, and they’ll have thousands of different AI Agents that they can direct.

What does education even look like in this context?

AI Agents and Education

The rise of AI Agents will put a lot of pressure on education. Once this technology improves and becomes more widely accessible, students will have yet another option for offloading cognitive tasks.

Leon Furze had a similar idea:

For a student, this will be quite tempting. Rather than prompting an AI program like ChatGPT or Claude and then refining and revising the output before submitting it, students can simply hand off the entire set of tasks to an AI Agent.

More use cases will emerge as we are exposed to AI Agents more. Leon’s idea is probably just the tip of the iceberg.

I don’t have answers for how to respond to this possibility as a teacher.

I only have questions:

How can we encourage students to see the value of doing work by themselves, even if an AI program could do it faster (and maybe even better)?

What are the effects of this technology going to be on student motivation?

Are AI Agents going to kill the idea of “AI-proofing” assessments?

Will this be even more immediately disruptive than the rise of ChatGPT?

These are the questions driving my thought process right now.

Behind these questions lies a more abstract, more philosophical one: what is the relationship between thinking and doing in a world of AI Agents and other kinds of automation?

The Thinking/Doing Dichotomy

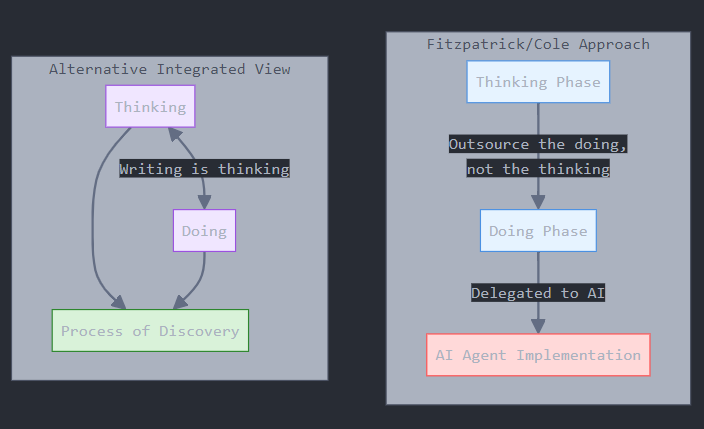

As soon as I watched the video for Claude’s Computer Use feature, I thought back to Dan Fitzpatrick’s The AI Classroom (2023). In particular, I thought of an essay in which Nicholas Cole argues that we need to focus less on actually doing things and more on knowing how to delegate that doing to a bot.

Dan captures it quite succinctly, in an image he posted on LinkedIn that same year:

“Outsource the doing, not the thinking.” That’s the idea that, without really trying, jumped back into the forefront of my mind.

I do want to put pressure on this idea.

To what extent are thinking and doing separate enterprises?

The answer depends on context. For some activities, we can think beforehand or we can think as we watch AI Agents perform actions.

But for many activities, doing is central to thinking.

For years, I’ve taught writing as a process of discovery. My students come in thinking of writing as linear: they think, decide what their opinion is, and then they just express that opinion. I had a similar idea all throughout high school.

It wasn’t until college that I realized that writing was not merely a way to express my ideas. It was a way to arrive at, refine, and put pressure on those ideas. Writing is thinking.

Now, of course, that’s just a more specific, more teacherly way of stating something even more fundamental to how humans process. Many times, we think through doing something.

Thinking and doing aren’t separate.

So, what happens when students have more and more chances to offload the “doing” to a bot?

A Human-Centered Approach

As I’ve mentioned, I don’t have many answers here.

All I really know is that we need to start rearticulating the value of process (of doing the task ourselves) even if students can easily ask an AI Agent to do the same task.

But I do see one path forward. It’s to stop putting AI front and center in our discourse.

I see this question A LOT on social media: “Is it worth assigning our students something that AI can do?”

I get it. I really do.

But one problem is that what “AI can do” is shifting constantly.

Another problem I have with this formulation: we should keep humans front and center, not AI. I much prefer this question: “Is doing this valuable for our students?” It’s certainly connected, but it focuses less on what an AI could do and more on how a particular process could help a student grow.

This way, we don’t let the capabilities of a bot control the value of human action.

It’s far from a new question. We should have been asking it for years. And the increasing access to Generative AI has forced us to ask it more and more.

My Burning Questions

On theme, I’ll end with some of my biggest questions on this topic.

What does this all mean for how we move forward as educators?

How can educators strike a balance, so that we’re encouraging students to see the value of the process but also not simply giving them work that a bot could do?

Where does agency fit into the picture? How can we give students control over the process (and maybe even the assignments), and will this help them lean into the hard work of learning?

Image for Thought!!!

Figure 1. Here Nick uses Claude to envision Jason’s revision to Fitzpatrick’s and Cole’s AI work cycles.

Introducing Two AI Literacy Courses for Educators

Pragmatic AI for Educators (Pilot Program)

Basic AI classroom tools

Cost: $20

Pragmatic AI Prompting for Advanced Differentiation

Advanced AI skills for tailored instruction

Cost: $200

Free 1-hour AI Literacy Workshop for Schools that Sign Up 10 or More Faculty or Staff!!!

Free Offer:

30-minute strategy sessions

Tailored course implementation for departments, schools, or districts

Practical steps for AI integration

Interested in enhancing AI literacy in your educational community? Contact nicolas@pragmaticaisolutions.net to schedule a session or learn more.

Check out some of my favorite Substacks:

Mike Kentz’s AI EduPathways: Insights from one of the most insightful, creative, and eloquent AI educators in the business!!!

Terry Underwood’s Learning to Read, Reading to Learn: The most penetrating investigation of the intersections between compositional theory, literacy studies, and AI on the internet!!!

Suzi’s When Life Gives You AI: A cutting-edge exploration of the intersection among computer science, neuroscience, and philosophy

Alejandro Piad Morffis’s Mostly Harmless Ideas: Unmatched investigations into coding, machine learning, computational theory, and practical AI applications

Amrita Roy’s The Pragmatic Optimist: My favorite Substack that focuses on economics and market trends.

Michael Woudenberg’s Polymathic Being: Polymathic wisdom brought to you every Sunday morning with your first cup of coffee

Rob Nelson’s AI Log: Incredibly deep and insightful essays about AI’s impact on higher ed, society, and culture.

Michael Spencer’s AI Supremacy: The most comprehensive and current analysis of AI news and trends, featuring numerous intriguing guest posts

Daniel Bashir’s The Gradient Podcast: The top interviews with leading AI experts, researchers, developers, and linguists.

Daniel Nest’s Why Try AI?: The most amazing updates on AI tools and techniques

Riccardo Vocca’s The Intelligent Friend: An intriguing examination of the diverse ways AI is transforming our lives and the world around us.

Jason Gulya’s The AI Edventure: An important exploration of cutting edge innovations in AI-responsive curriculum and pedagogy.

I was really excited to write this for the newsletter!

Great questions, Jason. You won't be surprised to know that I, too, am suspicious of the distinction between doing and thinking. There is no better example than distinguishing between thinking and writing. I have what seems like absolutely brilliant thoughts in the shower. And when I start to write them down, I enter into a process in which the thinking and doing are integrated, and more challenging.

Nick has a post from a few months ago that I need to find that contrasts "automaticity" and agency. These features that do things by "taking over our computers" are not actually the actions of AI agents. That is an illusion. They are complex and flexible automations, and we should take care when using them, especially in situations where humans can be hurt by the results.

If we have learned anything about the automation of knowledge work in the past five years, it is that the more important and socially valuable the task, the more careful we should be in ensuring human judgement is guiding the work. Humans are not perfect, but at least they have the capacity to think about what they are doing.